What To Expect From AMD's June Datacenter, AI Shindig

Comment AMD is just weeks away from unveiling the next phase of its datacenter portfolio at a swish launch in San Francisco.

Having shown off its latest mainstream datacenter chips in November, the Epyc processor designer is expected to spill the tea on its upcoming specialized components for cloud, AI, and technical computing.

So, before CEO Lisa Su takes to the stage on June 13, we thought we'd run through all the products we expect AMD to announce.

AMD will send an APU to the datacenter

Given all the hype around generative AI of late, let's kick things off with AMD's accelerated processing unit (APU), the Instinct MI300A.

APUs have been a mainstay of AMD's PC and embedded electronics lineup: they feature a CPU cluster with a built-in graphics processor, and can handle a combination of computation and parallel-processing workloads.

Rather than personal computing, MI300A will have a very different focus: AI/ML and high-performance computing. In fact, we now know the chip will be the brains of El Capitan, the forthcoming supercomputer at the US Lawrence Livermore National Laboratory.

The MI300A is also unlike any APU we've seen from AMD to date — well, save for one. Back in 2017, Intel and AMD teamed up to pair an Intel CPU die with an AMD Radeon GPU and HBM2 memory. The MI300A will follow a similar pattern, but instead of Intel CPU cores, it'll use AMD's in-house Zen family cores and pack a lot more GPU performance.

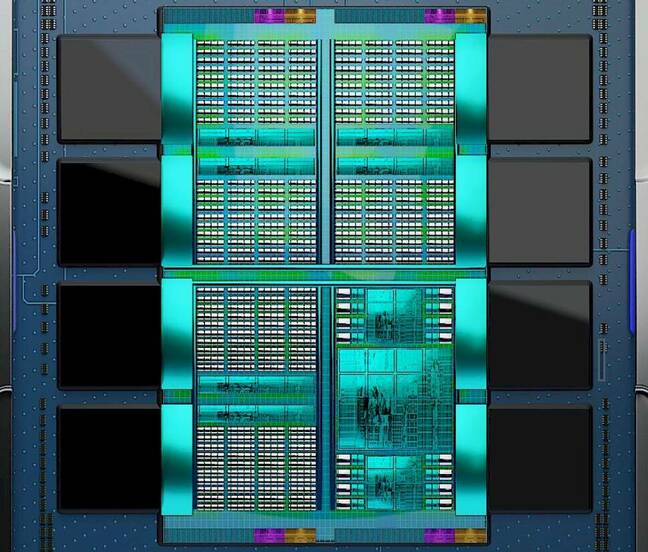

AMD has been fairly tight-lipped so far, but has revealed that the MI300A will feature 24 Zen 4 cores, 128GB of HBM3 memory, and from the renderings what appears to be six CDNA GPU dies.

Curiously, it appears AMD may not be using the same chiplet architecture found in last year's Epyc 4 Genoa series. The MI300A renderings appear to show two core-complex dies (CCDs) – what AMD calls its CPU chiplets. Judging from the picture, which could be obfuscated or just wrong, AMD may actually use two 16-core CCDs, like the ones we expect to find on Bergamo – more on that later – rather than two 12-core CCDs. Dual 16-core CCDs would be too many cores, but it's not uncommon for AMD to disable cores on its CCDs, bringing the working number down from 32 to 24.

If this is in fact the case, AMD may have done this to maintain a higher core clock for the given power budget.

AMD has revealed that the chip will deliver an 8x uplift in AI performance and a 5x improvement in performance per watt compared to the MI250X, which underpins the 1.1 exaFLOPS Frontier supercomputer. A decent portion of this uplift likely comes from the addition of FP8 support this time around. Lower precision usually yields higher overall throughput in AI workloads at the expense of reduced accuracy.

Considering a single Epyc 4 can consume upwards of 400W and the MI250X has a power budget of about 600W, it's safe to assume the MI300A won't be frugal when it comes to power. Our colleagues over at The Next Platform estimate that the chip will deliver somewhere in the neighborhood of 3 petaFLOPS of FP8 performance on a 900W power budget. That would make the chip less power hungry than Nvidia's Grace-Hopper superchip, but not quite as powerful.
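
To put that estimate into efficiency terms, here is a back-of-the-envelope sketch. The 3 petaFLOPS and 900W figures are The Next Platform's estimates quoted above, not AMD specifications, and the helper name `tflops_per_watt` is ours, purely for illustration.

```python
def tflops_per_watt(petaflops: float, watts: float) -> float:
    """Convert a peak throughput figure and a power budget into TFLOPS per watt."""
    return petaflops * 1000 / watts


# Estimated figures quoted in the text (assumptions, not confirmed by AMD).
mi300a_fp8_petaflops = 3.0
mi300a_watts = 900

mi300a_efficiency = tflops_per_watt(mi300a_fp8_petaflops, mi300a_watts)
print(f"MI300A (estimated): {mi300a_efficiency:.2f} FP8 TFLOPS per watt")
```

Plugging in a rival's published peak FP8 figure and power budget would give a like-for-like comparison, with the usual caveat that peak numbers rarely reflect real workloads.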

The MI300A is expected to be just one of several SKUs to bear the name. We fully expect there to be a GPU-only configuration. Looking at the package renders, it sure looks like AMD could fit an extra two GPU dies on there, once the CCDs and I/O die have been stripped away.

As for socket options, the MI300A looks like it will support at least four-socket configurations. From what we've seen of El Capitan, it appears that each node will feature four APUs.

From a market standpoint, nobody else has a chip quite like it. Intel's Falcon Shores XPU was supposed to feature a similar CPU+GPU configuration, but that project was canned in favor of a regular GPU. That leaves Nvidia's Grace-Hopper superchip as AMD's chief competitor.

With that said, they're very different beasts. The MI300A is shaping up to be a proper APU with direct die-to-die communications and a shared pool of memory. Grace-Hopper differs in that it glues together a 72-core Arm-compatible CPU with a 96GB H100 GPU using Nvidia's NVLink-C2C interconnect.

AMD will challenge Ampere with a cloud-focused CPU of its own

Since the introduction of AMD's first Epyc processors in 2017, the chips have seen steady adoption by cloud providers prioritizing core-density over individual core performance.

Over the years, we've seen AMD's chips go from 32 cores to 64, and most recently to 96. However, starting in 2020, a competitor arrived, promising even higher core counts. Ampere's Arm-compatible Altra processors offered 80 and eventually 128 cores and were aimed at cloud providers, who gobbled them up.

The arithmetic was rather simple: more CPU cores meant customers could comfortably pack more VMs and containers into a single box. To address this emerging market segment, AMD revealed it was working on a core-optimized chip of its own. At its Accelerated Data Center event in late 2021, AMD teased a 128-core processor called Bergamo that was designed specifically for cloud-native workloads.

We expect the chip, which was initially planned for early this year, to be one of AMD's big announcements at June's event. So here's what we know about the chip so far.

Bergamo will introduce a new variation of AMD's Zen 4 core called Zen 4c. We also know that Bergamo will feature a different core configuration than Epyc 4 Genoa. Instead of 12 eight-core CCDs, it's our understanding that Bergamo will feature eight 16-core CCDs to achieve its 128-core target.
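
The core-count arithmetic can be checked with a short sketch. The Genoa layout is as described above; the Bergamo layout is our understanding rather than a confirmed spec, and the `ChipLayout` class is a hypothetical helper for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChipLayout:
    """A CPU package described by its number of CCDs and the cores on each."""
    name: str
    ccds: int
    cores_per_ccd: int

    @property
    def cores(self) -> int:
        return self.ccds * self.cores_per_ccd


genoa = ChipLayout("Genoa", ccds=12, cores_per_ccd=8)
# Assumption: eight 16-core CCDs, per the reporting above (unconfirmed by AMD).
bergamo = ChipLayout("Bergamo", ccds=8, cores_per_ccd=16)

for chip in (genoa, bergamo):
    print(f"{chip.name}: {chip.ccds} x {chip.cores_per_ccd} = {chip.cores} cores")
```

Fewer but denser chiplets is what gets Bergamo from 96 to 128 cores in this reading, which is why the per-core footprint has to shrink.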

This denser core arrangement tells us that the Zen 4c core will likely be cut down compared to the full-fat Zen 4 cores found in Genoa. If we had to guess, AMD is probably shrinking the already large L3 cache — each CCD has 32MB on Genoa — to make room for the extra cores. Though that's a guess, and AMD may have stripped out additional functionality of low utility to cloud customers.

We also don't know much about the kind of performance we can expect from the chip's new Zen 4c cores, but we can make some educated guesses. If AMD follows Ampere's example, we can expect Bergamo to prioritize consistent clock speeds over boost frequencies. In other words, relatively high base clocks, but not much if anything in terms of boost clocks.

We also suspect that AMD will maintain similar thermal design power (TDP) targets for the chip as we saw in Epyc 4, putting it in the 360-400W range. This alone would necessitate a more conservative frequency scaling than in previous Epyc parts due to the chip's higher core count.
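
A rough sketch shows why the higher core count squeezes clocks. It assumes, purely for illustration, that both chips run at the top of the 360-400W range and that the whole TDP is divided evenly among the cores; real parts also spend power on the I/O die, memory controllers, and fabric.

```python
def watts_per_core(tdp_watts: float, cores: int) -> float:
    """Naive per-core power budget: the whole TDP split evenly across cores."""
    return tdp_watts / cores


TDP_WATTS = 400  # assumption: top of the 360-400W range for both chips

genoa_budget = watts_per_core(TDP_WATTS, 96)     # 96-core Genoa
bergamo_budget = watts_per_core(TDP_WATTS, 128)  # 128-core Bergamo (expected)

print(f"Genoa:   {genoa_budget:.2f} W per core")
print(f"Bergamo: {bergamo_budget:.2f} W per core")
print(f"Bergamo cores get {bergamo_budget / genoa_budget:.0%} of a Genoa core's budget")
```

Under those simplifications each Bergamo core has about three quarters of the power a Genoa core gets, which is consistent with expecting more modest boost behavior.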

One area where Bergamo will differ from Ampere's cloud-native chips is support for simultaneous multithreading. A single Bergamo chip will sport 128 cores and 256 threads. In addition to multi-threading, it's worth remembering that these AMD cores are still x86-64. That means if your application runs on an Epyc or Xeon today, it should run on Bergamo without issue. Yes, you can recompile or port software, if possible, but you know what we mean.

The same can't always be said of Ampere Altra – or AWS's Graviton for that matter – despite considerable effort by Arm to certify systems and cloud instances running on its instruction set. Migrating workloads to these chips isn't always a given.

Despite this, AMD won't have the first mover advantage here. The market for cloud-optimized silicon has grown considerably hotter over the past few years, and Bergamo will have to contend with not only Ampere's latest generation of Arm CPUs, but Intel's own core-optimized parts before long.

Ampere recently showed off its AmpereOne family of chips, which will top out at 192 cores. Meanwhile, Intel has promised a 144-core Xeon called Sierra Forest for the first half of 2024. So while AMD will beat Intel to market, it won't have a core-lead over its long-time rival.

AMD Genoa-X will pile on the cache

Bergamo isn't the only chip we expect to see at AMD's June event. The biz is expected to launch its second generation of "technical" processors, codenamed Genoa-X.

These chips are designed for a variety of technical computing applications, such as computational fluid dynamics, databases, and other bandwidth-intensive workloads.

Introduced in 2021 with the announcement of Milan-X, the chips feature an advanced packaging technique that layers additional SRAM atop the chip's CCDs. Using this method, AMD was able to pack an additional 64MB of L3 cache on each die for a total of 768MB of L3 on its top-specced chip.

As we reported last June, Genoa-X will push that to more than 1GB of L3 per socket. This makes sense since Genoa has four more CCDs than Milan, but it also suggests that AMD isn't doing anything special this time around.

With 96MB of L3 per CCD and 12 CCDs on AMD's 96-core Genoa-X chips, that works out to 1,152MB of L3 cache per socket.
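
The cache totals for both generations follow from the same sum. This sketch assumes 32MB of base L3 plus 64MB of stacked SRAM per CCD on both parts, and eight CCDs on Milan-X (inferred from Genoa having four more); the function and constant names are ours.

```python
BASE_L3_MB = 32     # L3 built into each CCD
STACKED_L3_MB = 64  # extra SRAM layered on top of each CCD


def total_l3_mb(ccds: int) -> int:
    """Total L3 per socket when every CCD carries base plus stacked cache."""
    return ccds * (BASE_L3_MB + STACKED_L3_MB)


milan_x_l3 = total_l3_mb(8)    # assumption: eight CCDs on top-specced Milan-X
genoa_x_l3 = total_l3_mb(12)   # twelve CCDs on 96-core Genoa-X

print(f"Milan-X: {milan_x_l3} MB")
print(f"Genoa-X: {genoa_x_l3} MB ({genoa_x_l3 / 1024:.3f} GB)")
```

Both results line up with the figures quoted above, which supports the reading that the per-CCD recipe is unchanged and the gain comes from the extra chiplets.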

However, as our sister site The Next Platform found, the higher price associated with these chips isn't always matched by their performance. Despite this, AMD has previously demonstrated significant performance uplifts in certain workloads, such as Synopsys's VCS test.

More to come

AMD's June event is shaping up to be a big one, but the biz isn't quite ready to reveal everything. Notably, it has at least one more CPU launch slated for this year: Siena.

While we don't know much about Siena just yet, AMD has said the product targets the edge and telco markets, prioritizes performance-per-watt, and will feature up to 64 cores.

As we've previously reported, Intel holds an outsized influence over the edge market, where the vast majority of "edge" systems are running Xeon processors. However, we'll have to wait until the second half of 2023 to see how compelling AMD's contender in the space really is. ®
