US Army Turns To 'Scylla' AI To Protect Depot

The US Army is testing a new AI product that it says can identify threats from a mile away, and all without the need for any new hardware.

Named Scylla after the man-eating sea monster of Greek legend, the platform has been in testing for the past eight months at the Blue Grass Army Depot (BGAD) in eastern Kentucky, a munitions depot and former chemical weapons stockpiling site, where the Army has used it to enhance physical security.

"Scylla test and evaluation has demonstrated a probability of detection above 96% accuracy standards, significantly lowering … false alarm rates due to environmental phenomena," BGAD electronic security systems manager Chris Willoughby said of the results of experiments.

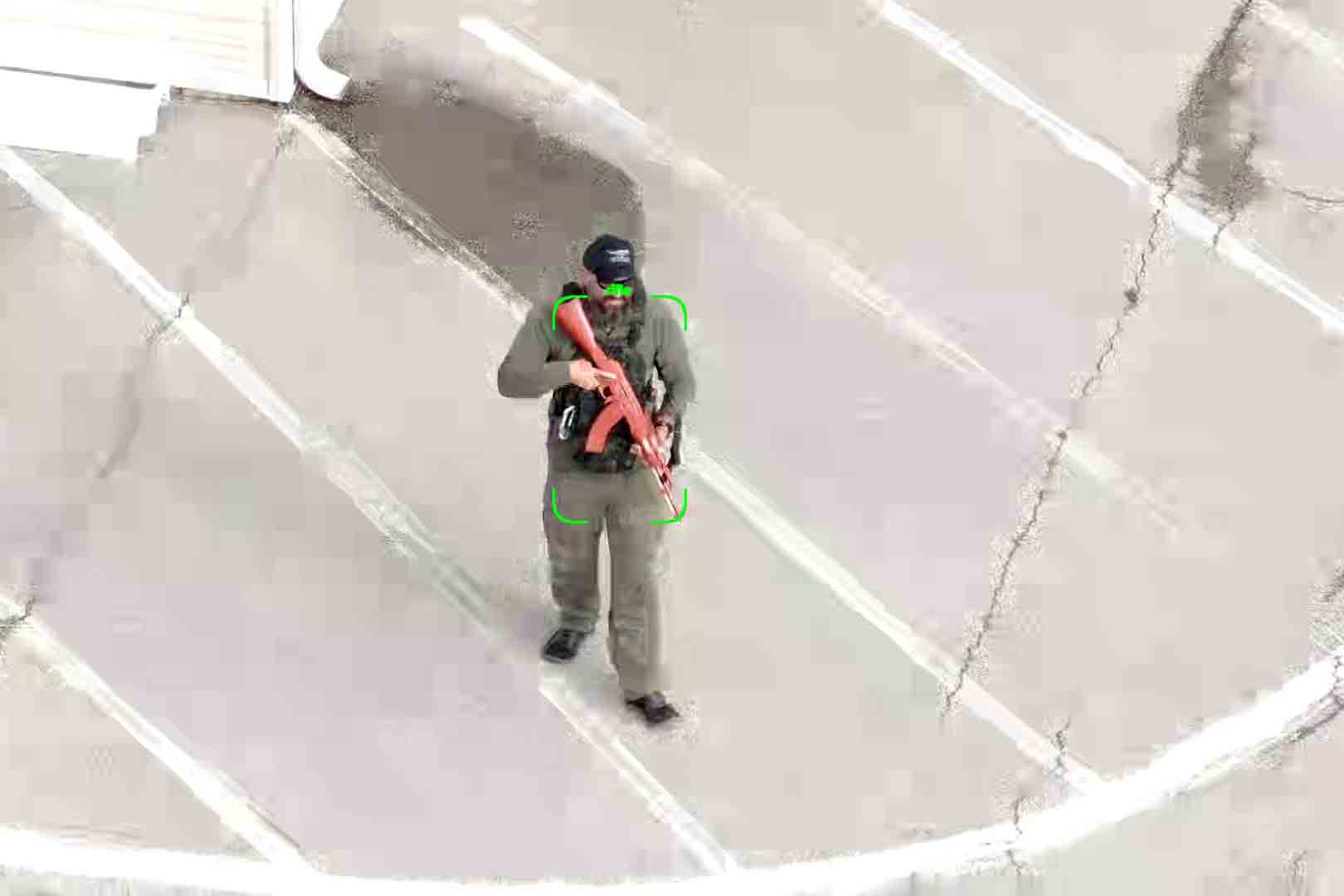

The Physical Security Enterprise and Analysis Group (PSEAG), which is leading the Scylla tests, has trained Scylla to "detect and classify" persons' features, their behavior, and whether they're armed in real time in order to eliminate wasted security responses to non-threatening situations.

"Scylla AI leverages any suitable video feed available to monitor, learn and alert in an instant, lessening the operational burden on security personnel," said Drew Walter, the US deputy assistant secretary of defense for nuclear matters. "Scylla's transformative potential lies in its support to PSEAG's core mission, which is to safeguard America's strategic nuclear capabilities."

Regardless of what it's protecting, the Army said Scylla uses drones and wide-area cameras to monitor facilities, detect potential threats, and tell personnel when they need to respond with far more accuracy than a puny human.

"If you're the security operator, do you think you could watch 15 cameras at one time … and pick out a gun at 1,000 feet? Scylla can," Willoughby said.

In one example of a simulated Scylla response, the system was able to use a camera a mile away to detect an "intruder" with a firearm climbing a water tower. A closer camera was able to follow up to get a better look, identifying the person as kneeling on the tower's catwalk.

In another example, Scylla reportedly took only seconds to alert security personnel to two armed individuals, who were identified via facial recognition as BGAD personnel. Scylla was also able to spot people breaching a fence and follow them with a drone before security was able to intercept, detect smoke coming from a vehicle from about 700 feet away, and identify a "mock fight" between two people "within seconds."

BGAD is the only facility currently testing Scylla, but the DoD said the Navy and Marine Corps are planning their own tests at Joint Base Charleston in South Carolina in the next few months. It's not clear if additional tests are planned, or if the Army has retired its Scylla setup following the conclusion of the tests.

So, what exactly is Scylla?

The greatest thing about Scylla, from the DoD's perspective, is its cost-efficiency: It's a commercial solution available for private and public customers that is allegedly able to do all its tasks without the need for additional hardware. But there's the option to add Scylla's proprietary hardware if desired.

It's not clear whether the US military has used a turnkey version of Scylla or customized it to its own ends, and the DoD didn't respond to questions for this story.

Either way, the eponymous company behind Scylla makes some big promises about its AI's capabilities, including that it's "free of ethnic bias" because it "deliberately built balanced datasets in order to eliminate bias in face recognition," whatever that means.

Scylla also claims it can't identify particular ethnic or racial groups, and that it "does not store any data that can be considered personal." Nor does it store footage or images, which at least makes sense, given it's an attachment to existing security platforms that are likely doing the recording themselves.

One claim in particular sticks out, however: Scylla claims it only sells its systems for ethical purposes and "will not sell software to any party that is involved in human rights breaches," but it has also touted its work in Oman, a Middle Eastern country that doesn't have the best record on human rights.

The US State Department has expressed concern over "significant human rights issues" in Oman, concerns that a number of human rights groups have echoed over the years. Scylla has been used to facilitate COVID screening at airports in the Sultanate, but its partnership with the Bin Omeir Group of Companies could see Scylla used for purposes the company purports not to want to engage in.

According to Scylla, Bin Omeir will use its AI "to support government initiatives with a focus on public safety and security." Given Oman's record of cracking down on freedom of expression and assembly, that's not a great look for a self-described ethical AI company.

Scylla didn't respond to questions for this story. ®
