The Troublesome Economics Of CPU-only AI

Analysis Today, most GenAI models are trained and run on GPUs or some other specialized accelerator, but that doesn't mean they have to be. In fact, several chipmakers have suggested that CPUs are more than adequate for many enterprise AI use cases.

Now Google has rekindled the subject of CPU-based inference and fine-tuning, detailing its experience with the advanced matrix extensions baked into Intel's 4th-Gen (Sapphire Rapids) Xeon cores.

In its testing, the search and advertising giant determined it was possible to achieve what we would consider acceptable second-token latencies when running large language models (LLMs) in the 7-13 billion parameter range at 16-bit precision.

In an email to The Register, Google said it was able to achieve a time per output token (TPOT) of 55 milliseconds for the 7B parameter model using a C3 VM with 176 vCPUs on board. As we understand it, hyperthreading was disabled for these tests, so only 88 of the VM's threads were actually active.

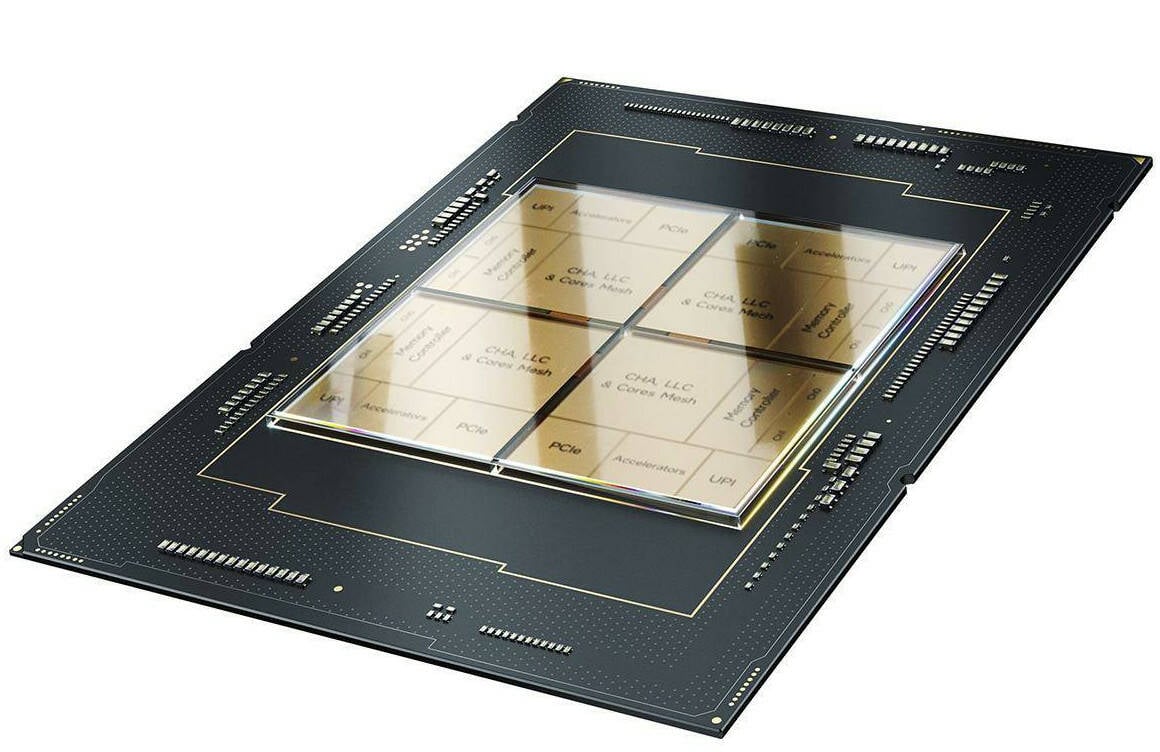

Using a pair of 4th-Gen Xeons, Google says it was able to achieve output latencies of 55 milliseconds per token in Llama 2 7B

To measure throughput, Google tested the Llama 2 models at a batch size of six — you can think of this as simulating six simultaneous user requests. For the smaller 7B version of the model, the VM managed roughly 220 to 230 tokens per second, depending on whether Intel's TDX security functionality was enabled. Performance for the larger 13B model was a little better than half that.

At a batch size of six in Llama 2 7B, Google managed to squeeze about 230 tokens per second out of the Xeons

Google also tested fine-tuning on Meta's 125 million parameter RoBERTa model using the Stanford Question Answering Dataset (SQuAD), where, regardless of whether TDX was enabled, the AMX-accelerated C3 instances managed to complete the job in under 25 minutes.

But while the results clearly demonstrate that it's possible to run and even fine-tune language models, that wasn't really the intent of Google's analysis. As you may have noticed from the charts, there are no comparisons to GPUs. That's because the post's goal was to demonstrate the speed-up afforded by AMX for GenAI workloads over older Ice Lake Xeons and the minimal impact associated with enabling TDX.

In this regard, AMX and Sapphire Rapids' faster DDR5 memory do afford quite the speed-up over Google's N2 instances powered by the older Xeon. Compared to a standard N2 instance, Google says switching to an AMX-enabled C3 VM netted a 4.14x-4.54x speed-up for fine-tuning. Meanwhile, for inference, it claims a 3x improvement in latency and a sevenfold boost in throughput.

Whether it makes any sense to use Google's AMX-enabled C3 or newer Emerald Rapids-based C4 instances instead of a GPU for GenAI workloads is another matter entirely.

There's a reason AI is associated with GPUs

We all know GPUs are expensive and in incredibly high demand amid the AI boom. We've seen H100s go for $40,000 a pop on the second-hand market. Renting them from cloud providers isn't cheap either and often requires signing a one- to three-year contract to get the best deal.

The good news is, depending on how large your models are, you may not need Nvidia or AMD's most powerful accelerators. Meta's Llama 2 7B and 13B can easily run on far less costly chips like AMD's MI210 or Nvidia's L4 or L40S. The latter two can be rented for around $600-$1,200 a month — including enough vCPUs and memory to make it work — without commitment, if you're willing to shop around.

This is a real problem for CPU-based AI. While CPUs may be perceived as costing less than GPUs, that 176 vCPU C3 instance used in Google's example isn't exactly cheap and will set you back $5,464 a month, according to Google's pricing calculator.

Breaking that down into tokens per dollar, the CPU is a hard sell for any kind of extended workload. Going off the roughly 230 tokens a second Google was able to squeeze from its C3 instance when running the 7B parameter Llama 2 model at a batch size of six, that works out to about $9 per million tokens generated. If you're willing to make a three-year commitment, that'll drop things down to $2,459 a month or about $4 per million tokens generated.
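The arithmetic behind those figures is easy to sketch. The snippet below is a rough illustration rather than Google's method: it assumes a 730-hour billing month and round-the-clock generation at the quoted throughput, and the function name is ours.

```python
HOURS_PER_MONTH = 730  # assumption: average billing month (8,760 hours / 12)


def cost_per_million_tokens(monthly_price_usd: float, tokens_per_second: float) -> float:
    """Dollars per million generated tokens, assuming 100 percent utilization."""
    tokens_per_month = tokens_per_second * 3600 * HOURS_PER_MONTH
    return monthly_price_usd / tokens_per_month * 1_000_000


# Prices and throughput as quoted in the article for the 176 vCPU C3 instance.
on_demand = cost_per_million_tokens(5464, 230)
committed = cost_per_million_tokens(2459, 230)

print(f"on-demand: ${on_demand:.2f} per million tokens")
print(f"three-year commitment: ${committed:.2f} per million tokens")
```

Any idle time pushes the real figure higher, since the instance is billed whether or not it is generating tokens.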

Drawing comparisons to GPUs is a bit tricky, as we don't know how many input and output tokens were used in Google's testing. For our tests, we set our input prompt to 1,024 tokens and our output to 128 to simulate a summarization task.

Running this on an Nvidia L40S, we found we could achieve a throughput of about 250 tokens a second at a batch size of six in Llama 2 7B at FP16. At $1.68 an hour or $1,226 a month to rent on Vultr (you may be able to rent it for even less elsewhere), that works out to about $1.87 per million tokens generated. However, we also found that the GPU could continue scaling performance up to a batch size of 16, where it managed 500 tokens/s of throughput, or $0.93 per million tokens generated.

The takeaway is that CPUs would need to handle much larger batch sizes than GPUs to match their performance.
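To put a number on that gap, here is a minimal sketch of the break-even calculation. It assumes the same 730-hour month for converting the C3's monthly price to an hourly one, and it treats cost per token as the only thing that matters; the function names are illustrative.

```python
HOURS_PER_MONTH = 730  # assumption: average billing month


def usd_per_million(hourly_usd: float, tokens_per_second: float) -> float:
    """Cost of one million generated tokens at a sustained throughput."""
    return hourly_usd / (tokens_per_second * 3600) * 1_000_000


def break_even_tokens_per_second(cpu_hourly_usd: float,
                                 gpu_hourly_usd: float,
                                 gpu_tokens_per_second: float) -> float:
    """Throughput the CPU instance needs to match the GPU's cost per token."""
    return gpu_tokens_per_second * cpu_hourly_usd / gpu_hourly_usd


gpu_hourly = 1.68                              # L40S rental price quoted above
cpu_on_demand_hourly = 5464 / HOURS_PER_MONTH  # C3, no commitment
cpu_committed_hourly = 2459 / HOURS_PER_MONTH  # C3, three-year commitment

for label, gpu_tps in (("batch size 6", 250), ("batch size 16", 500)):
    gpu_cost = usd_per_million(gpu_hourly, gpu_tps)
    on_demand_tps = break_even_tokens_per_second(cpu_on_demand_hourly, gpu_hourly, gpu_tps)
    committed_tps = break_even_tokens_per_second(cpu_committed_hourly, gpu_hourly, gpu_tps)
    print(f"L40S at {label}: ${gpu_cost:.2f} per million tokens")
    print(f"  C3 on-demand needs about {on_demand_tps:.0f} tokens/s to match")
    print(f"  C3 committed needs about {committed_tps:.0f} tokens/s to match")
```

Under these assumptions, the on-demand C3 instance would need well over 1,000 tokens a second just to match the L40S at a batch size of six, several times the roughly 230 tokens a second Google reported.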

We'll note that even if a CPU can scale to larger batch sizes, you're still looking at a best-case scenario for either part. That's because maintaining those batch sizes requires enough requests to keep the queue filled, which is unlikely for an enterprise where folks log off at the end of the day.
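A quick way to see the effect of idle time is to divide the full-load cost by utilization. The sketch below uses a hypothetical schedule of eight busy hours a day, five days a week, on hardware that is billed around the clock; that schedule is our assumption, not a measured figure.

```python
def effective_cost_per_million(full_load_usd_per_million: float, utilization: float) -> float:
    """Cost per million tokens when the hardware is only busy part of the time."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in the range (0, 1]")
    return full_load_usd_per_million / utilization


# Hypothetical office-hours workload: 40 busy hours out of a 168-hour week.
office_hours_utilization = (8 * 5) / (24 * 7)

# Best-case L40S figure from above ($0.93 per million tokens at batch size 16).
gpu_effective = effective_cost_per_million(0.93, office_hours_utilization)

print(f"utilization: {office_hours_utilization:.0%}")
print(f"effective GPU cost: ${gpu_effective:.2f} per million tokens")
```

The same multiplier applies to the CPU instance, so idle time widens the absolute gap between the two rather than closing it.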

Some of this can be mitigated by using serverless CPU and GPU offerings, which can spin workloads up and down automatically based on demand. Depending on the platform, this may introduce some latency in the case of a cold start, but does ensure customers are only paying for GPU or CPU time they're actually using.

Some of these offerings are even billed per token. Fireworks.AI, for example, charges 20 cents per million tokens for models between four and 16 billion parameters in size. That's considerably cheaper than renting a GPU by the hour. Alternatively, for the same price as renting or buying dedicated hardware, you could access a much larger, more capable model via API. However, depending on the nature of your workload, that may not be appropriate or even legal.

Don't count CPUs out just yet

While Intel's 4th-Gen Xeons might not be the fastest or cheapest way to deploy AI models, that doesn't mean using them is automatically a bad idea.

For one, the conversation around cost changes a bit when talking about on-prem deployments or existing cloud commitments, especially if you've already got a bunch of underutilized cores to play with. Faced with buying an $8,000 GPU and a system to put it in, it's not hard to imagine a CTO asking, "can't we do this with what we already have?" If an AI proof of concept fails, at least with the CPU you can put it to work running databases or VMs. The GPU, on the other hand, might become a very expensive paperweight, unless someone can find something else to accelerate with it.

Intel in particular has latched on to this concept ever since debuting AMX in its Sapphire Rapids Xeons in early 2023. That part was never designed with LLMs in mind, but its newly launched Granite Rapids Xeons certainly were.

Earlier this year, Intel showed off a pre-production system running Llama 2 70B on a single Xeon 6 processor while achieving 82 milliseconds of second-token latency, or about 12 tokens per second. This was possible thanks to a couple of factors, including higher core counts and therefore more AMX engines, 4-bit quantization to compress the model, and most importantly, the use of speedy MRDIMMs.

With 12 channels of 8,800MT/s memory, Granite Rapids boasts 844 GB/s of memory bandwidth, nearly the same as that L40S we discussed earlier. And, because inference is a largely memory-bandwidth-bound workload, this means the performance between the two should actually be fairly close.
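The bandwidth figure, and what it implies for token generation, can be checked with a back-of-the-envelope sketch. It assumes each memory channel moves 8 bytes per transfer and that generating one token requires reading every model weight once, ignoring the KV cache, compute limits, and other overheads, so the result is a rough ceiling rather than a prediction.

```python
def peak_bandwidth_gb_s(channels: int, mega_transfers_per_s: int,
                        bytes_per_transfer: int = 8) -> float:
    """Theoretical peak memory bandwidth in GB/s (assumes 64-bit channels)."""
    return channels * mega_transfers_per_s * bytes_per_transfer / 1000


def decode_ceiling_tokens_per_s(bandwidth_gb_s: float, params_billions: float,
                                bits_per_weight: int) -> float:
    """Upper bound on single-stream tokens/s if every weight is read once per token."""
    model_gb = params_billions * bits_per_weight / 8
    return bandwidth_gb_s / model_gb


granite_rapids = peak_bandwidth_gb_s(channels=12, mega_transfers_per_s=8800)
ceiling = decode_ceiling_tokens_per_s(granite_rapids, params_billions=70, bits_per_weight=4)

print(f"peak bandwidth: {granite_rapids:.1f} GB/s")
print(f"Llama 2 70B at 4-bit, bandwidth-bound ceiling: {ceiling:.1f} tokens/s")
```

By this estimate, the roughly 12 tokens per second Intel demonstrated sits at around half of the theoretical ceiling, which is plausible for a real system that never quite reaches peak bandwidth.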

While CPUs are beginning to catch up with lower-end GPUs in terms of memory bandwidth, they're still no match for high-end accelerators like Nvidia's H100 or AMD's MI300X, which boast multiple terabytes of bandwidth. Pricing also remains a challenge for CPUs. Intel's 6900P-series Granite Rapids parts will set you back between $11,400 and $17,800, and that's before you factor in the cost of memory.

For some, the flexibility of an AI-capable CPU will be worth the higher cost. For others, the efficiency GPUs offer for certain workloads will make them a better investment. ®
