IBM Takes A Crack At 'Utility Scale' Quantum Processing With Heron Processor

IBM has unveiled the Heron – a quantum processor it claims has achieved "utility scale" – and a so-called modular System Two architecture that will employ it in production.

Heron is the latest installment in IBM's series of quantum processing units (QPUs). The device features 133 qubits, up from the 127 in the previous model, dubbed Eagle. IBM also claims Heron delivers a fivefold improvement in error rates over its predecessor. That matters because qubits are extremely sensitive to noise from their environment, and errors that creep in during a computation can corrupt the result.

IBM's Heron quantum processor boasts 133 qubits and a fivefold reduction in error rates compared to its older Eagle chips

Heron will power IBM's Quantum System Two compute cluster, which combines quantum hardware, classical computing, and qubit control electronics in a modular system designed for growth. While details are still thin, the cluster stands 15 feet (about 4.6 meters) tall and, like many quantum systems, requires cryogenic cooling to operate.

The first system is located in Yorktown Heights, New York, and will initially feature three of IBM's Heron processors for a total of 399 local qubits.

However, IBM emphasizes that qubit count is only one factor. As we've discussed in the past, factors like coherence and the quality of the qubits often have a larger impact on the fundamental capabilities of a quantum machine. IBM is therefore prioritizing the size of the quantum circuits its hardware can run, rather than raw qubit count.

By the end of 2024, IBM claims each Heron processor will be capable of executing 5,000 operations in a single quantum circuit.
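Why does circuit size track error rates so closely? A back-of-the-envelope model helps. The sketch below assumes, purely for illustration, that every gate fails independently with the same probability p and that any single failure spoils the run, so a circuit of N gates succeeds with probability (1 − p)^N. The function names and the error rates in the demo are hypothetical, not IBM's published figures for Heron or Eagle.

```python
import math

# Simplified model (an assumption, not IBM's methodology): every gate fails
# independently with the same probability, and any single failure spoils the run.


def success_probability(error_rate: float, num_gates: int) -> float:
    """Probability that all num_gates gates execute without an error."""
    if not 0.0 <= error_rate < 1.0:
        raise ValueError("error_rate must be in [0, 1)")
    return (1.0 - error_rate) ** num_gates


def max_gates(error_rate: float, target: float) -> int:
    """Largest gate count whose error-free probability is still >= target."""
    if not 0.0 < error_rate < 1.0 or not 0.0 < target < 1.0:
        raise ValueError("error_rate and target must both be in (0, 1)")
    # Solve (1 - p)^N >= target for N, i.e. N <= ln(target) / ln(1 - p).
    return math.floor(math.log(target) / math.log(1.0 - error_rate))


if __name__ == "__main__":
    # Hypothetical per-gate error rates, fivefold apart; not measured chip data.
    older, newer = 1e-3, 2e-4
    print(max_gates(older, 0.5))  # 692
    print(max_gates(newer, 0.5))  # 3465
```

Under this toy model, a fivefold drop in the per-gate error rate buys a roughly fivefold larger circuit at the same success probability, because for small p the limit scales as 1/p. Real hardware has correlated errors, and error-mitigation techniques change the picture, so treat this as intuition only.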

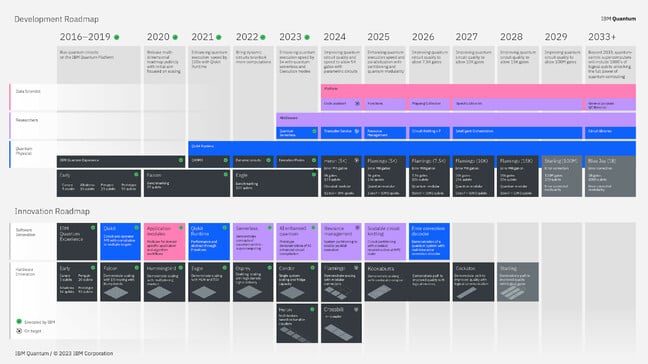

System Two is designed as a building block for future quantum supercomputers and is therefore intended to be forward compatible with future QPU designs. Greater modularity and an emphasis on circuit size are likely why IBM's roadmap doesn't call for a significant increase in qubit count between 2025 and 2028.

IBM expects to develop chips capable of handling 2,000 qubits and a billion gates as early as 2033

Instead, IBM has planned four generations of its 156-qubit Flamingo chips. The main difference between generations is the maximum number of gates, which IBM plans to push from 5,000 in 2024 to 15,000 in 2028. The chip will also eventually support clusters of up to seven QPUs, totaling 1,092 qubits.

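These chips are all classified as error mitigating: rather than correcting errors outright, they rely on techniques that reduce the impact of noise on results. IBM will presumably also seek to lower error rates with each generation to get more useful work out of its qubits.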

Looking beyond 2028, IBM expects to move into error-correcting territory in 2029 with its Starling QPU, which it predicts will squeeze 100 million gates from 200 logical qubits. Further out, in 2033 and beyond, IBM aims to launch Blue Jay, a 2,000-qubit QPU capable of running circuits with a billion gates.

Of course, a lot can happen in ten years. In the meantime, IBM plans to make its Heron QPUs available for researchers to tinker with via its public cloud portal.

To support research into quantum algorithms, IBM has also launched version 1.0 of its Qiskit quantum development kit to help developers write and optimize code for quantum systems. According to IBM, the software works by mapping classical problems to quantum circuits, optimizing those circuits, and then executing them using the Qiskit Runtime.
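IBM's description of Qiskit boils down to three stages: map, optimize, execute. The sketch below is a deliberately tiny, pure-Python illustration of those stages under our own assumptions. It does not use Qiskit or the Qiskit Runtime, and every name in it (`map_xor`, `optimize`, `execute`, the tuple gate format) is hypothetical. The "classical problem" is the XOR of two bit strings, the optimizer only cancels back-to-back self-inverse gates, and execution is a small statevector simulation rather than a run on real hardware.

```python
import math

# Toy circuit format (hypothetical, not Qiskit's): a gate is (name, qubits),
# e.g. ("x", (0,)) or ("cx", (0, 1)). Qubit i is bit i of a basis-state index.
SELF_INVERSE = {"h", "x", "cx"}


def map_xor(a, b):
    """Map: encode the classical problem 'a XOR b' as X gates on fresh qubits."""
    if len(a) != len(b):
        raise ValueError("bit strings must have the same length")
    gates = [("x", (i,)) for i, bit in enumerate(a) if bit == "1"]
    gates += [("x", (i,)) for i, bit in enumerate(b) if bit == "1"]
    return len(a), gates


def optimize(gates):
    """Optimize: cancel pairs of identical self-inverse gates.

    Two identical gates cancel when no gate between them touches any of their
    qubits, since gates acting on disjoint qubits commute.
    """
    out = []     # surviving gates; None marks a slot that was cancelled
    stacks = {}  # qubit -> indices into `out` of live gates touching it
    for gate in gates:
        name, qubits = gate
        tops = {stacks[q][-1] if stacks.get(q) else None for q in qubits}
        if len(tops) == 1:
            (prev,) = tops
            if prev is not None and name in SELF_INVERSE and out[prev] == gate:
                out[prev] = None
                for q in qubits:
                    stacks[q].pop()
                continue
        out.append(gate)
        for q in qubits:
            stacks.setdefault(q, []).append(len(out) - 1)
    return [g for g in out if g is not None]


def execute(num_qubits, gates):
    """Execute: simulate the statevector, return measurement probabilities."""
    state = [0j] * (1 << num_qubits)
    state[0] = 1 + 0j  # start in |00...0>
    for name, qubits in gates:
        if name == "h":
            (q,) = qubits
            for i in range(len(state)):
                if not i & (1 << q):
                    j = i | (1 << q)
                    a, b = state[i], state[j]
                    state[i] = (a + b) / math.sqrt(2)
                    state[j] = (a - b) / math.sqrt(2)
        elif name == "x":
            (q,) = qubits
            for i in range(len(state)):
                if not i & (1 << q):
                    j = i | (1 << q)
                    state[i], state[j] = state[j], state[i]
        elif name == "cx":
            c, t = qubits
            for i in range(len(state)):
                if i & (1 << c) and not i & (1 << t):
                    j = i | (1 << t)
                    state[i], state[j] = state[j], state[i]
        else:
            raise ValueError(f"unsupported gate {name!r}")
    # Keys are bit strings in which character i is the value of qubit i.
    probs = {}
    for i, amp in enumerate(state):
        p = abs(amp) ** 2
        if p > 1e-12:
            key = "".join("1" if i >> q & 1 else "0" for q in range(num_qubits))
            probs[key] = p
    return probs


if __name__ == "__main__":
    n, circuit = map_xor("1100", "1010")
    optimized = optimize(circuit)
    print(len(circuit), len(optimized))  # 4 2
    print(execute(n, optimized))  # {'0110': 1.0}
```

Running it maps `1100 XOR 1010` to four X gates; the optimizer cancels the two X gates on qubit 0, leaving two, and the simulator returns `{'0110': 1.0}`. A real transpiler does far more, such as routing circuits onto a chip's qubit connectivity and rewriting them into the hardware's native gate set.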

IBM is also exploring the use of AI to accelerate the development of quantum algorithms using its WatsonX platform, which we looked at earlier this year. Among WatsonX's offerings is a foundation model for an AI code assistant, similar to Microsoft's GitHub Copilot, that can be tuned for specific applications. ®
