House Of Lords Push Internet Legend On Greater Openness And Transparency From Google. Nope, Says Vint Cerf

And he tells peers: 'I'm not sure showing you a neural network would be helpful'

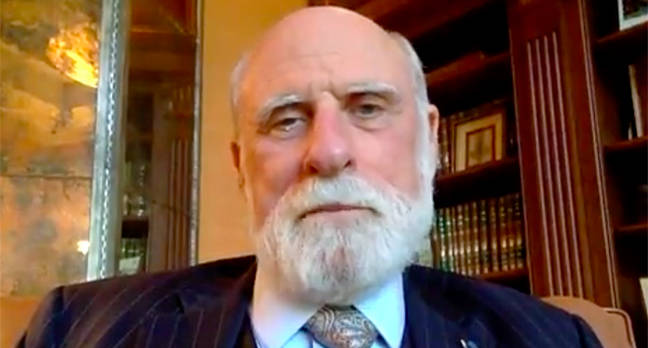

You've been Cerf'd ... 'Net legend Vint Cerf

The reverence in the House of Lords was palpable as Vint Cerf, a Google grandee and one of the, er, elders of the internet, was described during a committee meeting as technology's answer to Sir David Attenborough.

However, that did not stop the British Parliament's second chamber asking some pressing questions regarding the internet titan's transparency.

Having danced around the thorny problem of how Google, the de facto standard search engine, and YouTube, the dominant video-sharing platform, both owned by Alphabet, might influence public opinion in the internet age, Lord Mitchell finally got to the point: "I've been listening to this for nearly an hour. Now, one of the feelings I get from the responses is [you're saying] 'We're Google, you should trust us.'"

The House of Lords' Democracy and Digital Technology Committee met on Monday to probe the search and advertising giant's use of algorithms and the controversy over its misreporting of advertising spending in the UK General Election.

Cerf and his colleague Katie O'Donovan, Google's head of UK government affairs and public policy, spent the hearing defending against two main lines of attack.

Firstly, that Google and YouTube should make more information about the workings of their search and recommendation algorithms open to regulators' scrutiny. Secondly, where human moderators are involved, either in selecting training data or deciding on the fate of flagged content, regulators should be able to interview them and see their working.

To both, Cerf returned a polite but firm no. His response to the first was that publishing information about algorithms and neural networks would be too complicated, and regulators wouldn't know what to do with it. Or, to give Cerf's charming answer:

"Basically, it's a complex interconnection of weights that take input in and pop something out to tell us you know what quality a particular web page is. I'm not sure that showing you a neural network would be helpful.

"It's not like a recipe that you would normally think of when you write a computer program. It's not an if-then-else kind of structure, it's a much more complex mathematical structure. And so, that particular manifestation of the decision making, may not be particularly helpful to look at. So, the real question is what else... would be useful in order to establish the trust that we've been talking about," he said.

Takedowns - for why?

Google and Alphabet use moderators (10,000 of them, the company has said) who help make sure only "good quality" websites or videos become part of the training data, and who adjudicate on whether content should be taken down or removed from recommendations. The Lords therefore thought speaking to a few of them might be useful.

Committee chairman Lord Puttnam pressed on. "What would be your resistance to having your moderators talking about …the way they see the world and the kind of decisions they have to make? That is how you build trust, by having people on the ground, fairly consistently and openly talking about how difficult that job is. You don't build trust by ... creating a wall around the people and saying they mustn't talk to the public," he said.

But Google's representatives were largely unmoved. The moderators' guidelines were public, as were the search results; by putting the two together, they argued, authorities could assess whether the platforms were trustworthy. In any case, Cerf insisted, competition in the market would keep Alphabet's two largest products trustworthy.

As far as competition goes, the company commands a 90 per cent market share in search, according to Statcounter, and around 70 per cent in video sharing, according to Datanyze. With popularity like that, the organisation must be doing something right, Cerf implied.

The Chocolate Factory is currently battling the EU in court over a €2.4bn fine for allegedly promoting its shopping search engine over smaller rivals. And over in the US last month, the Federal Trade Commission ordered Amazon, Apple, Facebook, Google and Microsoft to provide detailed information about their acquisitions of small companies.

EU competition chief Margrethe Vestager said last year, in relation to EU action around regulating Google: "[W]hen platforms do act as regulators, they ought to set the rules in a way that keeps markets open for competition. But experience shows that instead, some platforms use that power to harm competition, by helping their own services." ®
