Facing Stiff Competition, Intel's Lisa Spelman Reflects On Xeon Hurdles, Opportunities

Intel's 5th-gen Xeon server processors have launched into the most competitive CPU market in years.

Changing market demands have created opportunities for chipmakers to develop workload-optimized components for edge, cloud, AI, and high-performance compute applications.

AMD's 4th-gen Epyc and Instinct accelerators address each of these markets. Meanwhile, Ampere Computing has found success with its Arm-compatible processors in the cloud, and Nvidia's AI and HPC-optimized Superchips are now in system manufacturers' hands.

We've also seen an increased reliance on custom silicon among the major cloud providers, like Amazon's Graviton or Microsoft's Cobalt — more on that later.

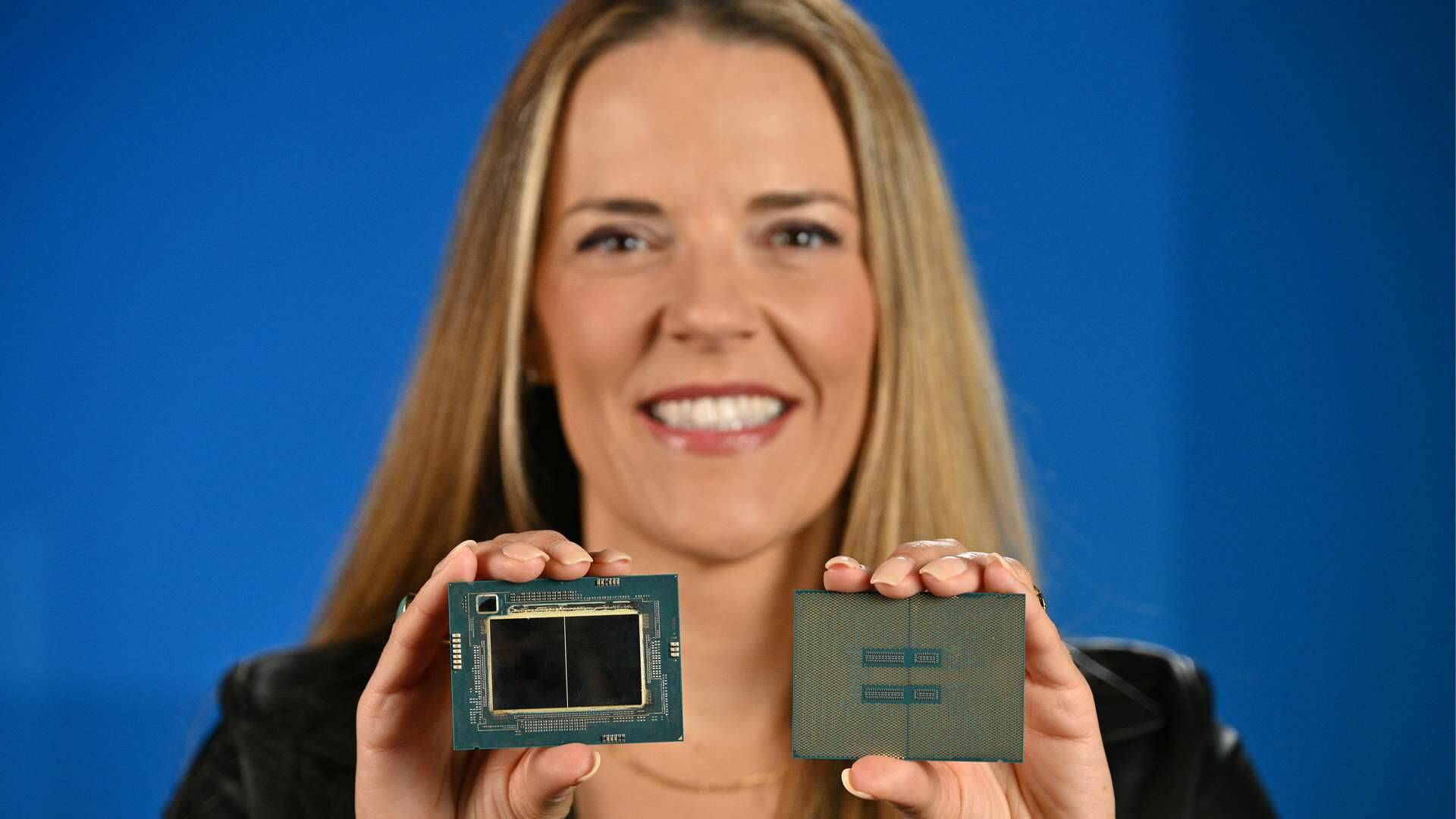

In an interview with The Register, Lisa Spelman, who leads Intel's Xeon division, discusses how changing market conditions and past roadblocks have influenced the trajectory of Intel's datacenter processors, as the chipmaker seeks to restore confidence in its roadmap.

Putting Sapphire Rapids in the rearview mirror

Without a doubt, one of the Xeon team's biggest headaches in recent years has been Sapphire Rapids.

Originally slated to launch in 2021, the chip was Intel's most ambitious ever. It saw Intel affirm AMD's early investments in chiplets and was expected to be the first datacenter chip to support DDR5, PCIe 5.0, and the emerging Compute Express Link (CXL) standard. And if that weren't enough, the chip also had to support large four- and eight-socket configurations, as well as a variant with on-package high-bandwidth memory (HBM) for its HPC customers.

As it turned out, Sapphire Rapids may have been a bit too ambitious, as Intel faced repeated delays, initially to the first quarter of 2022, and then the end of last year. Ongoing challenges eventually pushed its launch to January 2023 — a fact that has only served to erode faith in Intel's ability to adhere to its roadmaps.

It would have been easy to chalk the whole thing up to "oh, we fell a bit behind on 10 nanometer, and then it all, you know, cascaded from there," but "that's a surface-level type of answer," Spelman says of the Sapphire Rapids launch.

She explains that the missteps surrounding Sapphire Rapids' development informed a lot of structural changes within the company. "We've gone through every single step in the process of delivering a datacenter CPU and made changes," Spelman says. "We realized we were fundamentally underinvested in pre-silicon simulation and needed to have bigger clusters and more capacity."

Spelman also highlights changes to the way the Xeon team works with the foundry side of the house. In effect, her group is now treated more like a customer of Intel Foundry Services (IFS), a fact she insists forces engineers to think harder about how they approach CPU designs.

"It's been a tremendous learning journey. While you never would have chosen to go through it, I do believe it makes us a fundamentally better company," she says of Sapphire Rapids, adding that Emerald Rapids, as well as the upcoming Sierra Forest and Granite Rapids processor families, are already benefiting from these changes.

Deficits remain

With its Emerald Rapids Xeons, Intel was able to deliver an average 20 percent performance uplift, thanks in large part to a 3x larger L3 cache and a simplified chiplet architecture that uses two dies instead of the four used in the previous generation.

These changes also allowed Intel to boost core counts to 64, a marked improvement over the 56 cores available on the mainstream Sapphire Rapids platform, but still far short of the 96, 128, 144, and 192 cores available on competing platforms.

This isn't all that surprising. Intel has long prioritized per-core performance over core count and has thus lagged behind rival AMD in this respect for years. What's changing is the appetite for high-core count parts, particularly in the cloud.

Spelman claims that it's surprisingly rare to lose a deal over core count. "I'm not saying it never happens — look at high performance computing — but it often comes down to how that specific thing they're trying to do — the workload, the application — is going to run and perform, and how does it fit into the system of systems."

"There is a lot of Xeon capability that doesn't show up on a spec sheet," she adds.

Intel appears to have gotten the memo on many-cored CPUs, however. "I'm also driving our roadmap towards higher core count options, because I do want to be able to address those customers," Spelman says.

To her credit, Intel's first true many-cored CPU — not counting Xeon Phi, of course — won't just be competitive on core count, but, barring any surprises, looks like it'll surpass the competition by a wide margin.

Codenamed Sierra Forest, the cloud-optimized Xeon is scheduled to arrive in the first half of next year and is expected to deliver up to 288 efficiency cores (E-cores) on the top-specced part, 50 percent more than Ampere One's 192 cores.

Intel's Granite Rapids Xeons will be released later in 2024. Details are still thin on the chipmaker's next-gen performance-core (P-core) Xeon, but we're told it'll also boast higher core counts and improved performance, and offer a substantial uplift in memory and I/O throughput.

"We're adding P-core and E-core products because we see the way the market is going," Spelman says.

Still room in the cloud for Intel

With more cloud providers turning to custom silicon and Arm pushing the shake-'n-bake CPU designs it calls Compute Subsystems (CSS), it remains unclear whether Intel has missed the boat on cloud-optimized processors.

While AWS is without a doubt the poster child for custom silicon, with its Graviton CPUs, Trainium and Inferentia AI accelerators, and Nitro SmartNICs, it's far from the only cloud provider building its own chips.

After years of industry talk, Microsoft finally revealed its Cobalt 100 CPU, which is based in part on Arm's CSS building blocks and features 128 processor cores. If you need a recap, our sibling site The Next Platform took a deep dive into the chip, as well as the Maia 100 AI accelerators Microsoft plans to use for training and inferencing.

Microsoft's use of Arm CSS is notable. This is the closest we've seen Arm come to designing an entire CPU. Arm clearly aims to woo more hyperscalers and cloud providers to develop their own custom Arm CPUs using CSS as a jumping-off point.

AWS and Microsoft aren't the only ones opting for Arm cores either. Google is rumored to be working on a chip of its own, codenamed Maple, which will reportedly use designs developed by Marvell. And while Oracle hasn't built a custom CPU of its own, it is heavily invested in Ampere Computing's Arm-compatible processors.

While Spelman says AWS has done "strong work" with Graviton in the Arm ecosystem, she isn't too worried about Intel's prospects in the cloud.

The cloud providers are "focused on solving customer problems in the most efficient way possible," she explains. This means "you have the opportunity to win that every time, even if they have their own products."

Having said that, Spelman says it would have been nice to have started work on Sierra Forest a little earlier.

Intel's AI bet

Despite Intel's challenges getting its Xeon roadmap back on track, Spelman says some bets, particularly Intel's decision to build AI acceleration into its CPUs, are paying off.

"I look back seven, eight years ago to Ronak [Singhal], Sailesh [Kottapalli], and myself making that decision to start to take up die space to put AI acceleration in — I'd be lying if I told you that there weren't some people around here that thought we were crazy," she says.

Spelman is referring to the Advanced Matrix Extensions (AMX) that debuted with the launch of Sapphire Rapids earlier this year. AMX is designed to speed up common AI/ML inferencing workloads and reduce the need for standalone accelerators.

This capability is a major selling point behind Intel's Emerald Rapids Xeons introduced this week. The chips feature improvements to the AMX engine as well as faster memory and larger caches. Together, Intel says its CPUs can now run larger models at even lower latencies.

Careful not to undermine Intel's Habana team, which is responsible for developing the company's Gaudi AI accelerators, Spelman notes that this kind of dedicated hardware is still important to drive compute forward.

As we previously reported, Intel's Xeons can handle models up to about 20 billion parameters before latencies get untenable. Beyond this, accelerators start to make more sense.

Looking back, Spelman says she's happy with the progress the Xeon team has made. "My biggest objective now, from a leadership perspective, is just not letting our foot off the gas." ®
