AMD's Latest Epycs Are Bristling With Cores, Stacked To The Gills With Cache

AMD has unveiled a pair of Epyc processors aimed at cloud and technical applications and detailed plans for its Instinct MI300 family of accelerators during its Datacenter and AI event in San Francisco.

Up first is a new Epyc processor designed with cloud providers and hyperscalers in mind. Codenamed Bergamo, the chip packs up to 128 cores per socket and, AMD claims, has been optimized for a variety of containerized workloads.

AMD is no stranger to core-heavy chips. Since the launch of its first-gen Epycs back in 2017, the company has maintained a steady core-count lead over long-time rival Intel. However, AMD's 128-core Epyc doesn't appear to be aimed at Intel at all, at least not yet.

Instead, AMD has positioned Bergamo as a sort of bulwark against Ampere Computing's Arm-compatible datacenter chips, which have seen widespread adoption among cloud providers and hyperscalers since hitting the market in 2020. Today, every major cloud provider (with the exception of AWS, of course) has deployed Ampere's chips.

With Bergamo, AMD aims to claw back some of this lost ground. The chipmaker went so far as to boast that Bergamo is unmatched in both single- and dual-socket configurations, at least in the SPECrate2017 Integer benchmark.

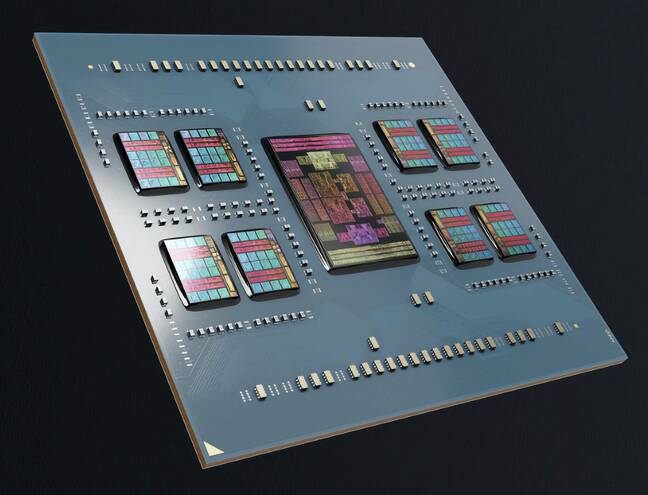

Peeking under the massive heat spreader reveals a chip that, on the surface, looks an awful lot like prior Epycs. In fact, you'd be forgiven for thinking it was the same as Rome or Milan, right down to the central I/O die and eight surrounding core-complex dies (CCDs).

AMD's Bergamo Epyc processors feature 128 cores and 256 threads spread across eight core-complex dies

Despite the similarities, Bergamo is a very different beast. It packs 16 cores per CCD, twice as many as Genoa, and uses an all-new core design called Zen 4c, where the "c" presumably stands for cloud.

“Zen 4c is actually optimized for the sweet spot of performance and power, and that actually is what gives us a much better density and energy efficiency,” AMD CEO Lisa Su said in her keynote speech. “The result is a design that has 35% smaller area and substantially better performance per watt.”

These cores also support simultaneous multithreading (SMT), something Ampere has shied away from, citing performance unpredictability and security implications as its rationale.

However, SMT could pay dividends in containerized scale-out workloads, where more threads should, in theory, allow AMD to achieve greater densities: three times higher in containerized workloads, according to AMD's internal benchmarks.

Unfortunately, AMD didn't disclose the chip's clock speeds or cache configuration in the materials it shared with us prior to the keynote. Because of this, it's hard to say what concessions the company was forced to make to fit the extra cores.

Beyond Bergamo's new core and chiplet architecture, the platform has much in common with Genoa. It supports 12 channels of DDR5 memory and PCIe 5.0, and can be deployed in single- or dual-socket configurations.

One notable advantage AMD has over Ampere, at least for now, is x86 compatibility. Customers used to running workloads on Intel and AMD systems won't need to refactor their applications to take advantage of the greater core density, something that isn't necessarily true when migrating to Arm.

Bergamo won't have this particular advantage to itself for much longer. Intel has promised a core-optimized Xeon with even more cores in early 2024. The chip, codenamed Sierra Forest, will feature up to 144 of the company's efficiency cores.

AMD gets technical with another X-chip

AMD also revealed its latest cache-stacked X-chip, code named Genoa-X, during the event.

The chip is aimed at high-performance computing applications (what AMD likes to call technical workloads), including computational fluid dynamics, electronic design automation, finite element analysis, seismic tomography, and other bandwidth-sensitive workloads that benefit from a boatload of shared cache.

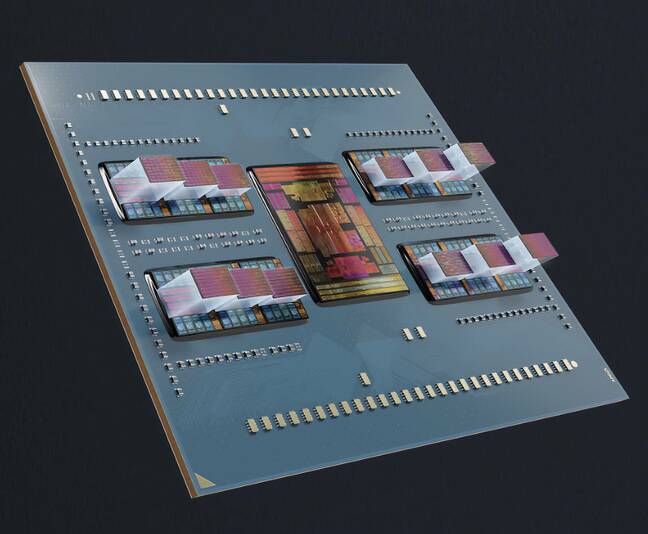

AMD's Genoa-X CPU stacks 64MB SRAM tiles atop each of the chip's compute dies for a total of 1.1GB of L3 cache

As the name suggests, the chip is based on the company's standard Genoa platform, but features AMD's 3D V-Cache tech, which boosts the available L3 cache by vertically stacking SRAM modules on each of the CCDs.

AMD says Genoa-X is available with up to 96 cores and a total of "1.1GB" of L3 cache. This suggests the chip follows a similar configuration to last year's Milan-X, with a 64MB SRAM module stacked atop each CCD.
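As a rough sanity check on that figure, here is the arithmetic under a few assumptions of ours, not AMD disclosures: Genoa-X keeps standard Genoa's layout of twelve eight-core CCDs, each with 32MB of native L3, plus one 64MB SRAM tile per CCD. Cache capacities are conventionally counted in binary units, so 1GB here means 1,024MB.

$$
\begin{aligned}
\text{cores} &= 12 \times 8 = 96 \\
\text{L3} &= 12 \times (32\,\text{MB} + 64\,\text{MB}) = 1{,}152\,\text{MB} \\
          &= 1{,}152 / 1{,}024\,\text{GB} \approx 1.1\,\text{GB}
\end{aligned}
$$

Under those assumptions, both totals line up with AMD's stated 96 cores and "1.1GB" of L3.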

According to Dan McNamara, SVP of AMD’s server business, the cache boost translates to anywhere from 2.2x to 2.9x higher performance compared to Intel’s top-specced 60-core Sapphire Rapids Xeons in a variety of computational fluid dynamics and finite element analysis workloads.

Curiously, AMD avoided any comparisons to Intel’s own HPC-focused Xeon Max SKUs, which arguably would have been the more appropriate comparison. Intel’s Xeon Max parts can be equipped with up to 64GB of on-package HBM.

At the time of publication, AMD hadn't shared pricing for the parts, but we expect them to command a premium over the bog-standard Epycs, something that could end up hurting their appeal. In past conversations with The Next Platform, several HPC vendors have cited Milan-X's high prices as being a pain point for customers.

AMD reveals the MI250X's successor

Alongside its new CPUs, AMD once again took the opportunity to tease its next generation of Instinct MI300 accelerators.

This time the chipmaker confirmed plans to offer at least one version of the chip in a GPU-only configuration called the MI300X. Based on previously published performance claims, we suspect there may be at least two X SKUs.

Up to this point, most of AMD's public disclosures have focused on the APU variant of the chip, called the MI300A. The APU is slated to power Lawrence Livermore National Laboratory's upcoming El Capitan supercomputer and stitches together multiple CPU, GPU, and high-bandwidth memory dies on a single package.

Back in January, AMD revealed the 146-billion-transistor APU would deliver roughly 8x faster AI performance while also achieving 5x better performance per watt than the outgoing MI250X.

The MI300X, revealed this week, is a simpler design, ditching the APU's 24 Zen 4 cores in favor of additional CDNA 3 GPU dies and an even larger 192GB supply of HBM3. AMD notes this is large enough to fit the Falcon-40B large language model on a single GPU.
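A back-of-the-envelope calculation shows why that is plausible. It assumes Falcon-40B's roughly 40 billion parameters are stored at 16-bit (2-byte) precision, and it ignores the additional memory consumed during inference by activations and the key-value cache:

$$
40 \times 10^{9}\ \text{parameters} \times 2\ \text{bytes/parameter} = 80 \times 10^{9}\ \text{bytes} \approx 80\,\text{GB} < 192\,\text{GB}
$$

Under those assumptions, the weights leave more than 100GB of headroom on a single MI300X.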

Alongside the new GPU, the chipmaker announced the AMD Infinity Architecture, which connects eight MI300X accelerators in a single standard system designed with AI inferencing and training in mind. The concept bears several similarities to Nvidia's HGX mainboard, which supports up to eight of its own GPUs.

However, it appears that neither MI300 variant is ready for its public debut just yet. AMD says its MI300A APU is already sampling to customers, while the GPU-only version will start making its way to vendors sometime in calendar Q3.

New DPUs on the way

Finally, AMD offered a glimpse at its networking roadmap, which includes a new data processing unit (DPU), code named Giglio, due out later this year.

In case you've forgotten, AMD bought its way into the emerging DPU market with its $1.9 billion acquisition of Pensando last spring.

While details are thin, AMD claims its latest DPU, which is designed to offload networking, security, and virtualization tasks from the CPU, will offer improved performance and power efficiency compared to its current-generation P4 DPUs.

The company's DPUs have already been deployed by several major cloud providers, including Microsoft, IBM Cloud, and Oracle Cloud, and are supported by software suites such as VMware's hypervisor.

AMD hopes to expand the list of compatible software ahead of Giglio's launch later this year with a "software-on-silicon developer kit" designed to make it easier to deploy workloads on the company's DPUs. ®
