Why Nvidia And AMD Are Roasting Each Other Over AI Performance Claims

Analysis Any time we write about vendor-supplied benchmarks and performance claims, they're accompanied by a warning to take them with a grain of salt.

This is because vendors aren't in the habit of pointing out where their chips or products fall short of the competition, so the results are usually cherry-picked, tuned, and optimized to present them in the best light possible.

These comparisons usually don't elicit a response from rival chipmakers, but in the case of AMD's newly launched MI300X GPUs, Nvidia felt it necessary to speak out. In a blog post last week, the accelerator specialist rejected the accuracy and relevance of AMD's benchmarks.

By Friday, AMD had responded, sharing a further set of optimized figures, claiming a performance advantage even when taking into account Nvidia's optimized software libraries and support for lower precision.

Why Nvidia is so worked up

During AMD's launch event earlier this week, the chipmaker claimed its MI300X – that's the GPU variant of the chip – was able to achieve 40 percent lower latency than the H100 when inferencing Meta's Llama 2 70 billion parameter model.

The problem, according to Nvidia, is AMD's benchmarks don't take advantage of its optimized software or the H100's support for FP8 data types, and were instead conducted using vLLM at FP16. Generally speaking, lower precision data types trade accuracy for performance. In other words, Nvidia says AMD was holding the H100 back.

Nvidia claims its H100 outperforms AMD's MI300X when using the chipmaker's preferred software stack and FP8 precision

Nvidia claims that when benchmarked using its closed-source TensorRT-LLM framework and FP8, the H100 is actually twice as fast as the MI300X.

Nvidia also argues that AMD is presenting the best-case scenario for performance here by using a batch size of one – in other words, by handling one inference request at a time. This, Nvidia contends, is unrealistic as most cloud providers will trade latency for larger batch sizes.

Using Nvidia's optimized software stack, it says a DGX H100 node with eight accelerators is able to handle a batch size of 14 in the time it takes a similarly equipped node with eight of AMD's MI300X to handle one.
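Nvidia's argument rests on the usual relationship between batch size, latency, and throughput. A minimal Python sketch of that relationship, assuming every request in a batch completes at the same time; the function names and the one-second latency are hypothetical placeholders, not figures from either vendor:

```python
def throughput(batch_size: int, latency_s: float) -> float:
    """Requests completed per second when a whole batch finishes in latency_s seconds."""
    if batch_size < 1 or latency_s <= 0:
        raise ValueError("batch_size must be >= 1 and latency_s must be > 0")
    return batch_size / latency_s


def relative_throughput(batch_a: int, latency_a_s: float,
                        batch_b: int, latency_b_s: float) -> float:
    """How many times more requests per second system A serves than system B."""
    return throughput(batch_a, latency_a_s) / throughput(batch_b, latency_b_s)


# Nvidia's claim: one node handles batch size 14 in the time the other handles batch size 1.
# The 1.0 second latency is an assumed placeholder; only the ratio matters.
print(relative_throughput(14, 1.0, 1, 1.0))
```

When both systems take the same time per batch, the throughput ratio is simply the ratio of the batch sizes, which is why a low batch-size-one latency says little about how many requests a node can serve.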

SemiAnalysis chief analyst Dylan Patel agrees that single batch latency is a "pointless" metric. However, he does see Nvidia's blog post as an admission that AMD's latest accelerators have it spooked.

"Nvidia is clearly threatened by the performance of AMD's MI300X and volume orders from their two largest customers, Microsoft and Meta," he told The Register. "In gaming, Nvidia hasn't compared themselves so openly to AMD in multiple generations because AMD is not competitive. They've never cared to in datacenter either when AMD racked up datacenter wins. But now, they have to fight back because AMD is winning deals in multiple clouds."

AMD's rebuttal

Within a day of the Nvidia post going live, AMD had responded with a blog post of its own arguing that Nvidia's benchmarks aren't an apples-to-apples comparison.

AMD points out that, in addition to using its own optimized software stack, Nvidia is comparing the H100's FP8 performance against the MI300X at FP16. Every time you halve the precision, you double the chip's peak floating point throughput, so this discrepancy can't be overstated.

MI300X does support FP8. vLLM, which was used in AMD's testing, however, doesn't support the data type yet, so for inferencing on the MI300X we're stuck with FP16 benchmarks for now.

Finally, AMD called out Nvidia for inverting AMD's performance data from relative latency to absolute throughput.

While the AMD blog post didn't address Nvidia's criticism regarding single batch latencies, a spokesperson told The Register this is standard practice.

"Batch size 1 is the standard when measuring the lowest latency performance and max batch size is used to highlight the highest throughput performance. When measuring throughput, we typically set batch to max size that fits within a customer's SLA."

The spokesperson added that AMD's launch day performance claims did include throughput performance at "max batch size" for the 176 billion parameter Bloom model. In that case, AMD claimed a 1.6x advantage over Nvidia's H100, but as we'll get to later a lot of that is down to MI300X's more robust memory configuration.

Even when using Nvidia's preferred software stack, AMD says its MI300X is 30 percent more performant in FP16 AI inference

In addition to picking apart Nvidia's blog post and performance claims, AMD presented updated performance figures that take advantage of new optimizations. "We have made a lot of progress since we recorded data in November that we used at our launch event," the post read.

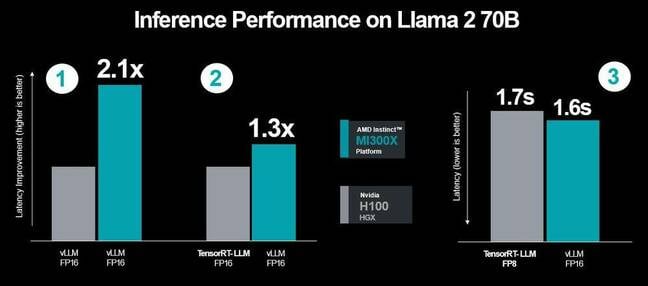

AMD claimed these improvements boosted the MI300X's latency lead in Llama 2 70B from 1.4x to 2.1x when using the common vLLM framework at FP16 precision.

Even using Nvidia's TensorRT-LLM framework on the H100-equipped node, AMD claimed the MI300X platform still offers a 30 percent improvement in latency at FP16.

And even when pitting MI300X at FP16 against the H100 at FP8 and Nvidia's preferred software stack, AMD claimed to be able to achieve comparable performance.

Nvidia didn't directly address our questions regarding AMD's most recent blog post, directing us instead to its GitHub page for details on key benchmarks.

Software's growing role in AI

Nvidia and AMD's benchmarking spat highlights a couple of important factors – including the role software libraries and frameworks play in boosting AI performance.

One of Nvidia's chief arguments is that by using vLLM rather than TensorRT-LLM, the H100 was put at a disadvantage.

Announced back in September and released in late October, TensorRT-LLM is a combination of software functions including a deep learning compiler, optimized kernels, pre- and post-processing steps, as well as multi-GPU and multi-node communication primitives.

Using the optimized software, Nvidia claimed it was able to effectively double the H100's inferencing performance when running the six billion parameter GPT-J model. Meanwhile, in Llama 2 70B, Nvidia claimed a 77 percent performance uplift.

AMD made similar claims with the launch of its ROCm 6 framework earlier this month. The chipmaker claimed its latest AI framework was able to achieve between 1.3x and 2.6x improvement in LLM performance thanks to optimizations to vLLM, HIP Graph, and Flash Attention.

Compared to the MI250X running on ROCm 5, AMD argued the MI300X running on the new software framework was 8x faster.

But while software as an enabler of performance shouldn't be overlooked, hardware remains a major factor – as evidenced by a push toward faster, larger capacity memory configurations on current and upcoming accelerators.

AMD's memory advantage

At both FP8 and FP16, AMD's MI300X holds about a 30 percent advantage over the H100 in peak floating point performance. However, AI inferencing workloads are complex and performance depends on a variety of factors including FLOPS, precision, memory capacity, memory bandwidth, interconnect bandwidth, and model size – to name just a few.

AMD's biggest advantage isn't floating point performance – it's memory. The MI300X's high bandwidth memory (HBM) is 55 percent faster, offering 5.2TB/sec, and it has more than twice the capacity at 192GB, compared to the H100's 80GB.

This is important for AI inferencing, because the size of the model is directly proportional to the amount of memory required to run it. At FP16 you're looking at 16 bits or 2 bytes per parameter. So for Llama 2 70B you'd need about 140GB plus room for the KV cache, which helps to accelerate inference workloads, but requires additional memory.
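The bytes-per-parameter arithmetic can be written out as a short sketch. It covers model weights only, in decimal gigabytes, and ignores the KV cache and any runtime overhead; the helper name is made up for illustration:

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp16": 2, "fp8": 1}


def weight_memory_gb(params_billions: float, precision: str) -> float:
    """Approximate memory for the weights alone, in decimal gigabytes.

    One billion parameters at one byte each is one gigabyte, so the
    result is just billions of parameters times bytes per parameter.
    """
    return params_billions * BYTES_PER_PARAM[precision]


for precision in ("fp16", "fp8"):
    size_gb = weight_memory_gb(70, precision)
    print(f"Llama 2 70B at {precision}: about {size_gb:.0f} GB of weights")
```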

So at FP16, AMD's MI300X automatically has an advantage, since the entire model can fit within a single accelerator's memory with plenty of room left over for KV Cache. The H100 on the other hand is at a disadvantage since the model needs to be distributed across multiple accelerators.

At FP8, on the other hand, Llama 2 70B only requires about 70GB of the H100's 80GB memory. While it is possible to fit a 70 billion parameter model into a single H100's memory, Patel notes that it leaves very little room for KV Cache. This, he explains, severely limits the batch size – the number of requests that can be served.
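To make the single-accelerator comparison concrete, here is a rough sketch using the capacities quoted above. It counts weights only, so the "headroom" figure is an upper bound on what is left for the KV cache; the function names are hypothetical:

```python
import math

HBM_GB = {"H100": 80, "MI300X": 192}      # per-accelerator capacity cited in the article
BYTES_PER_PARAM = {"fp16": 2, "fp8": 1}


def kv_headroom_gb(accelerator: str, params_billions: float, precision: str) -> float:
    """Memory left on one accelerator after loading the weights.

    A negative value means the weights alone do not fit on a single device.
    """
    return HBM_GB[accelerator] - params_billions * BYTES_PER_PARAM[precision]


def min_accelerators(accelerator: str, params_billions: float, precision: str) -> int:
    """Fewest accelerators whose combined memory can hold the weights alone."""
    weights_gb = params_billions * BYTES_PER_PARAM[precision]
    return math.ceil(weights_gb / HBM_GB[accelerator])


for accelerator, precision in (("MI300X", "fp16"), ("H100", "fp16"), ("H100", "fp8")):
    headroom = kv_headroom_gb(accelerator, 70, precision)
    devices = min_accelerators(accelerator, 70, precision)
    print(f"{accelerator} {precision}: headroom {headroom} GB, needs at least {devices} device(s)")
```

For Llama 2 70B this gives 52GB of headroom on one MI300X at FP16, a 60GB shortfall on one H100 at FP16, and 10GB of headroom on one H100 at FP8.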

And from Nvidia's blog post, we know the chipmaker doesn't consider a batch size of one to be realistic.

At a system level, this is particularly apparent – especially looking at larger models like the 176 billion parameter Bloom model highlighted in AMD's performance claims.

AMD's MI300X platform can support systems with up to eight accelerators for a total of 1.5TB of HBM. Nvidia's HGX platform meanwhile tops out at 640GB. As SemiAnalysis noted in its MI300X launch coverage, at FP16, Bloom needs 352GB of memory – leaving AMD more memory for larger batch sizes.
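The node-level gap follows from the same arithmetic. A back-of-the-envelope sketch, assuming eight accelerators per node and counting only the model weights (no activations or framework overhead); the names are illustrative:

```python
ACCELERATORS_PER_NODE = 8
HBM_GB = {"MI300X": 192, "H100": 80}  # per-accelerator capacity cited in the article
BLOOM_FP16_GB = 176 * 2               # 176 billion parameters at 2 bytes each = 352 GB


def node_capacity_gb(accelerator: str) -> int:
    """Total HBM across one eight-accelerator node."""
    return ACCELERATORS_PER_NODE * HBM_GB[accelerator]


def node_headroom_gb(accelerator: str, model_gb: int) -> int:
    """HBM left across the node once the model weights are loaded."""
    return node_capacity_gb(accelerator) - model_gb


for accelerator in ("MI300X", "H100"):
    total = node_capacity_gb(accelerator)
    spare = node_headroom_gb(accelerator, BLOOM_FP16_GB)
    print(f"{accelerator} node: {total} GB total, {spare} GB left after Bloom at FP16")
```

Under these assumptions the MI300X node has 1,184GB left over against 288GB for the H100 node, which is the room available for KV cache and therefore for larger batches.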

H200 and Gaudi3 on the horizon

If you need more evidence that memory is the limiting factor here, just look at Nvidia's next-gen GPU, the H200. The part is due out in the first quarter of the new year and will boast 141GB of HBM3e good for 4.8TB/sec of bandwidth.

In terms of FLOPS, however, the chip doesn't offer any tangible performance uplift. Digging into the spec sheet shows identical performance to the H100 it replaces.

Despite this, Nvidia claims the H200 will deliver roughly twice the inferencing performance in Llama 2 70B compared to the H100.

While the MI300X still has more memory and bandwidth than the H200, the margins are far narrower.

"Customers choose the Nvidia full-stack AI platform for its unmatched performance and versatility. We deliver continual performance increases through innovation across every layer of our technology stack, which includes chips, systems and software," a spokesperson told The Register.

To this end, the H200 isn't Nvidia's only GPU due early next year. The reigning GPU champ recently made the switch to a yearly release cadence for accelerators and networking equipment beginning with the B100 in 2024.

And while we don't know much about the chip yet, it's safe to assume it'll be even faster and better tuned for AI workloads than its predecessor.

It's a similar story for Intel's upcoming Gaudi3 accelerators. Intel hasn't shared much about Habana Labs' third-gen chips, but we do know they'll boast memory bandwidth 1.5x that of their predecessor. The chip will also double the networking bandwidth and is said to offer 4x the brain float 16 (BF16) performance. The latter is a particularly strange claim, since Intel won't tell us what Gaudi2's BF16 performance actually was – preferring to talk about real-world performance.

Intel declined our offer to weigh in on its competitors' benchmarking shenanigans. In any case, AMD's MI300X won't just have to compete with Nvidia in the new year. ®
