Upstart Retrofits An Nvidia GH200 Server Into A €47,500 Workstation

Nvidia's long-teased GH200 CPU-GPU Superchips are finally going on sale, and the 1,000-Watt chip – built to run in servers and handle hefty AI training and inference tasks – is even available in a workstation from German startup gptshop.ai.

Bernhard Guentner, the brains behind the upstart, built the GH200 into a workstation after becoming dissatisfied with the performance of Nvidia's consumer-grade RTX 4090s for running large models, and because he prefers to keep his work out of the cloud.

He therefore modded a QCT server to fit into a consumer PC case. And if you happen to have between €47,500 ($50,900) and €59,500 ($63,700) handy, he says he'll build one for you too.

"I started experimenting with Nvidia's RTX 4090s. I bought a bunch of them and put them into a mining rack and just ran some tests. I quickly figured out that is not the way to go," Guentner explained in an interview with The Register.

While many large language models are available from repositories like Hugging Face, Guentner quickly ran into a problem familiar to anyone who has tinkered with AI inference: the bigger the model, the more video memory you need.

This led Guentner to the GH200. First teased at GTC in 2022, the chip combines Nvidia's 72-core Arm Neoverse V2-based CPU and 480GB of LPDDR5x memory with an H100 GPU equipped with 96GB of HBM3 or 144GB of HBM3e memory.

That's enough memory to run a model like Meta's Llama 2 70B at FP8 – or potentially FP16 in the case of the 144GB config – entirely within the HBM. In theory, the high-speed interconnect between the CPU and GPU should allow you to fit even larger models by spilling into the LPDDR5x memory, if you're willing to sacrifice performance.
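As a rough back-of-the-envelope check on those figures, the sketch below estimates the weight-only footprint of a 70-billion-parameter model at each precision and compares it with the two HBM capacities. It assumes one byte per parameter at FP8 and two at FP16, counts in decimal gigabytes, and ignores the KV cache, activations, and runtime overhead, so real headroom will be smaller. The function names are illustrative and not taken from any library.

```python
# Weight-only estimate. Assumptions: 1 byte/param at FP8, 2 bytes/param at FP16,
# decimal GB (1 GB = 1e9 bytes), no KV cache, activations, or framework overhead.
BYTES_PER_PARAM = {"FP8": 1, "FP16": 2}
HBM_CONFIGS_GB = {"HBM3": 96, "HBM3e": 144}  # capacities quoted in the article


def weight_footprint_gb(params_billions: float, precision: str) -> float:
    """Approximate size of the model weights in decimal GB."""
    # (params_billions * 1e9 params) * bytes_per_param / 1e9 bytes per GB
    return params_billions * BYTES_PER_PARAM[precision]


def fits_in_hbm(params_billions: float, precision: str, hbm_gb: float) -> bool:
    """True if the weights alone fit within the given HBM capacity."""
    return weight_footprint_gb(params_billions, precision) <= hbm_gb


if __name__ == "__main__":
    for precision in BYTES_PER_PARAM:
        need = weight_footprint_gb(70, precision)
        for name, capacity in HBM_CONFIGS_GB.items():
            verdict = "fits" if fits_in_hbm(70, precision, capacity) else "does not fit"
            print(f"70B @ {precision}: ~{need:.0f} GB of weights {verdict} in {capacity} GB {name}")
```

By this estimate the FP16 weights leave only a few gigabytes spare in the 144GB configuration, which is consistent with the "potentially" above.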

In terms of performance and memory, the GH200 was exactly what Guentner was looking for. However, fitting it into a more traditional workstation platform with fans that don't spin at a deafening 20,000-plus RPM was another matter entirely.

"I looked at the power figures, and it's not more than 1,000 watts, so it was clear to me that it was totally possible to put it in a desktop," he noted.

While system builders have been packing server components into custom workstations for years, getting the GH200 and its motherboard to fit into the case wasn't simple. Most server platforms have a high degree of modularity – for example, you can usually take a CPU or power supply from one system and swap it into another compatible one – but Guentner was stuck using components sourced from the QCT system.

"I asked them if you can buy the super chip and everything separately, but they say not even that is possible," he said of OEM systems – adding that in the longer term he hopes to transition to more traditional ATX-style components where possible.

Getting everything into the case – an off-the-shelf full tower – took about two weeks and some modding.

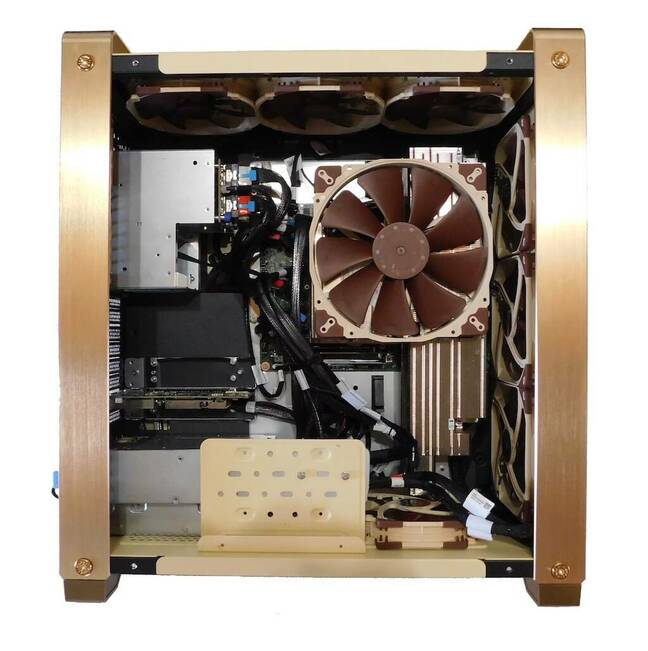

As you might expect, cooling a 1kW chip like Nvidia's GH200 requires a lot of air flow

Guentner rated cooling the toughest part of his build.

The GH200 isn't a cool chip. As we mentioned earlier, it's rated for a kilowatt of power and thermal dissipation when fully loaded up. If that weren't enough, the GH200 pulled from the QCT system features a heat sink designed for a low profile server – and you can't exactly go out and buy an aftermarket cooler for the thing.

But with enough Noctua fans lining the walls of the case, and even one blowing directly down onto the motherboard, Guentner was able to bring temps down to acceptable levels without the risk of hearing loss.

To validate the effectiveness of the cooling setup, Guentner has also provided FOSS-friendly site Phoronix remote access to the system for benchmarking. So far the team has only tested the GH200's Grace CPU, which has proven to be quite competitive against a variety of Epyc and Xeon Scalable-based systems.

For the moment, the system is just a prototype. But Guentner figures there's probably a market for high-performance workstations powered by Nvidia's Superchips, and plans to build and sell systems based on the design.

"There is demand – even higher than I expected. When I started, I expected to maybe sell one a month or so because it's highly specialized and the price tag is pretty significant," he told us.

These systems really won't come cheap. However, Guentner insists that there's not much he can do considering the prices Nvidia and its OEM/ODM partners are charging for the GH200.

The systems start at €47,500 and can be configured with a variety of add-on cards and storage – including Nvidia's BlueField-3 data processing units and an RTX 4060 for video output. Guentner is also working on a liquid-cooled variant of the system.

Guentner may not have the market for GH200 workstations to himself for long, as Nvidia has previously built workstations based on its top-specced accelerators. ®
