Oxide Reimagines Private Cloud As... A 3,000-pound Blade Server?

Analysis Over the past few years, we've seen a number of OEMs, including Dell and HPE, try to make on-prem datacenters look and feel more like the public cloud.

However, at the end of the day, the actual hardware behind these offerings is usually just a bunch of regular servers and switches sold on a consumption-based model, not the kinds of OCP-style systems you'll find in a modern cloud or hyperscale datacenter.

Yet one of the stranger approaches to the concept we've seen in recent memory is from Oxide Computer, a company founded by a bunch of former Joyent and Sun Microsystems folks, who are trying to reframe the rack, not the server, as the unit of compute for the datacenter.

Starting from scratch

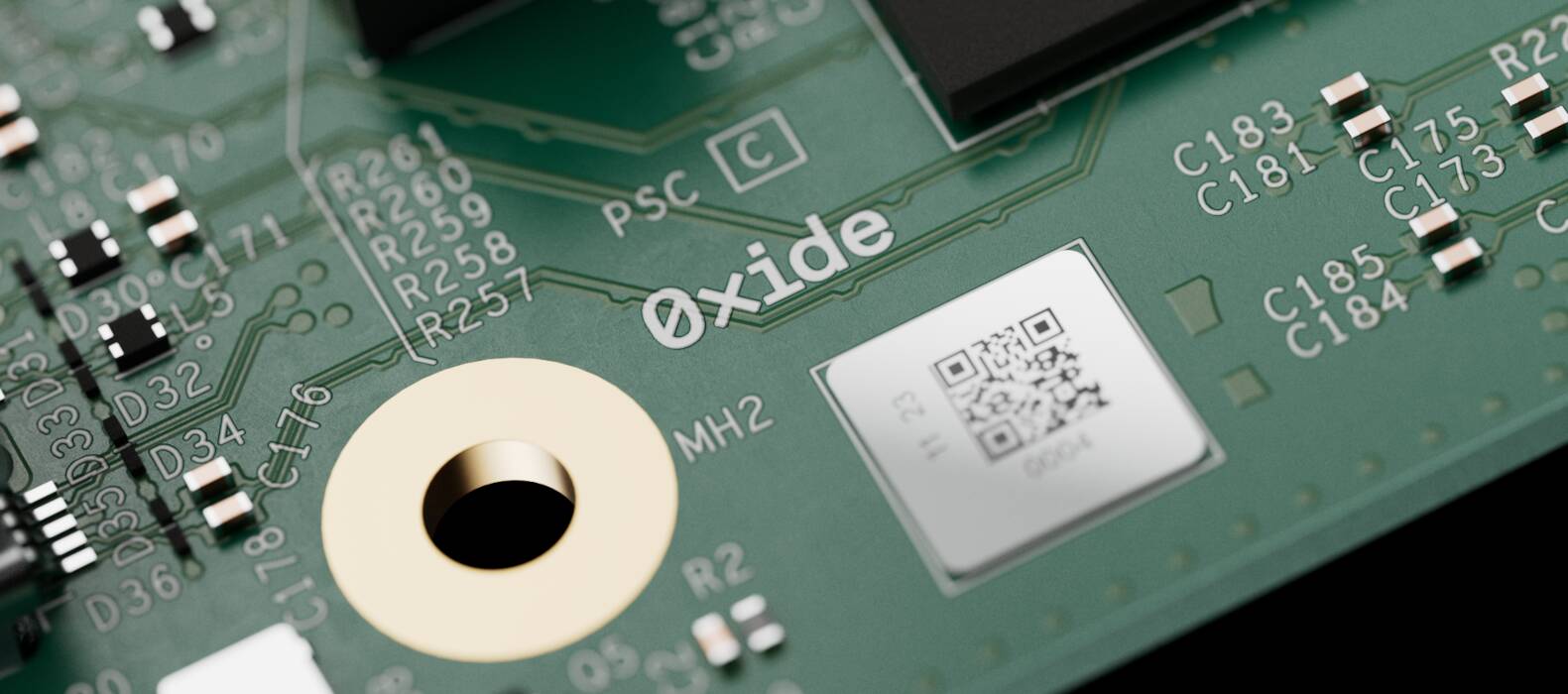

Looking at Oxide's rackscale hardware, you'd honestly be forgiven for thinking it's just another cabinet full of 2U systems. But slide out one of the hyperscale-inspired compute sleds, and it becomes apparent this is something different.

The 3,000 pound system stands nine feet tall (2.74 meters), draws 15kW under load, and connects up to 32 compute nodes and 12.8Tbps of switching capacity using an entirely custom software stack.

While it might look like any other rack, Oxide's rackscale systems are more like giant blade servers ... Source: Oxide Computer

In many respects, the platform answers the question: what would happen if you just built a rack-sized blade server? As strange as that sounds, that's not far off from what Oxide has actually done.

Rather than kludging together a bunch of servers, networking, storage, and all of the different software platforms required to use and manage them, Oxide says its rackscale systems can handle all of that using a consistent software interface.

As you might expect, actually doing that isn't as simple as it might sound. According to CTO Bryan Cantrill, achieving this goal meant building most of the hardware and software stack from scratch.

Bringing hyperscale convenience home

Building from scratch had a couple of advantages in terms of form factor. Cantrill says Oxide was able to integrate several hyperscale niceties, like a blind-mate backplane for direct current (DC) power delivery, which isn't the kind of thing commonly found on enterprise systems.

"It's just comical that everyone deploying at scale has a DC bus bar and yet you cannot buy a DC bus-bar-based system from Dell, HP, or Supermicro, because, quote unquote, no one wants it," Cantrill quipped.

Because the rack itself functions as the chassis, Oxide was also able to get away with non-standard form factors for its compute nodes. This allowed the company to use larger, quieter, and less power-hungry fans.

As we've covered in the past, fans can account for up to 20 percent of a server's power draw. But in Oxide's rack, Cantrill claims that figure is closer to 2 percent during normal operation.

Where things really get interesting is how Oxide is actually going about managing the compute hardware. Each rack can hold up to 32 compute sleds, each equipped with a 64-core third-gen Epyc processor, your choice of 512GB or 1TB of DDR4, and up to ten 2.5-inch U.2 NVMe drives. We're told Oxide plans to upgrade to DDR5 with the launch of AMD's Turin platform later this year, and those blades will be backwards compatible with existing racks.

These resources are divided up into things like virtual machines using a custom hypervisor based on Bhyve and Illumos Unix. This might seem like a strange choice over KVM or Xen, which are used extensively by cloud providers, but sticking with a Unix-based hypervisor makes sense considering Cantrill's history at Sun Microsystems.

For lights-out-style management of the underlying hardware, Oxide went a step further and developed a home-grown replacement for the baseboard management controller (BMC). "There's no ASpeed BMC on the system. That's gone," Cantrill said. "We've replaced it with a slimmed down service processor that runs and operates a de novo operating system of our design called Hubris. It's an all Rust system that has really low latency and is on its own dedicated network."

"Because we have super low latency to the rack, we can actually do meaningful power management," he added, explaining that this means Oxide's software platform can take advantage of power management features baked into AMD's processors in a way that's not possible without tracking things like power draw in real time.

This, he claims, means a customer can actually take a 15kW rack and configure it to run in an 8kW envelope by forcing the CPU to run on less power.
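To put rough numbers on that claim, here is a minimal sketch of how a rack-level cap translates into a per-sled budget. Only the 15kW, 8kW, and 32-sled figures come from Oxide. The even split, the `overhead_w` reserve for switches and fans, and the function name are assumptions for illustration, not a description of how Oxide's control plane actually allocates power.

```python
def per_sled_budget_watts(rack_cap_w: float, sleds: int, overhead_w: float = 0.0) -> float:
    """Split a rack-level power cap evenly across compute sleds.

    overhead_w is a hypothetical reserve for switches, fans, and power
    conversion losses; the article gives no figure for it.
    """
    if sleds <= 0:
        raise ValueError("sleds must be positive")
    usable = rack_cap_w - overhead_w
    if usable <= 0:
        raise ValueError("overhead exceeds the rack cap")
    return usable / sleds


full = per_sled_budget_watts(15_000, 32)    # 468.75 W per sled
capped = per_sled_budget_watts(8_000, 32)   # 250.0 W per sled
print(f"uncapped: {full:.2f} W, capped: {capped:.2f} W, ratio: {capped / full:.2f}")
```

Under these simplifying assumptions, an 8kW envelope leaves each sled with a little over half of its uncapped share, which is why the cap has to be enforced at the CPU rather than by trimming peripherals.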

In addition to power savings, Oxide says the integration between the service processor and hypervisor allows it to manage workloads proactively. For example, if one of the nodes starts throwing an error (we imagine this could be something as simple as a fan failing), the platform could automatically migrate any workloads running on that node to another system before it fails.
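The general idea of draining a degraded node can be sketched in a few lines. Everything here is hypothetical: the `Sled` class, the slot-based capacity model, and the "most free room first" placement rule are stand-ins chosen for illustration, and say nothing about how Oxide's software actually schedules migrations.

```python
from dataclasses import dataclass, field


@dataclass
class Sled:
    name: str
    capacity: int                      # VM slots, a stand-in for real resources
    healthy: bool = True
    vms: list[str] = field(default_factory=list)

    def free_slots(self) -> int:
        return self.capacity - len(self.vms)


def evacuate(failing: Sled, rack: list[Sled]) -> dict[str, str]:
    """Move VMs off a degraded sled onto the healthy sled with the most room.

    Returns a mapping of VM name to destination sled name. VMs that cannot
    be placed anywhere stay where they are.
    """
    failing.healthy = False
    moves: dict[str, str] = {}
    for vm in list(failing.vms):
        targets = [s for s in rack if s.healthy and s is not failing and s.free_slots() > 0]
        if not targets:
            break
        dest = max(targets, key=Sled.free_slots)
        failing.vms.remove(vm)
        dest.vms.append(vm)
        moves[vm] = dest.name
    return moves


rack = [
    Sled("sled-0", capacity=4, vms=["vm-a", "vm-b"]),
    Sled("sled-1", capacity=4, vms=["vm-c"]),
    Sled("sled-2", capacity=4),
]
print(evacuate(rack[0], rack))
```

The interesting part in a real system is the trigger rather than the placement: the sketch assumes something upstream, such as a service processor reporting a fan fault, has already decided the sled is on its way out.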

This co-design ethos also extends to the company's approach to networking.

Where you might expect to see a standard white box switch from Broadcom or Marvell, Oxide has instead built its own based on Intel's Tofino 2 ASICs and capable of a combined 12.8 Tbps of throughput. And as you might have guessed at this point, this too is running a custom network operating system (NOS), which ironically runs on an AMD processor.

We'll note that Oxide's decision to go with the Tofino line is interesting since, as of last year, Intel has effectively abandoned it. But neither Cantrill nor CEO Steve Tuck seems too worried, and Dell'Oro analyst Sameh Boujelbene has previously told us that 12.8 Tbps is still quite a bit for a top-of-rack switch.

Like DC power, networking for the systems is pre-plumbed into the backplane, providing 100 Gbps of connectivity to each of the systems. "Once that is done correctly in manufacturing and validated, and verified, and shipped you never have to re-cable the system," Cantrill explained.

That said, we wouldn't want to be the techie charged with pulling apart the backplane if anything did go wrong.

Limited options

Obviously, Oxide's rackscale platform does come with a couple of limitations: namely, you're stuck working with the hardware it supports.

At the moment, there aren't all that many options. You've got a general-purpose compute node with onboard NVMe for storage. In the base configuration, you're looking at a half-rack system with a minimum of 16 nodes totaling 1,024 cores and 8TB of RAM — twice that for the full config.
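Those totals follow directly from the per-sled specs, and a quick calculation shows the 8TB figure corresponds to the 512GB memory option. The sketch below uses only numbers quoted in this article (64 cores per sled, 512GB or 1TB of RAM, 16 or 32 sleds); the function and constant names are ours.

```python
CORES_PER_SLED = 64                # one 64-core Epyc per sled
MEMORY_OPTIONS_GB = (512, 1024)    # 512GB or 1TB of DDR4 per sled


def rack_totals(sleds: int, memory_gb_per_sled: int) -> tuple[int, float]:
    """Return (total cores, total memory in TB), treating 1TB as 1024GB."""
    if memory_gb_per_sled not in MEMORY_OPTIONS_GB:
        raise ValueError("unsupported memory option")
    return sleds * CORES_PER_SLED, sleds * memory_gb_per_sled / 1024


for sleds in (16, 32):
    for mem in MEMORY_OPTIONS_GB:
        cores, tb = rack_totals(sleds, mem)
        print(f"{sleds} sleds x {mem}GB: {cores} cores, {tb:g}TB RAM")
```

By the same arithmetic, a full rack of 1TB sleds would come to 2,048 cores and 32TB of RAM.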

Depending on your needs, we can imagine there are going to be a fair number of people for whom even the minimum configuration might be a bit much.

For the moment, if you need support for alternative storage, compute, or networking, you're not going to be putting it in an Oxide rack. That said, Oxide's networking is still Ethernet, so there's nothing stopping you from plunking a standard 19-inch chassis down next to one of these things and cramming it full of GPU nodes, storage servers, or any other standard rack mount components you might need.

As we mentioned earlier, Oxide will be launching new compute nodes based on AMD's Turin processor family, due out later this year, so we may see more variety in terms of compute and storage then.

All CPUs and no GPUs? What about AI?

With all the hype around AI, the importance of accelerated computing hasn't been lost on Cantrill or Tuck. However, Cantrill notes that while Oxide has looked at supporting GPUs, he's actually much more interested in APUs like AMD's newly launched MI300A.

The APU, which we explored at length back in December, combines 24 Zen 4 cores, six CDNA 3 GPU dies, and 128GB of HBM3 memory into a single socket. "To me the APU is the mainstreaming of this accelerated computer where we're no longer having to have these like islands of acceleration," Cantrill said.

In other words, by having the CPU and GPU share memory, you can cut down on a lot of data movement, making APUs more efficient. It probably doesn't hurt that APUs also cut down on the complexity of supporting multi-socket systems in the kind of space- and thermally-constrained form factor Oxide is working with.

While the hardware may be unique to Oxide, its software stack is at least being developed in the open on GitHub. So, if you do buy one of these things and the company folds or gets acquired, you should at least be able to get your hands on the software required to keep otherwise good hardware from turning into a very expensive brick. ®
