Nvidia's 900 Tons Of GPU Muscle Bulks Up Server Market, Slims Down Wallets

The server market for the near future is going to be about GPUs, GPUs, and more GPUs, according to Omdia. The market researcher estimates that the Nvidia H100 GPUs shipped during calendar Q2 alone weighed more than 900 tons.

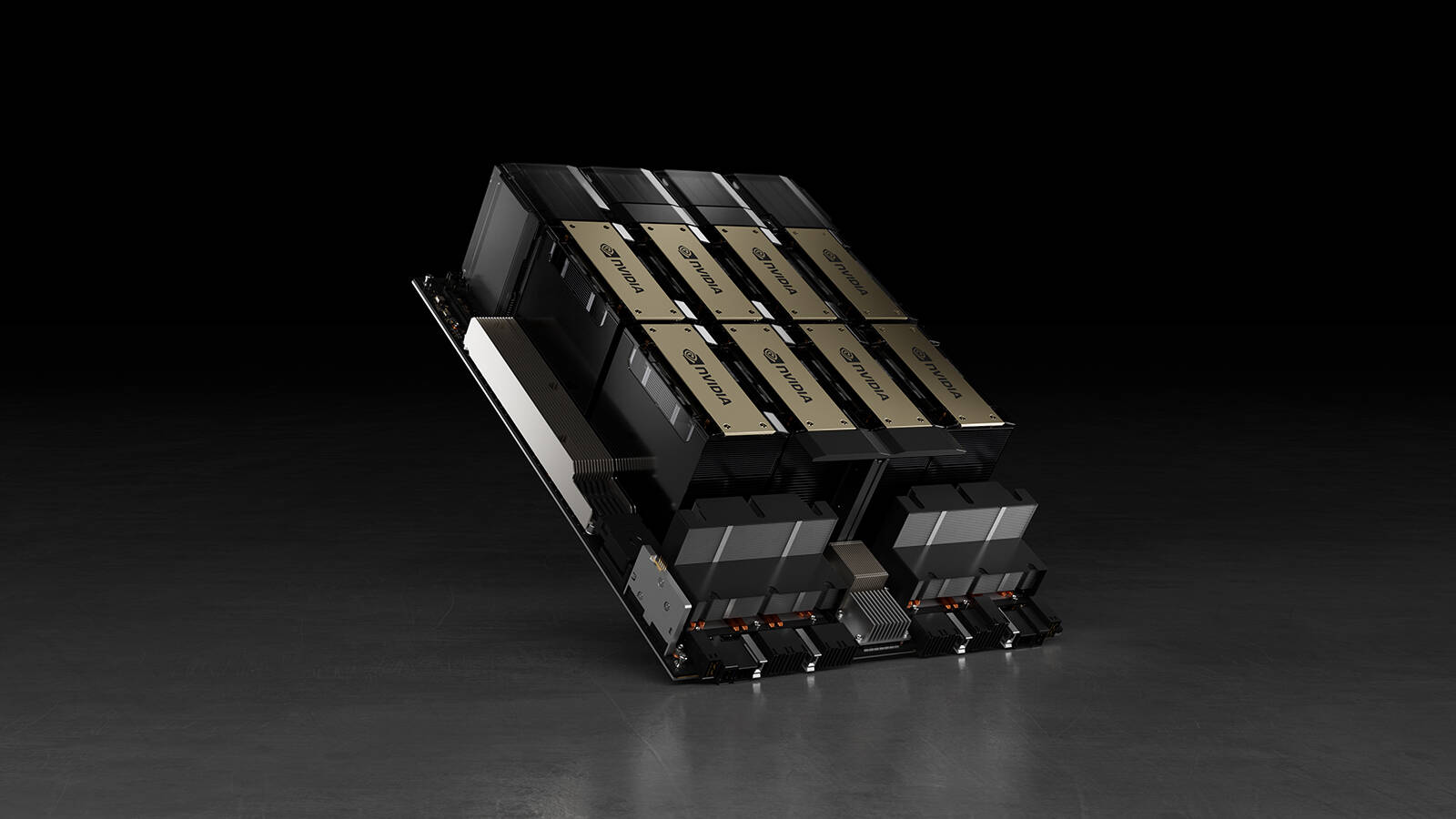

This headline-grabbing figure comes from the company's latest Cloud and Data Center Market Update, which notes that the shift in datacenter investment priorities it highlighted previously is continuing apace. According to the report, demand for servers fitted with eight GPUs for AI processing has pushed up average prices while squeezing investment in other areas.

As a consequence, Omdia has cut its estimate for 2023 server shipments by another 1 million units to 11.6 million, a 17 percent decline on last year's figure. At the same time, however, the average price of a server has risen by more than 30 percent both quarter-on-quarter and year-on-year, because hyperscalers are pouring investment into these high-spec boxes.

The report states that just over 300,000 of Nvidia's H100 GPUs found their way into the assembly lines of server makers, with the 900 tons figure based on each GPU with its heatsink weighing in at about 3kg.
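The 900-ton figure is a simple multiplication, sketched below as a sanity check. The report's unit isn't spelled out here, so the sketch assumes metric tonnes (1,000 kg); measured in US short tons, the same shipment would come to roughly 990 tons. The constant names are illustrative, not Omdia's.

```python
# Back-of-envelope check of Omdia's weight figure.
# Assumption: "tons" means metric tonnes (1,000 kg); the exact unit isn't stated.

H100_UNITS_Q2 = 300_000         # "just over 300,000" H100s, rounded down
KG_PER_GPU_WITH_HEATSINK = 3.0  # "about 3kg" per GPU including its heatsink
KG_PER_METRIC_TONNE = 1_000
KG_PER_US_SHORT_TON = 907.18474


def shipment_weight_kg(units: int, kg_each: float) -> float:
    """Total shipped weight in kilograms."""
    return units * kg_each


total_kg = shipment_weight_kg(H100_UNITS_Q2, KG_PER_GPU_WITH_HEATSINK)
print(f"{total_kg / KG_PER_METRIC_TONNE:,.0f} metric tonnes")
print(f"{total_kg / KG_PER_US_SHORT_TON:,.0f} US short tons")
```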

With each H100 carrying an eye-watering price tag of approximately $21,000, Omdia now, paradoxically, expects total server market revenue for 2023 to come in at $114 billion, up 8 percent year-on-year, despite markedly lower unit shipments.
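The paradox of fewer servers but more revenue comes down to average revenue per unit. The sketch below back-calculates 2022 from the quoted 2023 forecasts and percentage changes; the 2022 values are derived here, not figures taken from the report.

```python
# Back-calculates 2022 from Omdia's 2023 forecasts and quoted year-on-year changes.
# The 2022 values are derived here, not figures taken from the report.

UNITS_2023 = 11.6e6    # forecast 2023 server shipments
UNIT_CHANGE = -0.17    # 17 percent fewer units than 2022
REVENUE_2023 = 114e9   # forecast 2023 server revenue, USD
REVENUE_CHANGE = 0.08  # 8 percent more revenue than 2022

units_2022 = UNITS_2023 / (1 + UNIT_CHANGE)
revenue_2022 = REVENUE_2023 / (1 + REVENUE_CHANGE)

# Average revenue per server shipped (revenue divided by units).
avg_2022 = revenue_2022 / units_2022
avg_2023 = REVENUE_2023 / UNITS_2023

# Algebraically this equals (1 + REVENUE_CHANGE) / (1 + UNIT_CHANGE) - 1.
avg_change = avg_2023 / avg_2022 - 1

print(f"Implied 2022 shipments: {units_2022 / 1e6:.1f}M servers")
print(f"Revenue per server: ${avg_2022:,.0f} -> ${avg_2023:,.0f} ({avg_change:+.0%})")
```

The roughly 30 percent rise in revenue per unit this implies is an annual average, so it is only loosely comparable with the quarterly price increase Omdia reports.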

This huge demand for AI servers bristling with GPUs is largely driven by the hyperscale companies and cloud providers, according to Omdia, with the beneficiaries being the original design manufacturer (ODM) server makers – the so-called white box vendors.

It claims that a large fraction of the eight-GPU servers shipped in the second quarter were built by ZT Systems, described as a fast-growing, privately owned US cloud server manufacturer. Omdia's evidence is twofold: a claim that as much as 17 percent of Nvidia's $13.5 billion second-quarter revenue was driven by a single vendor, and the fact that Nvidia CEO Jensen Huang showcased a DGX server manufactured by ZT Systems at Computex Taipei.

Omdia also suspects that much of the growth in demand for eight-GPU servers during Q2 came from Meta, based on Nvidia previously saying that 22 percent of the quarter's revenue was driven by a single cloud service provider.
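To put those percentages in dollar terms, the sketch below applies them to Nvidia's quoted $13.5 billion Q2 revenue. The ZT Systems and Meta labels reflect Omdia's inferences, not Nvidia disclosures, and the 17 percent is an upper bound ("as much as"). The two amounts are deliberately not added together, since they come from separate disclosures that may overlap.

```python
# Converts the revenue shares quoted above into approximate dollar amounts.
# The ZT Systems and Meta attributions are Omdia's inferences, not Nvidia disclosures.

NVIDIA_Q2_REVENUE_USD = 13.5e9


def share_in_usd(total_usd: float, share: float) -> float:
    """Dollar value of a fractional share of total revenue."""
    return total_usd * share


# Not summed: the two figures come from separate disclosures that may overlap.
shares = {
    "single vendor (Omdia: likely ZT Systems), upper bound": 0.17,
    "single cloud provider (Omdia: likely Meta)": 0.22,
}

for label, share in shares.items():
    usd = share_in_usd(NVIDIA_Q2_REVENUE_USD, share)
    print(f"{label}: ~${usd / 1e6:,.0f}M")
```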

And there is more of this to come. The report predicts that rapid adoption of servers configured for AI processing will continue through the second half of this year and the first half of 2024.

However, Omdia forecasts a better-than-seasonal rebound in demand for general-purpose servers during the current third quarter and the next as enterprise demand picks up. This will see revenue in 3Q23 up by 13 percent year-on-year, accelerating to 29 percent in 4Q23, it predicts.

Further out, Omdia expects the continued deployment of eight-GPU servers to drive server market revenue up 51 percent year-over-year in the first half of 2024, with a million H100 GPUs forecast to find their way into systems.
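For scale, a million H100s over two quarters implies a higher quarterly run rate than Q2 2023's roughly 300,000 units. The sketch below assumes the approximately $21,000 per-GPU price quoted for Q2 2023 still holds in 1H24, which is an assumption rather than anything Omdia states.

```python
# Compares Omdia's 1H24 H100 forecast with the Q2 2023 figure quoted earlier.
# Assumption: the ~$21,000 per-GPU price from Q2 2023 still applies in 1H24.

Q2_2023_UNITS = 300_000          # "just over 300,000", rounded down
FORECAST_1H24_UNITS = 1_000_000  # "a million H100 GPUs" across two quarters
USD_PER_GPU = 21_000

avg_units_per_quarter_1h24 = FORECAST_1H24_UNITS / 2
run_rate_increase = avg_units_per_quarter_1h24 / Q2_2023_UNITS - 1
gpu_value_1h24 = FORECAST_1H24_UNITS * USD_PER_GPU

print(f"Average per quarter in 1H24: {avg_units_per_quarter_1h24:,.0f} "
      f"({run_rate_increase:+.0%} vs Q2 2023)")
print(f"Value of those GPUs at ${USD_PER_GPU:,} each: ${gpu_value_1h24 / 1e9:.0f}B")
```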

Yet the analyst cautions that the rapid investment in AI training capabilities it is seeing among hyperscalers and cloud providers should not be confused with rapid adoption. AI usage is still generally low, it said, citing a survey that found only 18 percent of US adults have used ChatGPT. Generative AI also currently makes up only a small fraction of enterprises' cloud computing costs, as The Register reported recently.

There are also downsides to this huge investment in power-hungry GPU systems for AI training. One media industry exec recently warned that AI will "burn the world" unless radical action is taken to implement more sustainable practices.

Speaking at the International Broadcasting Convention (IBC) in Amsterdam this month, Ad Signal CEO and founder Tom Dunning said that while we can't ask organizations to give up the commercial benefits of AI, it is clear that "there's an urgent need to minimise its carbon impact."

Datacenters are now responsible for 2.5 to 3.7 percent of all carbon dioxide emissions, Dunning claimed, and AI adoption is projected to grow at a 37 percent compound annual growth rate (CAGR) between now and 2030. ®
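A 37 percent CAGR compounds quickly. Assuming 2023 as the starting year (the quote only says "now"), seven years of growth at that rate multiplies the starting figure roughly ninefold, whatever adoption metric the projection measures.

```python
# Compound growth implied by a 37 percent CAGR.
# Assumption: "now" means 2023, giving seven compounding years to 2030.

CAGR = 0.37
START_YEAR, END_YEAR = 2023, 2030


def growth_multiple(rate: float, years: int) -> float:
    """Factor by which a quantity grows after compounding at `rate` for `years`."""
    return (1 + rate) ** years


years = END_YEAR - START_YEAR
multiple = growth_multiple(CAGR, years)
print(f"{years} years at {CAGR:.0%} CAGR: about {multiple:.1f}x")
```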
