Nvidia Creates Open Server Spec To House Its Own Chips – And The Occasional X86

Computex Nvidia has created an open design for servers to house its accelerators, arguing that CPU-centric server designs aren't up to the job of housing multiple GPUs and SmartNICs.

Nvidia CEO Jensen Huang announced the design – called MGX – at the Computex 2023 conference in Taiwan on Monday. He argued it's necessary because existing server designs weren't created to cope with the heat produced – and power consumed – by Nvidia's accelerators.

The chassis design was unveiled during a keynote that saw Huang bound onto stage for his first public speech in four years, leading him to ask the audience to wish him luck.

The crowd was ready to do that, and much more: punters literally ran to the front of the room to be closer to Huang. Wearing his trademark black leather jacket, the CEO delivered a two-hour speech peppered with jokes in Chinese and even an expression of appreciation for a notorious Taiwanese snack called "stinking tofu." The crowd ate it up. A Taiwanese software project manager – who cheekily occupied a seat reserved for the press so she could see Huang more clearly – told us she attended because the Nvidia boss was sure to offer unparalleled insights on AI. She was rapt throughout.

Huang's pitch was that an era of computing history that started with the 1965 debut of IBM's System/360 has come to an end. In Huang's telling, the System/360 gave the world the primacy of the CPU and the ability to scale systems.

That architecture has dominated the world since, he opined, but CPU performance improvement has plateaued, and accelerator-assisted computing is the future.

That argument is, of course, Nvidia's core tenet.

But Huang backed it up with data about the effort required to produce a large language model (LLM), citing a hypothetical 960-server system that cost $10 million and consumed 11 GWh to train one LLM.

The CEO asserted that just a pair of GPU-packed, Nvidia-powered servers costing $400,000 can do the same job while consuming just 0.13 GWh. He also suggested a $34 million Nvidia-powered rig of 172 servers could produce 150 LLMs while consuming 11 GWh.
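To put those claims side by side, here is a minimal Python sketch of the arithmetic. It takes Huang's figures as quoted above at face value; the `Rig` class, the `compare` helper, and the variable names are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rig:
    """One of the hypothetical systems quoted in the keynote."""
    name: str
    servers: int
    cost_usd: float
    energy_gwh: float
    llms_trained: int

    @property
    def cost_per_llm(self) -> float:
        return self.cost_usd / self.llms_trained

    @property
    def energy_per_llm(self) -> float:
        return self.energy_gwh / self.llms_trained


def compare(baseline: Rig, other: Rig) -> dict:
    """How many times cheaper and less power-hungry `other` is per LLM."""
    return {
        "cost_ratio": baseline.cost_per_llm / other.cost_per_llm,
        "energy_ratio": baseline.energy_per_llm / other.energy_per_llm,
    }


# Figures as quoted in the keynote; nothing here is independently measured.
baseline = Rig("960-server system", 960, 10_000_000, 11.0, 1)
gpu_pair = Rig("Pair of GPU servers", 2, 400_000, 0.13, 1)
gpu_rig = Rig("172-server GPU rig", 172, 34_000_000, 11.0, 150)

if __name__ == "__main__":
    for rig in (gpu_pair, gpu_rig):
        result = compare(baseline, rig)
        print(
            f"{rig.name}: {result['cost_ratio']:.1f}x lower cost per LLM, "
            f"{result['energy_ratio']:.1f}x lower energy per LLM"
        )
```

On those numbers, the pair of GPU servers comes out 25 times cheaper and roughly 85 times less power-hungry per model than the 960-server system, while the larger rig works out to about $227,000 and 0.07 GWh per LLM.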

Huang's theory is that this sort of rig will soon be on many organizations' shopping lists because, while datacenters are being built at a furious rate, competition for space on racks and electricity will remain fierce – many users will therefore look to rearchitect their datacenters for greater efficiency and density.

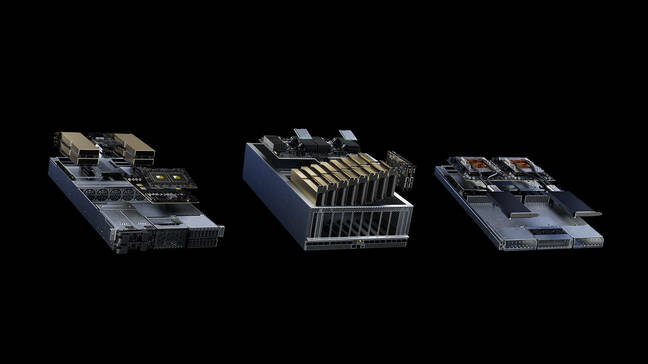

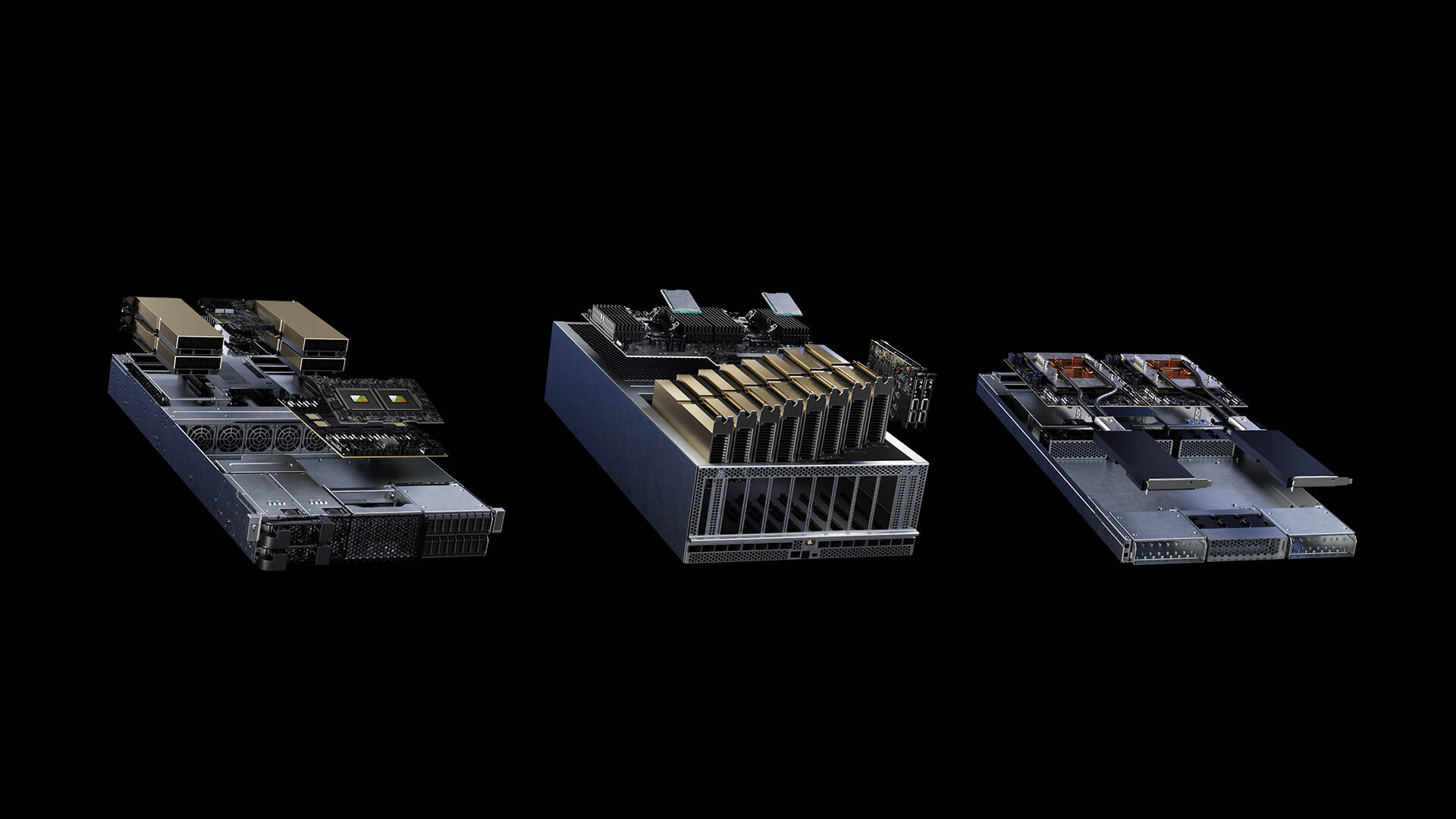

Which is where the MGX spec comes in – by offering a design that can use more of Nvidia's wares in a smaller footprint than would be the case if they were shoehorned into a machine powered by a CPU alone. Huang suggested MGX delivers the density users require. And it can happily house boring old x86 CPUs if required, alongside all those lovely Nvidia accelerators.

Taiwanese OEMs ASRock Rack, ASUS, GIGABYTE, Pegatron, QCT and Supermicro have all signed up to produce the servers, which Huang said can be deployed in over 100 configurations in 1U, 2U, and 4U form factors.

In August QCT will deliver an MGX design named the S74G-2U that will offer the GH200 Grace Hopper Superchip. In the same month Supermicro's ARS-221GL-NR will include the Grace CPU Superchip.

Huang also announced that Grace Hopper production is now in full swing. The CEO suggested it could find a home running 5G networks – and some generative AI at the same time – to tidy up video chats as they pass through the network instead of leaving client devices to do all the work of compressing and decompressing video.

He also outlined a massive switch – the Spectrum-X Networking Platform – to improve the efficiency of Ethernet in large-scale clouds running AI workloads.

And he made vague references to Nvidia improving support for its AI software stack so it's more suitable for enterprise use. He suggested Red Hat as the model Nvidia intends to emulate.

Robotics were also on the CEO's mind. Nvidia has opened a platform called Isaac that combines software and silicon to enable the creation of autonomous bots for industrial use – especially in warehouses, where Huang said they'll roll around to move goods.

Those bots will be able to do so after being created on digital twins of real-world spaces developed using servers packed full of Nvidia acceleration hardware.

Which again illustrates why Nvidia has created MGX. ®
