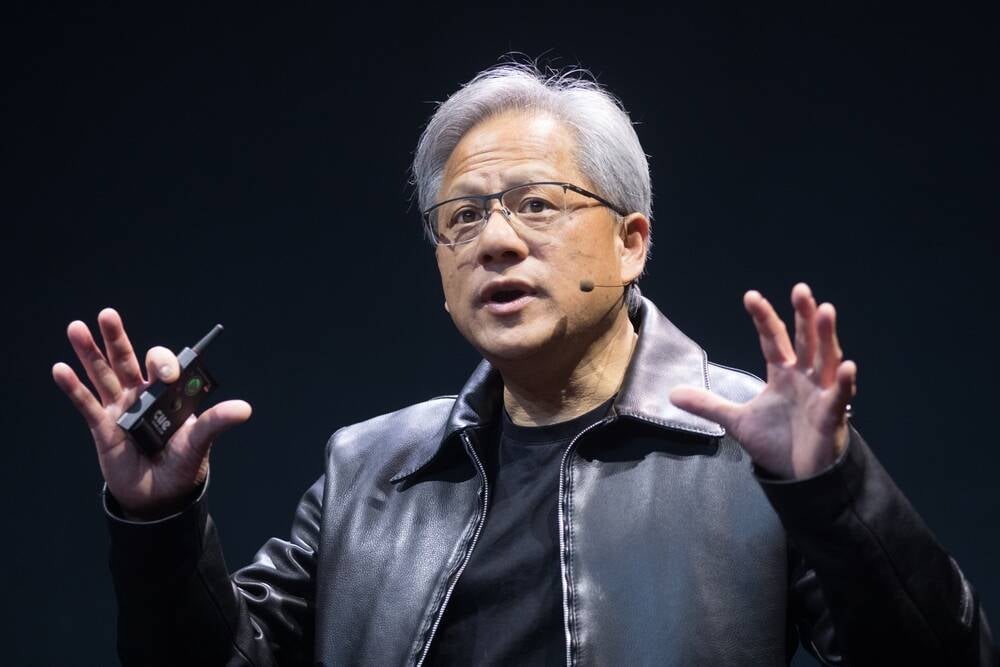

Nvidia CEO To Nervous Buyers And Investors: Chill Out, Blackwell Production Is Heating Up

Nvidia CEO Jensen Huang has attempted to quell concerns over the reported late arrival of the Blackwell GPU architecture and the lack of return on investment (ROI) from AI spending.

"Demand is so great that delivery of our components and our technology and our infrastructure and software is really emotional for people because it directly affects their revenues, it directly affects their competitiveness," Huang explained, according to a transcript of remarks he made at the Goldman Sachs Tech Conference on Wednesday. "It's really tense. We've got a lot of responsibility on our shoulders and we're trying the best we can."

The comments follow reports that Nvidia's next-generation Blackwell accelerators won't ship in the second half of 2024 as Huang had previously promised. Nor has the GPU giant's admission, during its Q2 earnings call last month, of a manufacturing defect that necessitated a mask change helped this perception. However, speaking with Goldman Sachs's Toshiya Hari on Wednesday, Huang reiterated that Blackwell chips were already in full production and would begin shipping in calendar Q4.

Unveiled at Nvidia's GTC conference last northern spring, the GPU architecture promises between 2.5x and 5x higher performance and more than twice the memory capacity and bandwidth of the H100-class devices it replaces. At the time, Nvidia said the chips would ship sometime in the second half of the year.

Despite Huang's reassurance that Blackwell will ship this year, talk of delays has sent Nvidia's share price on a roller coaster ride – made more chaotic by disputed reports that the GPU giant had been subpoenaed by the DoJ and faces a patent suit brought by DPU vendor Xockets.

According to Huang, demand for Blackwell parts has exceeded that for the previous-generation Hopper products which debuted in 2022 – before ChatGPT's arrival made generative AI a must-have.

Huang told the conference that extra demand appears to be the source of many customers' frustrations.

"Everybody wants to be first and everybody wants to be most … the intensity is really, really quite extraordinary," he said.

Accelerating ROI

Huang also addressed concerns about the ROI associated with the pricey GPU systems powering the AI boom.

From a hardware standpoint, Huang's argument boils down to this: the performance gains of GPU acceleration far outweigh the higher infrastructure costs.

"Spark is probably the most used data processing engine in the world today. If you use Spark and you accelerate it, it's not unusual to see a 20:1 speed-up," he claimed, adding that even if that infrastructure costs twice as much, you're still looking at a 10x savings.
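Huang's arithmetic can be made explicit. The sketch below assumes cost scales linearly with run time, i.e. you pay an hourly rate and the accelerated system's hourly rate is twice that of the CPU system it replaces; the function name `cost_per_job_ratio` is purely illustrative and not drawn from any Nvidia material.

```python
def cost_per_job_ratio(speedup: float, hourly_cost_multiplier: float) -> float:
    """Return (accelerated cost per job) / (original cost per job).

    Assumption: cost = hourly price x run time. A job that runs `speedup`
    times faster on hardware costing `hourly_cost_multiplier` times as much
    per hour therefore costs hourly_cost_multiplier / speedup of the original.
    """
    if speedup <= 0 or hourly_cost_multiplier <= 0:
        raise ValueError("speedup and cost multiplier must be positive")
    return hourly_cost_multiplier / speedup


if __name__ == "__main__":
    # Figures quoted by Huang: a 20:1 Spark speed-up on infrastructure
    # that costs twice as much.
    ratio = cost_per_job_ratio(speedup=20, hourly_cost_multiplier=2)
    print(f"Accelerated job costs {ratio:.2f}x the original ({1 / ratio:.0f}x cheaper)")
```

The 10x figure only holds if the quoted speed-up applies to the whole job and the accelerated hardware is kept busy; idle GPU hours erode the ratio.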

According to Huang, this also extends to generative AI. "The return on that is fantastic because the demand is so great that every dollar that they [service providers] spend with us translates to $5 worth of rentals."

However, as we've previously reported, the ROI on the applications and services built on this infrastructure remains far fuzzier – and the long-term practicality of dedicated AI accelerators, including GPUs, is up for debate.

Addressing AI use cases, Huang was keen to highlight his own firm's use of custom AI code assistants. "I think the days of every line of code being written by software engineers, those are completely over."

Huang also touted the application of generative AI to computer graphics. "We compute one pixel, we infer the other 32," he explained, in an apparent reference to Nvidia's DLSS tech, which uses AI upscaling and frame generation to boost frame rates in video games.

Technologies like these, Huang argued, will also be critical for the success of autonomous vehicles, robotics, digital biology, and other emerging fields.


Densified, vertically integrated datacenters

While Huang remains confident the return on investment from generative AI technologies will justify the extreme cost of the hardware required to train and deploy it, he also suggested smarter datacenter design could help drive down costs.

"When you want to build this AI computer people say words like super-cluster, infrastructure, supercomputer for good reason – because it's not a chip, it's not a computer per se. We're building entire datacenters," Huang noted, in an apparent reference to Nvidia's modular cluster designs, which it calls SuperPODs.

Accelerated computing, Huang explained, allows for a massive amount of compute to be condensed into a single system – which is why he says Nvidia can get away with charging millions of dollars per rack. "It replaces thousands of nodes."

However, Huang made the case that putting these incredibly dense systems – as much as 120 kilowatts per rack – into conventional datacenters is less than ideal.

"These giant datacenters are super inefficient because they're filled with air, and air is a lousy conductor of [heat]," he explained. "What we want to do is take that few, call it 50, 100, or 200 megawatt datacenter which is sprawling, and you densify it into a really, really small datacenter."

Smaller datacenters can take advantage of liquid cooling – which, as we've previously discussed, is often a more efficient way to cool systems.

How successful Nvidia will be at driving this datacenter modernization remains to be seen. But it's worth noting that with Blackwell, its top-specced parts are designed for liquid cooling. ®
