Intel Promises Next Year's Xeons Will Challenge AMD On Memory, IO Channels

Intel used the Hot Chips 2023 conference today to shed light on the architectural changes, including improvements to memory subsystems and IO connectivity, coming to its next-gen Xeon processors.

While the x86 giant's fifth-gen Xeon Scalable processors are still a few months off, the chipmaker is already looking ahead to its next-gen Sierra Forest and Granite Rapids Xeons to catch up with long-time rival AMD, particularly on memory and IO.

Intel's current crop of Xeon Scalable processors, code-named Sapphire Rapids, tops out at eight channels of DDR5 DRAM at 4,800MT/s and 80 lanes of PCIe 5.0 / CXL 1.1 connectivity. AMD's Epyc 4 platform, by comparison, offers 12 channels and 128 PCIe lanes.

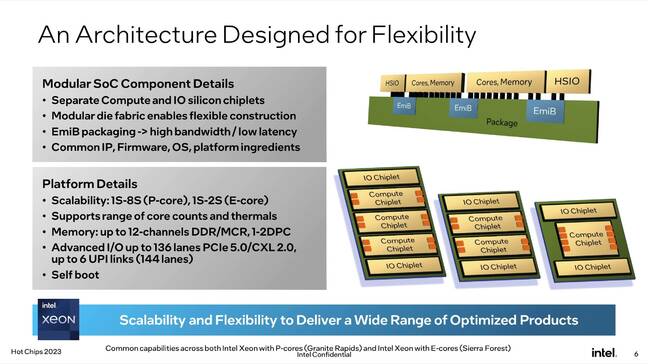

Intel's next-gen Xeons, likely the sixth generation, will move to a 12-channel configuration with support for both DDR5 and MCR DIMMs, as well as 136 lanes of PCIe 5.0 / CXL 2.0 connectivity. What's more, Intel says the processor family will support two-DIMM-per-channel (2DPC) configurations out of the gate, something AMD ran into trouble with when it moved to 12 memory channels for Epyc 4 last November.

Multiplexer combined rank (MCR) DIMMs are interesting because they promise substantial bandwidth improvements over traditional DDR5 DRAM: a buffer on the module accesses two ranks at once and multiplexes their data onto the channel, roughly doubling the effective transfer rate. In March, Intel demoed a pre-production Granite Rapids Xeon running MCR modules at 8,800MT/s, nearly twice the 4,400 to 4,800MT/s of the DDR5 found on server platforms today.

"We'll get just under a three-times improvement in memory bandwidth going from Sapphire Rapids to this new platform," Intel Fellow Ronak Singhal said in a briefing ahead of Hot Chips.
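To see where a "just under three-times" figure could come from, here is a back-of-the-envelope calculation of peak theoretical memory bandwidth per socket. It is a sketch under our own assumptions, not Intel's methodology: each DDR5 channel moves 8 bytes (64 data bits, ECC excluded) per transfer, every channel runs at its rated speed, and the next-gen platform is fed with MCR DIMMs at the 8,800MT/s Intel demoed in March, since shipping speeds have not been confirmed. The helper name `peak_bandwidth_gbs` is hypothetical.

```python
# Back-of-the-envelope peak DRAM bandwidth per socket.
# Assumption: each DDR5 channel delivers 8 bytes (64 data bits, ECC bits
# excluded) per transfer, with every channel populated and running at its
# rated transfer rate. Real sustained bandwidth is lower.
BYTES_PER_TRANSFER = 8


def peak_bandwidth_gbs(channels: int, mt_per_s: int) -> float:
    """Theoretical peak bandwidth in decimal GB/s."""
    # channels * MT/s * bytes per transfer = MB/s; divide by 1,000 for GB/s.
    return channels * mt_per_s * BYTES_PER_TRANSFER / 1_000


SPR = "Sapphire Rapids (8ch DDR5-4800)"
EPYC4 = "Epyc 4 (12ch DDR5-4800)"
NEXT = "Next-gen Xeon (12ch MCR-8800)"  # assumes the 8,800MT/s demo speed

platforms = {
    SPR: peak_bandwidth_gbs(8, 4_800),
    EPYC4: peak_bandwidth_gbs(12, 4_800),
    NEXT: peak_bandwidth_gbs(12, 8_800),
}

for name, gbs in platforms.items():
    print(f"{name}: {gbs:.1f} GB/s")

ratio = platforms[NEXT] / platforms[SPR]
print(f"Next-gen vs Sapphire Rapids: {ratio:.2f}x")  # prints 2.75x
```

The result, about 2.75x (844.8GB/s versus 307.2GB/s), sits just under three times, in line with Singhal's figure. By the same arithmetic, the demoed configuration would offer roughly 1.8x the peak bandwidth of Epyc 4 running DDR5-4800.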

Intel's 6th-Gen Xeon Scalable processors will come in E-core (Sierra Forest) and P-core (Granite Rapids) versions and support up to 12 channels of DDR5 ... Source for slides: Intel.

Another notable change coming to Intel's next-gen Xeon Scalable processors is greater consolidation of functionality at the platform level. Over the years, chipmakers have worked to move functionality off the motherboard and into the socket. AMD folded the chipset into its Epyc processors years ago, and with Sierra Forest and Granite Rapids, Intel plans to do the same.

This change will see Intel move to an AMD-style chiplet architecture, with separate compute and IO dies within the processor package. As you may recall, while Sapphire Rapids was Intel's first Xeon to embrace a chiplet architecture, it was essentially four CPUs, each with its own memory and IO controllers, stitched together under one integrated heat spreader.

Disaggregating IO functionality from the compute die has become popular among chipmakers over the past few generations: processors from AMD and Ampere, as well as AWS's Graviton3, all feature one or more distinct IO chiplets.

More details emerge on Intel's dueling datacenter architectures

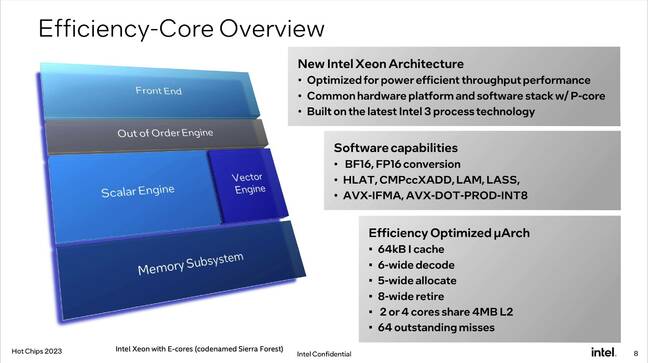

At Hot Chips, Intel also offered some insight into the features and capabilities we can expect from the corp's first efficiency-core Xeon.

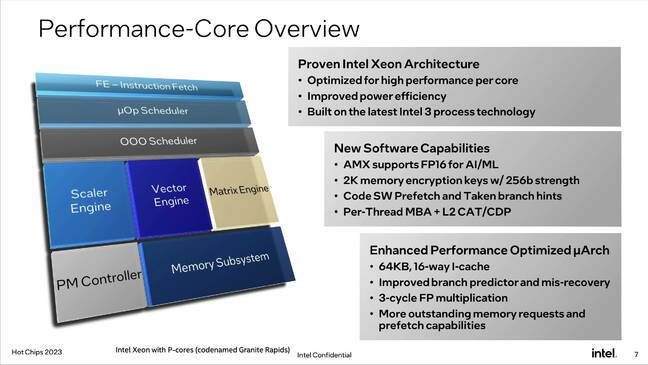

As we learned in March, Intel's next-gen Xeon Scalable processors will come in two variants: the all-E-core (all-efficiency-core) Sierra Forest for high-density scale-out workloads, and the all-P-core (all-performance-core) Granite Rapids for compute-intensive applications. And unlike AMD's Bergamo, which uses a compacted version of the Zen 4 core found in Genoa, Intel's parts will use two different core architectures.

"We think having two separate microarchitectures gives us better coverage of that continuum we're looking at, versus trying to use one microarchitecture," Singhal explained.

So, while both chips will be fabbed on Intel 3, a refinement of the Intel 4 node that began life as the chipmaker's long-delayed 7nm process, the two will have different feature sets tuned to their target workloads. For example, Intel's P-cores feature its Advanced Matrix Extensions (AMX), while this functionality appears to be absent on the E-cores.

Intel is trying to minimize the headaches these differences could cause enterprises with its AVX10 instruction set, which we took a detailed look at earlier this month.

The E-cores used in Intel's Sierra Forest Xeons will feature a streamlined core architecture optimized for efficiency and throughput, we're promised.

While details on Sierra Forest remain thin, we know the processor line will feature up to 144 cores and will be available in both single- and dual-socket configurations.

We've also learned that Intel will offer cache-optimized versions of the chip with either two or four cores sharing each 4MB pool of L2 cache. "There are some customers that will be happier with a lower core count at a higher per-core performance level. In that case, you would look at the two cores sharing the 4MB," Singhal explained.
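The trade-off Singhal describes comes down to division: the fewer cores share a 4MB L2 pool, the more cache each one gets. The sketch below works it through. The helper name is hypothetical, and so is the 36-pool figure, chosen only so that four-core pools add up to Intel's stated 144-core maximum; it is not a disclosed die configuration, and the two-core result is an illustration of holding the pool count fixed, not an announced SKU.

```python
# Per-core share of a shared L2 pool for the two Sierra Forest layouts
# Intel described: two or four cores per 4MB pool.
L2_POOL_MB = 4


def l2_per_core_mb(cores_per_pool: int, pool_mb: float = L2_POOL_MB) -> float:
    """Average L2 capacity available to each core sharing one pool."""
    return pool_mb / cores_per_pool


# Hypothetical pool count: 36 four-core pools would yield 144 cores.
HYPOTHETICAL_POOLS = 36

for cores_per_pool in (4, 2):
    cores = HYPOTHETICAL_POOLS * cores_per_pool
    share = l2_per_core_mb(cores_per_pool)
    print(f"{cores_per_pool} cores/pool: {cores} cores, {share:.0f}MB L2 per core")
```

With the pool count held fixed, halving the cores per pool halves the core count and doubles each core's L2 share from 1MB to 2MB, which is the lower-core-count, higher-per-core-performance trade Singhal points to.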

Meanwhile, those running floating-point-heavy workloads, including AI and ML, will be happy to know Sierra Forest will support both BF16 and FP16 acceleration. As we understand it, this is related to the inclusion of AVX10 support this generation.

In terms of performance, Intel is making some bold claims regarding its E-cores. At the rack level, Intel claims Sierra Forest will deliver about 2.5x more threads at 2.4x the performance-per-watt of Sapphire Rapids.

"We're basically saying that you get that density at almost the exact same per-thread performance as the most recent Xeon," Singhal said.
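Taken together, the rack-level claims say something about power. If per-thread performance is roughly unchanged, as Singhal suggests, rack throughput scales with thread count, and dividing that throughput gain by the performance-per-watt gain gives the implied change in rack power. This sketch assumes linear scaling with thread count and reads Intel's efficiency figure as a 2.4x multiplier (a literal "240 percent higher" would be 3.4x, so both are shown); these are our simplifications, and the function name is hypothetical.

```python
def implied_power_ratio(thread_ratio: float, perf_per_watt_ratio: float,
                        per_thread_perf_ratio: float = 1.0) -> float:
    """Implied rack power relative to the baseline platform.

    Assumes rack throughput = threads * per-thread performance, and
    power = throughput / (performance per watt).
    """
    throughput_ratio = thread_ratio * per_thread_perf_ratio
    return throughput_ratio / perf_per_watt_ratio


# Assumption: 2.5x threads, equal per-thread performance, 2.4x perf/watt.
print(f"{implied_power_ratio(2.5, 2.4):.2f}x rack power")
# A literal "240 percent higher" reading (3.4x) for comparison.
print(f"{implied_power_ratio(2.5, 3.4):.2f}x rack power")
```

Under the 2.4x reading, a Sierra Forest rack would draw about the same power as a Sapphire Rapids rack (roughly 1.04x) while delivering about 2.5x the throughput; under the literal 3.4x reading it would draw about three quarters of the power.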

Intel says its P-core-equipped Granite Rapids Xeons will offer higher core counts and AMX performance enhancements compared to Sapphire Rapids.

As for the chipmaker's P-core-toting Granite Rapids chips, Intel is promising higher core counts than Sapphire Rapids and improvements to the AMX engine that extend support to FP16 calculations for AI/ML workloads. Intel hasn't said how many more cores we can expect.

Other improvements detailed this week include support for larger memory encryption keys, improved prefetch and branch prediction, and much faster floating-point multiplication, to name a few.

According to Intel, Sierra Forest is slated to launch in the "first half of 2024" while Granite Rapids will follow "shortly after." ®
