Intel Abandons XPU Plan To Cram CPU, GPU, Memory Into One Package

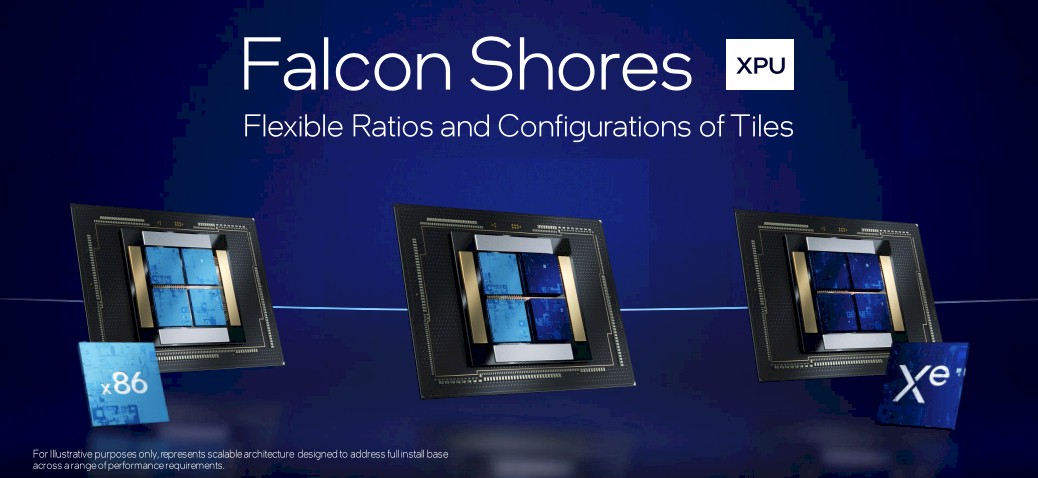

ISC Intel's grand plan to stitch CPU, GPU, and memory dies together on a single package called an XPU is dead in the water.

In a press conference ahead of this week's International Supercomputing Conference (ISC), Jeff McVeigh, VP of Intel's Supercomputing Group, revealed that the company's Falcon Shores platform wouldn't just be late, but wouldn't be an XPU at all.

"My prior push to integrate a CPU and GPU into an XPU was premature," he said, making the case that the market had changed so much in the year since Intel detailed Falcon Shores that it no longer made sense to proceed.

In a somewhat contrived analogy, McVeigh compared the situation to mountain climbing. "The thing about climbing mountains is you want to go for the summit when the right window is available. If the weather is turning bad, you're not feeling right, you don't push for the summit just because it's there. You push when you're ready, when the ecosystem is ready, when the climate is ready."

According to McVeigh, today's AI and HPC workloads are too dynamic for integration to make sense. "When the workloads are fixed, when you have really good clarity that they're not going to be changing dramatically, integration is great," he added.

Yet you may recall that the flexibility of Falcon Shores' modular chiplet architecture was supposed to be an advantage as Intel planned to offer it in multiple SKUs with more or less CPU or GPU resources, depending on the use case. This was the whole point of moving to a chiplet architecture and using packaging to stitch everything together.

With that said, the revelation shouldn't come as a shock. Intel gutted its Accelerated Computing Group in March, and later that month division chief Raja Koduri jumped ship to an AI startup. The restructure saw the cancellation of Rialto Bridge, the planned successor to the Ponte Vecchio GPUs powering Argonne National Lab's Aurora supercomputer. However, by all appearances, it looked like Intel's Falcon Shores XPUs, while delayed until 2025, had been spared the chopping block. We now know that Falcon Shores as it exists today looks very little like the vision presented by Intel this time last year. Instead of CPU, GPU, and CPU+GPU configurations, we're just getting a GPU.

McVeigh told The Register that while Falcon Shores won't be an XPU, that doesn't mean that Intel won't revive the project when the time is right.

AMD MI300 to go unchallenged

Intel's decision not to pursue its combined CPU-GPU architecture leaves AMD's MI300, which follows a similar pattern and is expected to debut next month, largely unchallenged – more on that later.

AMD has been teasing the MI300 for months now. In January, the company offered its best look yet at the accelerated processing unit (APU) – AMD's preferred term for CPU-GPU architectures.

Based on the package shots shared by AMD in January, the chip will feature 24 Zen 4 cores – the same used in AMD's Epyc 4 Genoa platform from November – spread across two chiplets that are meshed together with six GPU dies and eight high-bandwidth memory modules good for a total of 128GB.

On the performance front, AMD claims the chip offers 8x the "AI performance" of the MI250X used in the Frontier supercomputer, while also achieving 5x greater performance per watt. According to our sister site The Next Platform, this would put the chip's performance on par with four MI250X GPUs when taking into account support for 8-bit floating point (FP8) mathematics with sparsity, and would likely put its power draw in the neighborhood of 900W.

If true, MI300A is shaping up to be one spicy chip that will almost certainly require liquid cooling to tame. This shouldn't be a problem for HPC systems, most of which use direct liquid cooling already, but could force legacy datacenters to upgrade their facilities or risk being left behind.
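The roughly 900W figure can be sanity-checked with back-of-the-envelope arithmetic: if performance rises 8x while performance per watt rises only 5x, power has to rise by 8/5. The sketch below is one plausible way to reach that neighborhood, not necessarily how The Next Platform arrived at its estimate. The 560W baseline for the MI250X is an assumption that does not appear in this article, and the function name is purely illustrative.

```python
# Back-of-the-envelope check only; these are not AMD-published power figures.

# ASSUMPTION: MI250X peak board power in watts. Not stated in this article.
MI250X_POWER_W = 560.0

PERF_GAIN = 8.0           # AMD's claimed "AI performance" uplift over the MI250X
PERF_PER_WATT_GAIN = 5.0  # AMD's claimed performance-per-watt uplift


def implied_power_watts(baseline_w, perf_gain, perf_per_watt_gain):
    """Power = performance / (performance per watt), so power
    scales by perf_gain / perf_per_watt_gain relative to the baseline."""
    return baseline_w * perf_gain / perf_per_watt_gain


estimate = implied_power_watts(MI250X_POWER_W, PERF_GAIN, PERF_PER_WATT_GAIN)
print(f"Implied MI300 power: about {estimate:.0f} W")  # prints "about 896 W"
```

Under that assumed baseline, the two vendor claims together imply a 1.6x increase in power, which is consistent with a chip that needs liquid cooling.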

Don't forget Grace Hopper

Technically speaking, AMD isn't the only one pursuing a combined CPU-GPU architecture for the datacenter. AMD will have some competition from Nvidia's Grace Hopper superchip, announced back in March last year.

However, MI300 and Grace Hopper are very different beasts. Nvidia's approach to this particular problem was to pair its 72-core Arm-compatible Grace CPU with a GH100 die using its proprietary 900GBps NVLink-C2C interconnect. While this eliminates PCIe as a bottleneck between the two components, they remain distinct, each with its own memory. The GH100 die has its own HBM3 memory while the Grace CPU is coupled to 512GB of LPDDR5, good for 500GBps of memory bandwidth.

The MI300A, on the other hand, looks to be an honest-to-goodness APU in which the CPU and GPU address the same HBM3 memory, without the need to copy data back and forth over an interconnect.

Which approach will deliver better performance, and in which workloads, remains to be seen, but it's a fight Intel will be sitting out. ®
