Inflection AI Enterprise Offering Ditches Nvidia GPUs For Intel's Gaudi 3

Inflection AI has revealed that its latest enterprise platform will ditch Nvidia GPUs for Intel's Gaudi 3 accelerators.

"While Inflection AI's Pi customer application was previously run on Nvidia GPUs, Inflection 3.0 will be powered by Gaudi 3 with instances on-premises or in the cloud powered by [the Tiber] AI Cloud," according to Intel.

Inflection AI got its start in 2022 as a model builder developing a conversational personal assistant called Pi. However, following the departure of key founders Mustafa Suleyman and Karén Simonyan for Microsoft this spring, the startup has shifted its focus to building custom fine-tuned models for enterprises using their own data.

The latest iteration of the startup's platform, Inflection 3.0, targets fine-tuning of its models on customers' own proprietary datasets, with the goal of building entire enterprise-specific AI apps. Intel itself will be one of the first customers to adopt the service, which does make us wonder whether Inflection is paying full price for the accelerators.

While Inflection will be running the service on Gaudi 3 accelerators, it doesn't appear it'll be racking up systems anytime soon. Much as Inflection 2.5 was hosted in Azure, the latest iteration will live in someone else's cloud, this time Intel's Tiber AI Cloud service.

The outfit does, however, see the need for physical infrastructure, at least for customers who would rather keep their data on-prem. Beginning in Q1 2025, Inflection plans to offer a physical system based on Intel's AI accelerators.

We'll note that just because the AI startup is using Gaudi 3 accelerators to power its enterprise platform, it doesn't mean customers are stuck with them to run their finished models.

AI model and software development isn't exactly cheap, and compared to Nvidia's H100, Intel's Gaudi 3 is a relative bargain. "By running Inflection 3.0 on Intel, we're seeing up to 2x improved price performance… compared with current competitive offerings," Inflection AI CEO Sean White wrote in a blog post on Monday.

And at least on paper, Gaudi 3 promises to be not only faster for training and inference than Nvidia's venerable H100, but cheaper too.

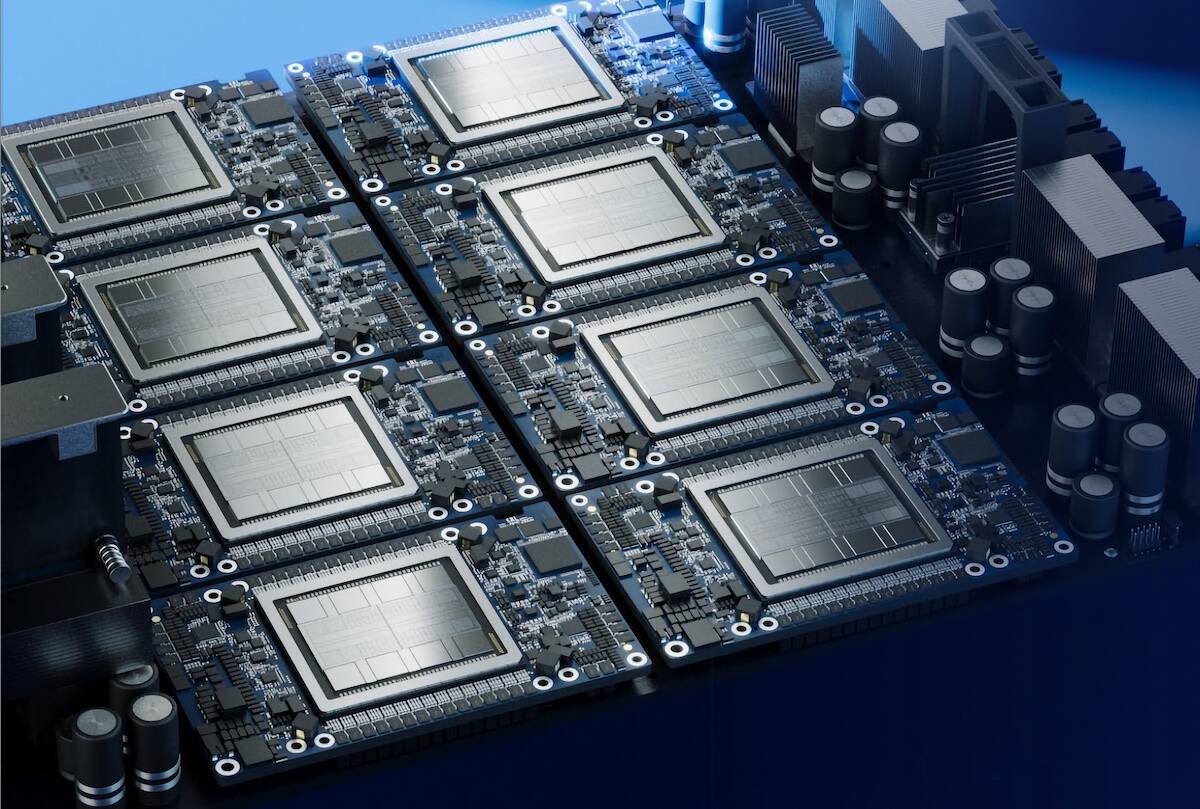

Announced at Intel Vision in April, Habana Labs' Gaudi 3 accelerators boast 128 GB of HBM2e memory, good for 3.7 TB/s of bandwidth, and 1,835 teraFLOPS of dense FP8 or BF16 performance.

While at 8-bit precision it's roughly on par with the H100, at 16-bit precision, it offers nearly twice the dense floating point perf, which makes a big difference for the training and fine-tuning workloads that Inflection is targeting.
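For a rough sense of those ratios, here's a back-of-the-envelope sketch. The Gaudi 3 figure is the one quoted above; the H100 numbers (roughly 1,979 dense FP8 teraFLOPS and 989 dense BF16 teraFLOPS for the SXM part) are our assumption, taken from Nvidia's public datasheet rather than from Intel or Inflection. Peak paper specs also say little about real-world throughput, so treat this as illustrative only.

```python
# Peak dense teraFLOPS, on paper only.
GAUDI3_TFLOPS = {"fp8": 1835, "bf16": 1835}  # figure quoted in this article
H100_TFLOPS = {"fp8": 1979, "bf16": 989}     # ASSUMPTION: Nvidia H100 SXM datasheet, dense


def peak_ratio(precision: str) -> float:
    """Return Gaudi 3's peak throughput as a multiple of the H100's."""
    return GAUDI3_TFLOPS[precision] / H100_TFLOPS[precision]


for precision in ("fp8", "bf16"):
    print(f"{precision}: {peak_ratio(precision):.2f}x")
```

Under those assumed H100 figures, the ratio works out to about 0.93x at FP8 and 1.86x at BF16.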

Intel is among the underdogs in the AI arena, and mainstream availability of the chip is rather poorly timed, coinciding with the launch of Nvidia's Blackwell and AMD's 288 GB MI325X GPUs, both of which are due out in Q4. As such, Intel is pricing its accelerators rather aggressively.

At Computex this spring, Intel revealed that a single Gaudi 3 system with eight accelerators would cost just $125,000, or about two-thirds the price of an equivalent H100 system, according to CEO Pat Gelsinger.
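The arithmetic behind that comparison can be sketched as follows. It treats "about two-thirds" as an exact ratio, which is our simplification, so the implied H100 system price is an estimate and not a figure Intel or Nvidia has quoted. Likewise, dividing the system price by eight gives the system cost per accelerator, not the price of a bare chip.

```python
from fractions import Fraction

GAUDI3_SYSTEM_PRICE = 125_000    # USD, eight-accelerator system, as quoted
ACCELERATORS_PER_SYSTEM = 8
PRICE_VS_H100 = Fraction(2, 3)   # ASSUMPTION: "about two-thirds" treated as exact


def implied_h100_system_price() -> Fraction:
    """Estimate the H100 system price implied by the two-thirds comparison."""
    return GAUDI3_SYSTEM_PRICE / PRICE_VS_H100


def system_price_per_accelerator() -> Fraction:
    """Spread the whole system price (chassis, CPUs and all) across the accelerators."""
    return Fraction(GAUDI3_SYSTEM_PRICE, ACCELERATORS_PER_SYSTEM)


print(f"Implied H100 system price: ${int(implied_h100_system_price()):,}")
print(f"Gaudi 3 system price per accelerator: ${int(system_price_per_accelerator()):,}")
```

That puts the implied H100 system at roughly $187,500 and the Gaudi 3 box at $15,625 per accelerator slot.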

Inflection isn't the only win Intel has notched in recent memory. In August, Big Blue announced it would deploy Intel's Gaudi 3 accelerators in IBM Cloud with availability slated for early 2025.

Going forward, IBM plans to extend support for Gaudi 3 to its watsonx AI platform. Meanwhile, Intel tells El Reg, the accelerator is already shipping to OEMs, including Dell Technologies and Supermicro.

While getting the major OEMs to take Gaudi seriously is a win for Intel, the future of the platform is anything but certain. As we previously reported, Gaudi 3 is the last hurrah for the Habana-Labs-developed accelerator.

Starting next year, Gaudi will give way to a GPU called Falcon Shores, which will fuse Intel's Xe graphics DNA with Habana's chemistry, leading to understandable questions about the migration path.

Intel has maintained that for customers coding in high-level frameworks like PyTorch, the migration will be mostly seamless. For those building AI apps at a lower level, the chipmaker has promised to provide additional guidelines prior to Falcon Shores' debut. ®
