IBM Asks UChicago, UTokyo For Help Building A 100K Qubit Quantum Supercomputer

IBM plans to spend $100 million to build, by 2033, a 100,000 qubit "quantum-centric supercomputer" allegedly capable of solving the world's most intractable problems, and it has tapped the Universities of Tokyo and Chicago for help.

Quantum computing today is a bit of a catch-22. The jury is still out as to whether the tech will ever amount to anything more than a curiosity – but if it does, nobody wants to be the last to figure it out. And IBM – which already plans to invest $20 billion into its Poughkeepsie, New York, campus to accelerate the development of, among other things, quantum computers – clearly doesn't want to be left behind.

If IBM is to be believed, its quantum supercomputer will be the foundation on which problems too complex for today's supercomputers might be solved. In a promotional video published Sunday, Big Blue claimed the machine might unlock novel materials, help develop more effective fertilizers, or discover better ways to sequester carbon from the atmosphere. You may have heard this before about Watson.

But before IBM can do any of that, it actually has to build a machine capable of wrangling 100,000 qubits – and then find a way to get the system to do something useful. This is by no means an easy prospect. Even if it can be done, the latest research suggests that 100,000 qubits may not be enough – more on that later.

IBM solicits help for quantum quest

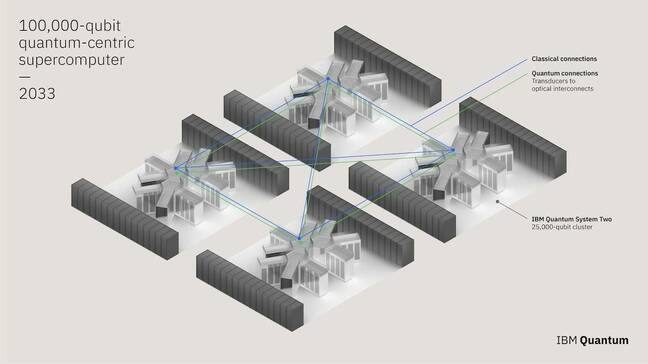

To put things in perspective, IBM's most powerful quantum system to date is called Osprey. It came online late last year and featured a whopping 433 qubits. At least as it's imagined today, the quantum part of IBM's quantum supercomputer will be made up of four 25,000 qubit clusters.

IBM's concept for a quantum supercomputer involves meshing classical computers with four 25,000 qubit clusters

To hit the stated 2033 timetable, IBM will need to increase the number of usable qubits by roughly 50 percent every year for the next decade just to get from Osprey's 433 qubits to a single 25,000 qubit cluster, and by more than 70 percent a year to reach the full 100,000. It will then have to build and connect those clusters using both quantum and classical networks.

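The situation may actually be worse. It appears that the system will be based on Big Blue's upcoming 133 qubit Heron system which, while smaller than Osprey, employs a two-qubit gate architecture IBM claims offers greater performance. Starting from 133 qubits rather than 433 means the qubit count would have to come close to doubling every year.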
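For readers who want to check the growth figures, here is a back-of-the-envelope sketch of the compound annual growth needed under a few starting points and targets. The qubit counts come from the article; the ten-year window is an assumption taken from "the next decade", and the function name is ours, not IBM's.

```python
def required_annual_growth(start_qubits: int, target_qubits: int, years: int) -> float:
    """Compound annual growth rate needed to go from start to target in `years`."""
    return (target_qubits / start_qubits) ** (1 / years) - 1


# Assumption: ten years of steady compounding, per "the next decade".
YEARS = 10

# Qubit counts as quoted in the article.
scenarios = {
    "Osprey (433) to one 25,000 qubit cluster": (433, 25_000),
    "Osprey (433) to the full 100,000 qubits": (433, 100_000),
    "Heron (133) to the full 100,000 qubits": (133, 100_000),
}

for label, (start, target) in scenarios.items():
    rate = required_annual_growth(start, target, YEARS)
    print(f"{label}: about {rate:.0%} per year")
```

Under these assumptions the three scenarios work out to roughly 50, 72, and 94 percent a year respectively. Real roadmaps rarely grow at a steady rate, so treat this as a sanity check rather than a forecast.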

To help IBM reach its 100,000 qubit goal, the biz has solicited the Universities of Tokyo and Chicago for help. The University of Tokyo will lead efforts to "identify, scale, and run end-to-end demonstrations of quantum algorithms." The university will also tackle supply chain and materials development for the components large-scale quantum systems need, such as cryogenics and control electronics.

Meanwhile, researchers at the University of Chicago will lead the effort to develop quantum-classical networks and apply them to hybrid-quantum computational environments. As we understand it, this will also involve the development of quantum middleware to allow workloads to be distributed across both classical and quantum compute resources.

To be clear, IBM's 100,000 qubit target is based entirely on its roadmap and the rate at which its boffins believe they can scale the system while also avoiding insurmountable roadblocks.

"We think that together with the University of Chicago and the University of Tokyo, 100,000 connected qubits is an achievable goal by 2033," the lumbering giant of big-iron computing declared in a blog post Sunday.

What good is a 100,000 qubit quantum computer?

Even if IBM and friends can pull it off and build their "quantum-centric" supercomputer, that doesn't necessarily mean it will be all that super. Building a quantum system is one thing – developing the necessary algorithms to take advantage of it is another entirely. In fact, numerous cloud providers, including Microsoft and OVH Cloud, have taken steps to help develop quantum algorithms and hybrid-quantum applications in preparation for when such utility-scale systems become available.

And according to a paper penned by researchers from Microsoft and the Scalable Parallel Computing Laboratory, there's reason to believe Big Blue's 100,000 qubit quantum computer might not be that useful.

The researchers compared a theoretical quantum system with 10,000 error-corrected qubits – or about a million physical qubits – to a classical computer equipped with a solitary Nvidia A100 GPU. The comparison revealed that for the quantum system to make sense, the algorithms involved need to achieve a greater-than-quadratic speedup.
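To see why the degree of speedup matters so much, consider a toy break-even model. This is our illustration, not the paper's method: it assumes the classical machine needs N operations, the quantum algorithm needs N^(1/d) operations for a degree-d speedup, and each machine runs at a fixed rate. The rates below are hypothetical placeholders, not figures from the paper or from IBM.

```python
def break_even_runtime(classical_ops_per_s: float,
                       quantum_ops_per_s: float,
                       degree: float) -> float:
    """Runtime in seconds at which the quantum machine draws level.

    Toy model: classical cost is N ops, quantum cost is N**(1/degree) ops.
    Setting N / r_c == N**(1/degree) / r_q gives the break-even problem size
    N* = (r_c / r_q) ** (degree / (degree - 1)), which takes N* / r_c seconds.
    """
    if degree <= 1:
        raise ValueError("with no speedup the quantum machine never catches up")
    ratio = classical_ops_per_s / quantum_ops_per_s
    n_star = ratio ** (degree / (degree - 1))
    return n_star / classical_ops_per_s


# Hypothetical placeholder rates, chosen only to show the trend.
CLASSICAL_RATE = 1e13  # classical operations per second (assumed)
QUANTUM_RATE = 1e3     # error-corrected quantum operations per second (assumed)

for degree in (2, 3, 4):
    seconds = break_even_runtime(CLASSICAL_RATE, QUANTUM_RATE, degree)
    print(f"degree-{degree} speedup: break-even after about {seconds:.3g} seconds")
```

In this model the break-even runtime shrinks sharply as the degree of speedup rises, which is the intuition behind asking for more than a quadratic advantage. The absolute numbers depend entirely on the assumed rates.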

Assuming IBM is talking about 100,000 physical qubits – it's not specified – that'd make its machine about one-tenth the size of the theoretical system described in the research paper. We've reached out to IBM for clarification and we'll let you know if we hear anything back.
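The size comparison can be sketched in a few lines. This assumes, as the article does, that IBM's figure refers to physical qubits, and it further assumes the same error-correction overhead implied by the paper's numbers would apply to IBM's hardware, which is not something IBM has said.

```python
def physical_per_logical(physical_qubits: int, logical_qubits: int) -> float:
    """Error-correction overhead implied by a physical/logical qubit pairing."""
    return physical_qubits / logical_qubits


def estimated_logical_qubits(physical_qubits: int, overhead: float) -> int:
    """Logical qubits available at a given overhead, rounded down."""
    return int(physical_qubits // overhead)


# Figures quoted from the paper: about a million physical qubits
# for 10,000 error-corrected qubits.
overhead = physical_per_logical(1_000_000, 10_000)

# Assumption: IBM's 100,000 qubits are physical and face the same overhead.
ibm_logical = estimated_logical_qubits(100_000, overhead)

print(f"Implied overhead: {overhead:.0f} physical qubits per error-corrected qubit")
print(f"IBM's machine at that overhead: about {ibm_logical:,} error-corrected qubits")
```

On those assumptions, a 100,000 physical qubit machine would offer on the order of 1,000 error-corrected qubits, a tenth of the system the researchers modeled.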

That said, there are some workloads that show promise – as long as the I/O bottlenecks can be overcome. While the researchers found that drug design, protein folding, and weather prediction are probably out of the question, chemical and material sciences could benefit from an adequately large quantum system.

So, IBM's pitch of using a quantum supercomputer to develop lower-cost fertilizers might not be the craziest idea ever. ®
