How Thermal Management Is Changing In The Age Of The Kilowatt Chip

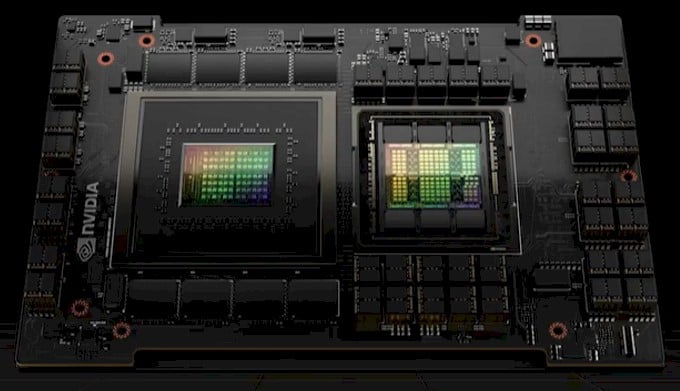

As Moore's Law has slowed to a crawl, chips, particularly those used in AI and high-performance computing (HPC), have steadily gotten hotter. In 2023 we saw accelerators enter the kilowatt range with the arrival of Nvidia's GH200 Superchips.

We've known these chips would be hot for a while now – Nvidia has been teasing the CPU-GPU franken-chip for the better part of two years. What we didn't know until recently is how OEMs and systems builders would respond to such a power-dense part. Would most of the systems be liquid cooled? Or, would most stick to air cooling? How many of these accelerators would they try to cram into a single box, and how big would the box be?

Now that the first GH200-based systems are making their way to market, it's become clear that form factor is being dictated by power density more than anything else. It essentially boils down to how much surface area you have to dissipate the heat.

Dig through the systems available today from Supermicro, Gigabyte, QCT, Pegatron, HPE, and others and you'll quickly notice a trend. Up to about 500 W per rack unit (RU), or 1 kW in the case of Supermicro's MGX ARS-111GL-NHR, these systems are largely air cooled. While hot, that's still a manageable thermal load, working out to about 21-24 kW per rack. That's well within the power delivery and thermal management capacity of modern datacenters, especially those using rear-door heat exchangers.
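
The 21-24 kW range follows directly from the per-RU figure and the height of the rack. Here's a minimal Python sketch, assuming a rack fully populated at a uniform 500 W/RU and common 42U and 48U rack heights; the rack sizes are our assumption, and real racks also give up space to switches and PDUs.

```python
# Rough rack-level power from a per-rack-unit power density.
# Assumption: every slot is filled with compute at the same density;
# real racks also hold switches, PDUs, and blanking panels.


def rack_power_kw(watts_per_ru: float, rack_units: int) -> float:
    """Return total rack power in kilowatts for a uniformly filled rack."""
    return watts_per_ru * rack_units / 1000


for units in (42, 48):  # common full-height rack sizes (assumed here)
    print(f"{units}U at 500 W/RU: {rack_power_kw(500, units):.0f} kW")
```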

However, this changes when system builders start cramming more than a kilowatt of accelerators into each chassis. At this point most of the OEM systems we looked at switched to direct liquid cooling. Gigabyte's H263-V11, for example, offers up to four GH200 nodes in a single 2U chassis.

That's two kilowatts per rack unit. So while a system like Nvidia's air-cooled DGX H100, with its eight 700 W H100s and twin Sapphire Rapids CPUs, has a higher TDP at 10.2 kW, it's actually less power dense at 1.2 kW/RU.
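
To make the density comparison concrete, here's a small Python sketch. It assumes each GH200 node draws about 1 kW and that the DGX H100 occupies an 8U chassis; both are assumptions for illustration rather than figures pulled from a spec sheet. The math gives 2 kW/RU for the four-node Gigabyte box and roughly 1.2 to 1.3 kW/RU for the DGX H100.

```python
def kw_per_ru(system_kw: float, rack_units: int) -> float:
    """Power density of a single chassis in kilowatts per rack unit."""
    return system_kw / rack_units


# Assumptions: each GH200 node draws ~1 kW; the DGX H100 occupies 8U.
gigabyte_h263 = kw_per_ru(4 * 1.0, 2)  # four GH200 nodes in a 2U chassis
dgx_h100 = kw_per_ru(10.2, 8)          # 10.2 kW system in an 8U chassis

print(f"Gigabyte H263-V11: {gigabyte_h263:.2f} kW/RU")
print(f"DGX H100:          {dgx_h100:.2f} kW/RU")
```

Total system power and power density answer different questions: the DGX H100 needs more power overall, but the GH200 box concentrates its heat into far less space.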

There are a couple of advantages to liquid cooling beyond a more efficient transfer of heat away from these densely packed accelerators. The higher the system power, the more static pressure and airflow you need to remove the heat. That means bigger, faster fans that draw more power, potentially as much as 20 percent of system power in some cases.
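
Why fan power climbs so quickly can be illustrated with the idealized fan affinity laws. These aren't figures from the vendors discussed here; they're the standard textbook relations for a given fan: airflow scales linearly with speed, static pressure with its square, and power with its cube. The Python sketch below applies them to a hypothetical fan normalized to 1.0 and ignores real fan curves and system impedance, so treat it as an illustration of the trend rather than a sizing tool.

```python
def scaled_fan(flow: float, pressure: float, power: float, speed_ratio: float):
    """Apply the idealized fan affinity laws for a change in fan speed.

    Airflow scales linearly with speed, static pressure with its square,
    and shaft power with its cube (same fan, same air density).
    """
    return (
        flow * speed_ratio,
        pressure * speed_ratio ** 2,
        power * speed_ratio ** 3,
    )


# Hypothetical baseline fan normalized to 1.0 airflow, pressure, and power.
flow, pressure, power = scaled_fan(1.0, 1.0, 1.0, speed_ratio=1.5)
print(f"1.5x speed -> {flow:.2f}x airflow, {pressure:.2f}x pressure, {power:.2f}x power")
```

Under these idealized laws, spinning the same fan 50 percent faster buys 50 percent more airflow but more than triples its power draw.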

Beyond about 500 W per rack unit, most OEMs and ODMs seem to be opting for liquid-cooled chassis, since the fans then only have to cool lower-power components like NICs, storage, and other peripherals, so fewer, slower ones will do.

You only have to look at HPE's Cray EX254n blades to see just how much heat a liquid-cooled chassis can handle. That platform can support up to four GH200s, potentially 4 kW in a 1U compute blade, and that's not even counting the NICs used to keep the chips fed with data.

Of course, the folks at HPE's Cray division do know a thing or two about cooling ultra-dense compute components. It does, however, illustrate the amount of thought systems builders put into their servers, not just at a systems level but at the rack level.

Rack level takes off

As we mentioned before with the Nvidia DGX H100 systems, cooling a multi-kilowatt server on its own is something OEMs are well acquainted with. But as soon as you try and fill a rack with these systems, things become a bit more complicated with factors like rack power and facility cooling coming into play.

We dove into the challenges that datacenter operators like Digital Realty have had to overcome to support dense deployments of these kinds of systems over on our sibling publication, The Next Platform.

In many cases, the colocation provider needed to rework its power and cooling infrastructure to support the 40-plus kilowatts of power and heat that come with packing four of these DGX H100 systems into a single rack.

But if your datacenter or colocation provider can't supply that kind of power per rack or contend with the heat, there's not much sense in making these systems this dense when most of the rack is going to sit empty.
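
The trade-off boils down to a simple capacity check: a rack holds as many systems as the tighter of two limits allows, the facility's per-rack power budget or the physical space. The Python below is a hypothetical illustration assuming a 42U rack, the DGX H100's 10.2 kW rating, and an assumed 8U chassis; the budgets are made-up values, and it ignores networking gear and power-delivery overhead.

```python
import math


def systems_per_rack(rack_budget_kw: float, system_kw: float,
                     rack_units: int, system_ru: int) -> int:
    """Systems that fit in one rack, limited by whichever runs out first:
    the per-rack power budget or the physical rack space."""
    by_power = math.floor(rack_budget_kw / system_kw)
    by_space = rack_units // system_ru
    return min(by_power, by_space)


# Hypothetical per-rack budgets for a 42U rack of 10.2 kW, 8U systems.
for budget in (15, 25, 45):
    n = systems_per_rack(budget, 10.2, 42, 8)
    print(f"{budget} kW/rack -> {n} systems, {42 - n * 8}U left empty")
```

With only 15 kW per rack, a single system fits and 34U sit empty; it takes 40-plus kW before four of them share a rack.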

With the launch of the GH200, we've seen Nvidia focus less on the individual systems and more on rack-scale deployments. We caught our first glimpse of this during Computex this spring with its DGX GH200 cluster.

Rather than a bunch of dense GPU-packed nodes, the system is actually made up of 256 nodes, each a 2U chassis with a single GH200 accelerator inside. Combined, the system is capable of pumping out an exaFLOPS of FP8 performance, but it should be much easier to deploy at a facilities level. Instead of 1.2 kW/RU, you're now looking at closer to 500 W/RU, which is right about where most OEMs are landing with their own air-cooled systems.
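
Dividing the DGX GH200's headline numbers across its nodes shows where the 500 W/RU figure comes from. This Python sketch assumes the exaFLOPS is spread evenly across all 256 superchips and that each GH200 draws roughly 1 kW; both are simplifications for illustration.

```python
# Assumptions: the ~1 exaFLOPS FP8 figure is split evenly across all
# superchips, and each GH200 draws about 1 kW.
NODES = 256
SYSTEM_FP8_FLOPS = 1e18  # "an exaFLOPS of FP8 performance"
NODE_POWER_W = 1000      # assumed per-GH200 power draw
NODE_HEIGHT_RU = 2       # one GH200 per 2U node

per_node_pflops = SYSTEM_FP8_FLOPS / NODES / 1e15
density_w_per_ru = NODE_POWER_W / NODE_HEIGHT_RU

print(f"~{per_node_pflops:.1f} PFLOPS FP8 per GH200")
print(f"{density_w_per_ru:.0f} W/RU per node")
```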

More recently, we've seen Nvidia condense a smaller version of this down to a single rack with the GH200 NVL32, announced in collaboration with AWS at re:Invent this fall.

That system packs sixteen 1U chassis, each equipped with two GH200 nodes, into a single rack and meshes them together using nine NVLink switch trays. Needless to say, at 2 kW/RU of compute, these are dense little systems, and they were designed to be liquid cooled from the get-go.
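
The same back-of-the-envelope arithmetic for the GH200 NVL32 rack, again assuming about 1 kW per GH200 and leaving the nine NVLink switch trays out of the power figure since we don't have a number for them:

```python
CHASSIS = 16
SUPERCHIPS_PER_CHASSIS = 2
CHASSIS_RU = 1
GH200_KW = 1.0           # assumed per-superchip power draw
NVLINK_SWITCH_TRAYS = 9  # present in the rack but not counted in power below

superchips = CHASSIS * SUPERCHIPS_PER_CHASSIS
compute_kw = superchips * GH200_KW
compute_kw_per_ru = compute_kw / (CHASSIS * CHASSIS_RU)

print(f"{superchips} GH200s, {compute_kw:.0f} kW of compute, "
      f"{compute_kw_per_ru:.1f} kW/RU (excluding {NVLINK_SWITCH_TRAYS} switch trays)")
```

That's 32 kW of superchips in just 16U, already above the 21-24 kW air-cooled racks described earlier before the switch trays are counted.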

Hotter chips on the way

While we've focused on Nvidia's Grace Hopper Superchips, the chipmaker is hardly the only one pushing TDPs to new limits in pursuit of performance and efficiency.

Earlier this month, AMD spilled the tea on its latest AI and HPC GPUs and APUs, which see the company's Instinct accelerators jump from 560 W last gen to as high as 760 W. That's still not the kilowatt of power demanded by the GH200, but it's a sizable increase.

More importantly, AMD CTO Mark Papermaster told The Register that there's still plenty of headroom to push TDPs even higher over the next few years.

Whether this will eventually drive chipmakers to mandate liquid cooling for their flagship parts remains an open question. According to Papermaster, AMD is going to support both air and liquid cooling on its platforms. But as we've seen with AMD's new MI300A APU, sticking with air cooling will almost certainly mean performance concessions.

The MI300A is nominally rated for 550 W. That's far less than the 850 W we'd been led to believe it'd suck down under load, but given adequate cooling, it can run even hotter. In an HPC-tuned system, like those developed by HPE, Eviden (Atos), or Lenovo, the chip can be configured to run at 760 W.

Intel, meanwhile, is exploring novel methods for cooling 2 kW chips using two-phase coolants and coral-inspired heatsinks designed to promote the formation of bubbles.

The chipmaker has also announced extensive partnerships with infrastructure and chemical vendors to expand the use of liquid cooling technologies. The company's latest collab aims to develop a cooling solution for Intel's upcoming Gaudi3 AI accelerator using Vertiv's pumped two-phase cooling tech. ®