From Vacuum Tubes To Qubits – Is Quantum Computing Destined To Repeat History?

The early 1940s saw the first vacuum tube computers put to work solving problems beyond the scope of their human counterparts. These massive machines were complex, specific, and generally unreliable.

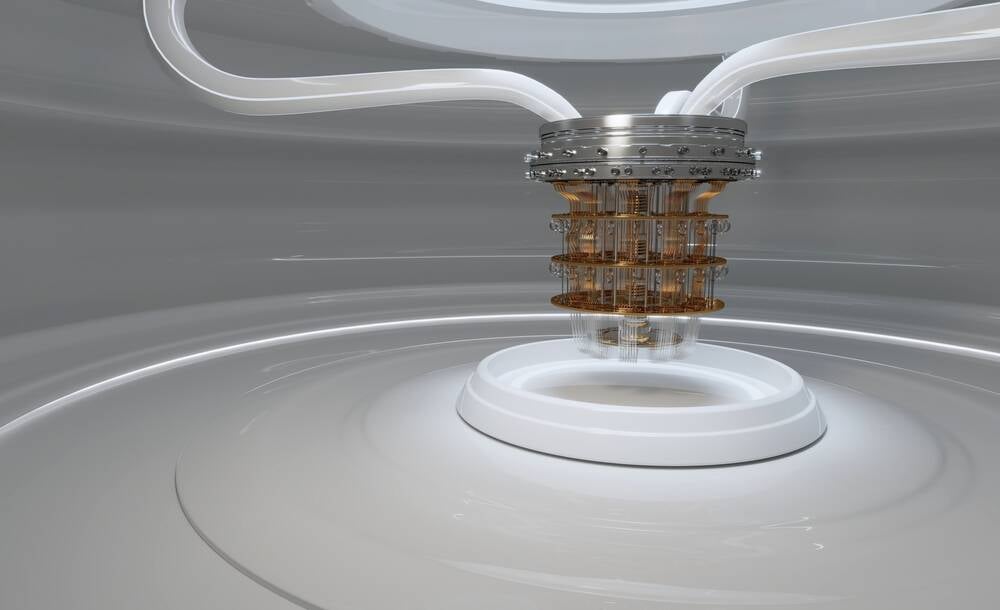

In many respects, today's quantum systems bear remarkable similarities to early vacuum tube computers in that they're also incredibly expensive, specialized, and not intuitive.

Later computers like UNIVAC I in 1951 or the IBM 701 presented the possibility of a competitive advantage for the few companies with the budgets and expertise necessary to deploy, program, and maintain such beasts. According to Gartner analyst Matthew Brisse, a similar phenomenon is taking place with quantum systems today as companies seek to eke out efficiencies by any means necessary.

Quantum supremacy – that is, scenarios where quantum systems outperform classical computers – is a topic of ongoing debate. But Brisse emphasizes "there is not a single thing that quantum can do today that you can't do classically."

However, he notes that by combining classical and quantum computing, some early adopters – particularly in the financial and banking industry – have been able to achieve some kind of advantage over classical computing alone. Whether these advantages rise to the level of a competitive edge isn't always clear, but it does contribute to a fear that those who don't invest early may risk missing out.

Quantum FOMO is real

Should history repeat itself, as it so often does, it will be the early adopters of quantum systems who'll stand to gain the most, hence the FOMO. But is that fear well placed?

Governments, for example, have poured a significant amount into the possibility that quantum will materialize as a true competitive threat without having the "killer app" for quantum defined yet. Earlier this year, the Defense Advanced Research Projects Agency, better known as DARPA, launched the Underexplored Systems for Utility-Scale Quantum Computing (US2QC) initiative to speed the development and application of quantum systems. The idea behind it is that if a quantum system becomes capable of cracking modern encryption the way Colossus did to the German cyphers all those years ago, Uncle Sam doesn't want to be left playing catch up.

Whether encryption-cracking quantum systems are something we actually have to worry about is still open for debate, but the same logic applies to enterprises – especially those looking to get a leg up on their competitors in the medium to long term.

"It's not about what you can get today. It's about getting ready for innovations that are going to happen next," according to Brisse. "We are out of the lab and we are now looking at commercialization."

This is why companies like Toyota, Hyundai, BBVA, BASF, ExxonMobil and others have teamed up with quantum computing vendors on the off chance the tech can help develop better batteries, optimize routes and logistics, and/or reduce investment risk.

But while commercialization of quantum computing may be underway, recent developments around generative AI may end up hampering adoption of the tech – at least in the short term.

Brisse notes that most CIOs are looking to invest in technologies with relatively short returns on investment. With GPUs and other accelerators used to power AI models, they can expect near-term results, while quantum computing remains a long-term investment.

Still, while Brisse says he hasn't seen enterprises abandon their quantum investments, he's certainly seen a shift in priority toward generative AI.

Quantum comparisons are a bit of a mess

Making matters worse for those trying to get hands-on with quantum, cross-shopping systems can be a bit of a minefield.

There are dozens of vendors claiming to offer quantum services on systems ranging anywhere from a few dozen qubits to thousands of them. While this might seem like an obvious metric to judge the maturity and performance of a quantum system, it really depends on a number of factors – including things like decoherence and the quality of the qubits themselves.
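To see why raw qubit count can mislead, here is a rough back-of-the-envelope sketch in Python. It assumes a deliberately simplified model in which every gate succeeds independently with the same fidelity, so a circuit of n gates succeeds with probability of roughly fidelity to the power n. The function name `max_gates` and the two systems compared are hypothetical illustrations, not figures from any vendor or from Brisse.

```python
import math


def max_gates(gate_fidelity: float, target_success: float = 0.5) -> int:
    """Largest gate count n such that gate_fidelity**n >= target_success.

    Simplified model (an assumption): each gate fails independently,
    and no error correction is applied.
    """
    if not 0.0 < gate_fidelity < 1.0:
        raise ValueError("gate_fidelity must be strictly between 0 and 1")
    if not 0.0 < target_success < 1.0:
        raise ValueError("target_success must be strictly between 0 and 1")
    return math.floor(math.log(target_success) / math.log(gate_fidelity))


# Hypothetical systems: (qubit count, per-gate fidelity). Made-up numbers.
systems = {
    "many noisy qubits": (1000, 0.99),
    "fewer cleaner qubits": (50, 0.999),
}

for name, (qubits, fidelity) in systems.items():
    print(f"{name}: {qubits} qubits, about {max_gates(fidelity)} gates "
          "before success odds drop below 50%")
```

Under this toy model, the smaller machine can run a circuit roughly ten times longer before the result becomes a coin flip, which is one reason qubit count alone says little about what a system can usefully compute.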

We liken this to the "core wars" on modern processors. Cores on an Intel CPU are going to have vastly different performance characteristics compared to CUDA cores on an Nvidia GPU. Depending on what you're doing, a job that might run just fine on a handful of Intel cores might require thousands of CUDA cores – if it runs at all.

The same is true of quantum systems, which are often optimized to specific workloads. For example, Brisse argues that an IBM system might perform better at computational chemistry, while D-Wave systems may be better tuned for optimization tasks like route planning. "Different quantum systems solve quantum problems differently," he explained.

The high cost and often exotic conditions – like near-absolute-zero operating temperatures – mean that many quantum systems up to this point have been rented in a cloud-like "as-a-service" fashion. However, some providers, like IonQ, have recently teased rackmount quantum systems that can be deployed in enterprise datacenters. It remains to be seen when these "forthcoming" machines will actually ship.

With that said, Brisse doesn't see much benefit to on-prem deployments apart from latency-sensitive applications just yet. He expects most on-prem deployments will focus on scientific research – likely in conjunction with high performance computing deployments.

We've already seen this to a degree with Europe's Lumi supercomputer, which got a small quantum computing upgrade last autumn.

For Brisse, this research remains important for moving quantum computing beyond conventional problems.

"Today, we're only solving classical problems quantumly, but real innovation … is going to come when we solve quantum problems quantumly with quantum algorithms," he opined. "That I believe is the big 'a-ha' in quantum: not whether we can go faster, whether we can actually solve new classes of problems."
