Engineers Are Supposed To Solve Difficult Problems. Waiting In A Queue For Time On The Supercomputer Shouldn't Be One Of Them

Sponsored Feature High performance computing (HPC) continually sharpens the cutting edge of technology. Tackling the biggest problems in science or industry, whether at planetary or micro scale, is by definition going to drive innovation in all aspects of computer infrastructure and the software that runs on it.

The engineers and admins managing HPC systems often perform a delicate balancing act, matching the capacity available to them with the demands of researchers or developers eager to solve their particular challenges. And as those problems get bigger and more complex, the associated workloads and datasets become ever larger and more complex too.

This makes it increasingly difficult for traditional on-premises architectures to keep up. While datasets might be expanding all the time, there are hard limits on just how much work any given system can deliver, even if it is running 24 hours a day.

Different team priorities compete for those resources. While we can assume that developers and scientists are always going to want more power, things become particularly fraught as they get closer to a launch or prepare to put a project or product into production. Running an on-premises system at full capacity doesn't leave room for agility or responsiveness.

What is more, those varied workloads all have slightly different requirements, meaning HPC system administrators and architects can have the additional overhead of tuning and optimizing their installations accordingly. That's on top of monitoring, power management, and of course security, a particular concern where HPC workloads incorporate extremely valuable IP.

Even if HPC system administrators have the budget for upgrades, they face the mundane challenge of obtaining the appropriate, cutting-edge equipment in the first place. Long procurement cycles slow down projects and leave them exposed to price hikes. In the meantime, operators are left little choice but to rely on legacy systems for longer to get the results they need. The cloud provides an alternative, with the promise of scalability and agility, as well as more predictable and flexible pricing.

But as we've seen, HPC workloads are incredibly varied. Some are compute intensive, meaning raw CPU performance is the key element for engineers. Others are data intensive, meaning storage, IO, and scalability are more important factors. And some problems combine both requirements. Finite element analysis (FEA), for example, tackles problems around fluids and solids. Solving for solids is memory heavy, while solving for fluids is compute intensive.

Finite resources

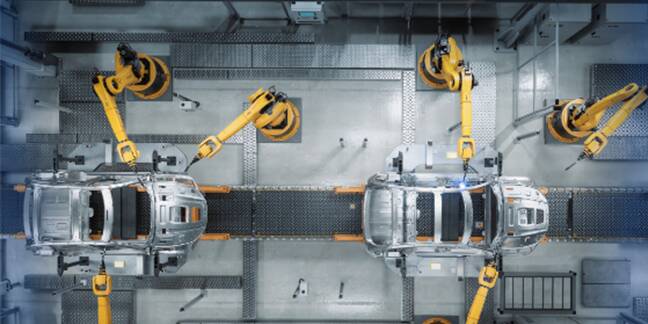

FEA is critical in any form of engineering, from major infrastructure, such as wind turbines, down to medical devices for use in the human body. It is central to vehicle crash test simulations - now even more of a priority with the shift to electric vehicles that present different safety challenges due to the location of batteries and other components. Likewise, as nations look to upgrade their energy infrastructure, seismic workloads and simulations are more important than ever.

All of which means that generic, undifferentiated compute instances are unlikely to appeal to scientists and engineers looking to tune their FEA workloads to get as many answers as quickly as possible. It is for this reason that the most recent re:Invent conference saw AWS unveil new HPC-optimized instances specifically for FEA workloads, offering a varied menu of underlying compute including CPUs, GPUs and FPGAs as well as DRAM, storage and IO.

For workloads such as FEA, which are challenging both from a data and compute perspective, AWS has designed Amazon EC2 Hpc6id instances around Intel's 3rd Gen Intel® Xeon® Scalable processors, giving each instance up to 64 physical cores running at up to 3.5GHz.

The Intel architecture features Advanced Vector Extensions 512 (Intel® AVX-512) which accelerate high performance workloads, including cryptographic algorithms, scientific simulations, and 3D modeling and analysis. It also removes the need to offload certain workloads from the CPU to dedicated hardware.
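To make the "512" concrete, the following minimal Python sketch works out how many floating-point values fit in one 512-bit vector register. It is arithmetic on the register width only; the helper name `lanes_per_register` is made up for illustration, and any real speedup depends on the application, the compiler, and memory behavior.

```python
# Illustrative arithmetic only: how many values fit in one 512-bit vector register.
AVX512_REGISTER_BITS = 512


def lanes_per_register(element_bits: int, register_bits: int = AVX512_REGISTER_BITS) -> int:
    """Return how many elements of the given width fit in one vector register."""
    if element_bits <= 0 or register_bits % element_bits != 0:
        raise ValueError("element width must be positive and divide the register width")
    return register_bits // element_bits


if __name__ == "__main__":
    # Hypothetical helper; the element widths are the standard IEEE 754 sizes.
    for name, bits in (("float64", 64), ("float32", 32)):
        print(f"{name}: {lanes_per_register(bits)} values per AVX-512 register")
```

In other words, a single vector instruction can operate on eight double-precision or sixteen single-precision values at once, which is why simulation codes that already vectorize well tend to benefit.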

Likewise, Intel's oneAPI Math Kernel Library (oneMKL) is optimized for scientific computing. It helps developers fully exploit the core count through better optimization and parallelization, boosting scientific and engineering applications. Bearing in mind the high likelihood of HPC workloads involving sensitive data and IP, EC2 Hpc6id instances also feature Intel's Total Memory Encryption (Intel® TME).

Intel TME encrypts the system's entire memory with a single transient key, ensuring all data passing between memory and the CPU is protected against physical memory attacks.

Because EC2 Hpc6id instances are powered by Intel architecture, engineers can draw on what they already know about technologies like Intel AVX-512. Plenty of applications have been written to utilize it, so if a software package already uses it, engineers do not have to make modifications.

EC2 Hpc6id instances include up to 15.2TB of local NVMe storage to support data-intensive workloads. With HPC workloads it is not just a question of having "enough" storage; it has to be fast enough to keep the processors fully loaded with data and to absorb their output quickly. This is matched by 1TB of memory, with up to 5GB/s of memory bandwidth per vCPU, which further speeds processing of the massive datasets these sorts of problems require.
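As a rough back-of-envelope reading of those figures, the Python sketch below divides the quoted memory across the cores and multiplies the per-vCPU bandwidth figure up to a whole instance. It assumes one vCPU per physical core (64 in total) and reads "1TB" as 1,024 GiB; both are assumptions made for illustration, and the result is an upper bound rather than a measured figure.

```python
# Back-of-envelope sizing from the figures quoted in the article.
TOTAL_MEMORY_GIB = 1024        # assumption: the quoted 1TB read as 1,024 GiB
VCPUS = 64                     # assumption: one vCPU per physical core
BANDWIDTH_PER_VCPU_GBS = 5.0   # quoted as an "up to" figure


def memory_per_core_gib(total_memory_gib: float, cores: int) -> float:
    """Memory available to each core if it is shared out evenly."""
    if cores <= 0:
        raise ValueError("cores must be positive")
    return total_memory_gib / cores


def aggregate_bandwidth_gbs(per_vcpu_gbs: float, vcpus: int) -> float:
    """Upper bound on instance-wide memory bandwidth, in GB/s."""
    if vcpus <= 0:
        raise ValueError("vcpus must be positive")
    return per_vcpu_gbs * vcpus


if __name__ == "__main__":
    print(f"Memory per core: {memory_per_core_gib(TOTAL_MEMORY_GIB, VCPUS):.0f} GiB")
    print(f"Aggregate bandwidth (upper bound): "
          f"{aggregate_bandwidth_gbs(BANDWIDTH_PER_VCPU_GBS, VCPUS):.0f} GB/s")
```

Under those assumptions each core has about 16 GiB of memory to itself, which is the kind of ratio memory-heavy solvers look for.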

HPC in an instance

It is a combination that delivers an incredible amount of power in a single instance. But because these workloads are distributed, they run across multiple instances that need to communicate with each other, which is where the AWS 200Gbps interconnect comes in.

That interconnect is based on AWS' Elastic Fabric Adapter (EFA) network interface, powered by AWS' Nitro System which offloads virtualization functions to dedicated hardware and software, further boosting performance and scalability.

Customers can also reap the advantage of AWS' own massive scale. They can run their EC2 Hpc6id instances in a single Availability Zone, for example, improving node-to-node communications and further shaving latency. They can use AWS ParallelCluster, AWS' cluster management tool, to provision EC2 Hpc6id instances alongside other AWS instances in the same cluster, further extending the ability to run multiple workloads, or parts of workloads, on the most appropriate instance. And it works with batch schedulers such as AWS Batch, which many of these clusters require.

Customers also get the opportunity of accessing AWS' other applications and services. This ranges from help with setting up their HPC infrastructure, through boosting resilience with secure, extensive, and reliable AWS Global Infrastructure, to taking advantage of AWS' visualization applications to help them make sense of the results their HPC runs produce.

In performance terms, Amazon EC2 Hpc6id instances deliver up to 2.2X better price-performance over comparable x86-based instances for data-intensive HPC workloads, such as finite element analysis (FEA).

There is a software licensing benefit as well, as the software packages used for HPC workloads are typically priced by node. If engineers can get the same job done with fewer nodes, as they can with EC2 Hpc6id instances, there are both time and cost savings. And by being able to run more analysis in less time, they can simply do more simulation.
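The licensing argument can be sketched with a little arithmetic. The Python example below models a job's cost as nodes multiplied by hours multiplied by the combined instance and license rates. Every number and name in it is hypothetical (these are not AWS or vendor prices), and it assumes the license is charged per node-hour, which is only one of several ways per-node licensing can work.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterRun:
    """One simulation job; all rates are hypothetical, in dollars per node-hour."""
    nodes: int
    hours: float
    instance_rate: float
    license_rate: float

    def total_cost(self) -> float:
        # Both compute and licensing scale with the number of nodes used.
        return self.nodes * self.hours * (self.instance_rate + self.license_rate)


def savings(baseline: ClusterRun, candidate: ClusterRun) -> float:
    """Positive when the candidate run is cheaper than the baseline."""
    return baseline.total_cost() - candidate.total_cost()


if __name__ == "__main__":
    # Hypothetical scenario: the same job finishes in the same time on half the nodes.
    baseline = ClusterRun(nodes=8, hours=10.0, instance_rate=3.0, license_rate=2.0)
    candidate = ClusterRun(nodes=4, hours=10.0, instance_rate=3.0, license_rate=2.0)
    print(f"Baseline cost:  ${baseline.total_cost():.2f}")
    print(f"Candidate cost: ${candidate.total_cost():.2f}")
    print(f"Savings:        ${savings(baseline, candidate):.2f}")
```

Because the license term is multiplied by the node count, halving the nodes halves the licensing bill as well as the compute bill in this simplified model.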

And this has a very real-world impact, because at some point the system being simulated, whether a medical device, a car, a turbine blade, or a reservoir, has to be built and physically tested in the real world. By running more analysis and simulation on AWS more quickly, engineers are able to narrow the cases for real-world physical testing and carry these out with more precision.

Sponsored by AWS.
