China's GPU Contender Moore Threads Reveals Card That Can Cope With Nvidia's CUDA

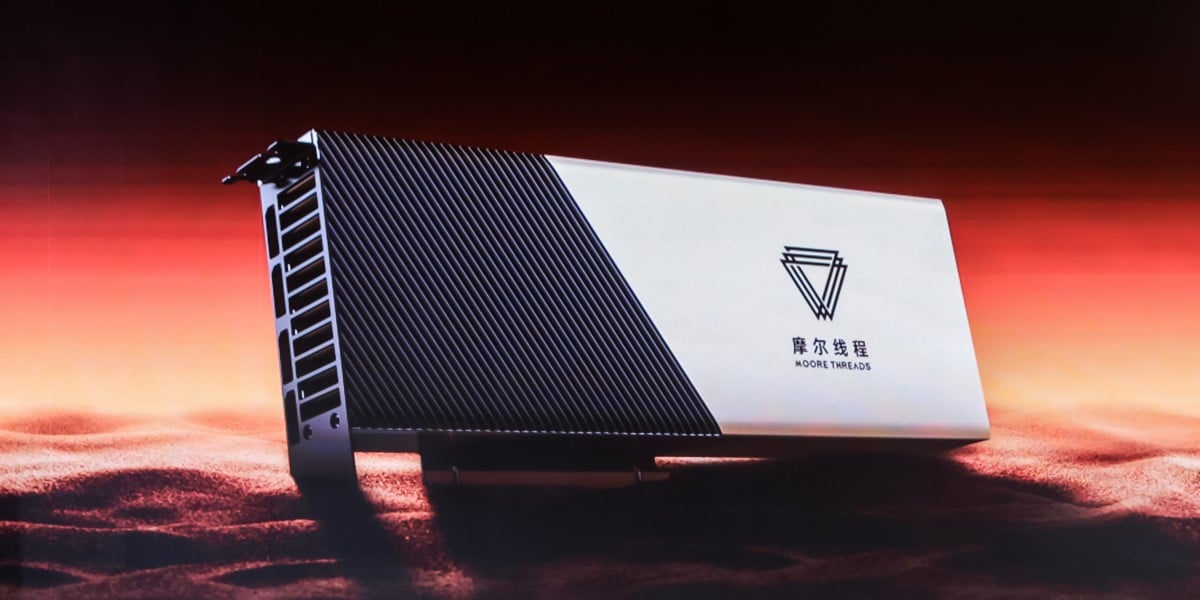

Moore Threads, a Chinese purveyor of GPUs, has unveiled its mightiest model to date – and it may even give market leader Nvidia a little to worry about.

The MTT S4000 packs 48GB of video memory and 768GB/sec of video memory bandwidth on each card. But Moore Threads hasn't detailed core count or frequency, saying only that its homebrew Moore Threads Unified System Architecture (MUSA) powers the device. MUSA is said to be compatible with both x86 and Arm, but the silicon slinger has been cagey about its exact capabilities and composition.

Moore Threads rates the GPU's FP32 performance at 25 TFLOPS, and INT8 capabilities at 200 TOPS.

Those are numbers that won't worry Nvidia, or make Intel and AMD feel they've fallen behind.

Not that any of the three big US chipmakers have much reason to worry about Moore Threads, given the Chinese company was added to the US's entity list of companies that are persona non grata stateside. Moore Threads products are not going to appear on the shelves at Best Buy any time soon, and major hyperscalers won't want them either.

- There's no Huawei Chinese chipmakers can fill Nvidia's shoes... anytime soon

- US willing to compromise with Nvidia over AI chip sales to China

- US lawmakers want blanket denial for sensitive tech export licenses to China

- Nvidia sees Huawei, Intel in rear mirror as it grapples with China ban

The upstart chip shop may, however, irritate Nvidia with its "MUSIFY" tool that's promised to allow easy migration of CUDA code to the MTT S4000. CUDA is Nvidia's flagship development environment for GPU-centric apps, and the company does enjoy selling integrated bundles. Patriotic Chinese developers porting code to Moore Threads is entirely plausible.

As are scenarios in which Chinese devs use the devices to build AI services: the MTT S4000 supports the LLaMA and GPT models, plus many more.

The Chinese company also revealed a "kilocard cluster" that puts 1,000 of its GPUs in harness, and claimed that China's Zhiyuan Research Institute has used it to train a 70-billion-parameter model in 33 days, while a 130-billion-parameter model would be done in 56 days.
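For a rough sense of scale, the quoted figures can be combined with some back-of-the-envelope arithmetic. The sketch below is illustrative only: the function names are made up for this example, the inputs are Moore Threads' own claimed numbers, the throughput is a theoretical peak rather than anything a real training run would sustain, and decimal units (1,000 GB per TB) are assumed.

```python
# Back-of-the-envelope arithmetic on the vendor-claimed figures in the article.
# All names here are hypothetical; nothing below is measured performance.

CARDS = 1_000        # GPUs in one "kilocard cluster"
FP32_TFLOPS = 25     # claimed peak FP32 throughput per card
MEMORY_GB = 48       # video memory per card


def cluster_peak_pflops(cards: int = CARDS, tflops: float = FP32_TFLOPS) -> float:
    """Theoretical peak FP32 throughput of the whole cluster, in PFLOPS."""
    return cards * tflops / 1_000


def cluster_memory_tb(cards: int = CARDS, gb: float = MEMORY_GB) -> float:
    """Total video memory across the cluster, in decimal terabytes."""
    return cards * gb / 1_000


def gpu_days(days: float, cards: int = CARDS) -> float:
    """GPU-days consumed by a run that occupies every card for `days` days."""
    return days * cards


def days_per_billion_params(days: float, params_billion: float) -> float:
    """Claimed training days divided by model size in billions of parameters."""
    return days / params_billion


if __name__ == "__main__":
    print(f"Peak FP32: {cluster_peak_pflops()} PFLOPS")
    print(f"Total memory: {cluster_memory_tb()} TB")
    for size, days in [(70, 33), (130, 56)]:
        ratio = days_per_billion_params(days, size)
        print(f"{size}B model: {gpu_days(days):,} GPU-days, "
              f"{ratio:.3f} days per billion parameters")
```

On the claimed numbers, the two runs work out to a similar number of days per billion parameters, which suggests the 130-billion-parameter estimate assumes roughly linear scaling from the 70-billion-parameter run.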

China's major clouds are all-in on AI. As US chip sanctions bite, perhaps Moore Threads will be able to sell them some kilocard clusters. But with the likes of Baidu having stockpiled sufficient accelerators to sustain its AI chatbot for a year or more, and forbidden Nvidia kit constantly crossing the border, the Chinese GPU-maker will need to accelerate its accelerators just to catch up. ®
