AMD Tries To Spoil Nvidia's Week By Teasing High-end Accelerators, Epyc Chips With 3D L3 Cache, And More

AMD teased a bunch of enterprise-class developments today, from claiming the AI and HPC accelerator crown to word of 3D-stacked cache, all perhaps to spoil rival Nvidia's forthcoming GPU conference.

Here's a summary of what AMD said on Monday ahead of Nvidia's GTC this week:

3D-stacked Level 3 cache

AMD said it has crafted a server microprocessor code-named Milan-X, which is a third-generation Epyc with a layer of SRAM stacked on top of dies within the IC package. It's likely Milan-X will, as far as customers are concerned, be a handful of SKUs extending today's 7nm Epyc 7003s, and will sport up to 64 cores per socket without any architectural changes.

The added layer of memory in the Milan-X is essentially a vertically positioned L3 cache that AMD calls the V-Cache. The chip designer gave us a glimpse of this approach in June, albeit with the RAM stacked in a Ryzen prototype part. AMD co-designed the 3D structure with TSMC, which manufactures the components.

The additional layer of L3 cache sits right over the L3 cache on the CPU dies, adding 64MB of SRAM to the 32MB below, which results in a roughly 10 per cent increase in overall cache latency. AMD said the additional cache will increase performance of the processor for certain engineering and scientific workloads, at least.

The Milan-X can be a drop-in replacement for third-gen Epycs, with some BIOS updates required to make use of the added technology, according to AMD. Microsoft's Azure cloud is the first to offer access to these stacked processors in a private preview, and Microsoft is expected to widen this service over the next few weeks.

If you want to get your hands on the physical silicon, be aware it is not due to launch until the first quarter of next year. Cisco, Dell, Lenovo, HPE, Supermicro, and others are expected to sell data-center systems featuring the chips.

For a top-end Milan-X SKU, you're looking at up to 768MB of L3 cache total per socket, or 1.5GB per dual-socket system.
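For those who like to check the sums, here is a rough sketch of how those totals fall out of the per-die figures. The 32MB and 64MB values come from AMD's description above; the count of eight CPU dies per top-end socket is our assumption, based on how today's 64-core Epyc 7003 parts are laid out, and the function names are purely illustrative.

```python
# Back-of-the-envelope L3 cache totals for a top-end Milan-X part.
# BASE_L3_MB and STACKED_L3_MB are the per-die figures quoted in the article.
# CCDS_PER_SOCKET is an assumption (eight CPU dies in a 64-core package),
# not a number AMD gave in this announcement.
BASE_L3_MB = 32        # L3 already on each CPU die
STACKED_L3_MB = 64     # V-Cache SRAM stacked on top of each die
CCDS_PER_SOCKET = 8    # assumed number of CPU dies per socket


def l3_per_socket_mb(ccds: int = CCDS_PER_SOCKET) -> int:
    """Total L3 per socket in MB: dies x (base + stacked)."""
    return ccds * (BASE_L3_MB + STACKED_L3_MB)


def l3_per_system_mb(sockets: int = 2, ccds: int = CCDS_PER_SOCKET) -> int:
    """Total L3 across all sockets in a system, in MB."""
    return sockets * l3_per_socket_mb(ccds)


if __name__ == "__main__":
    per_socket = l3_per_socket_mb()
    per_system = l3_per_system_mb()
    print(f"Per socket: {per_socket}MB")
    print(f"Dual-socket system: {per_system}MB ({per_system / 1024}GB)")
```

Under that assumption, each die carries 96MB, eight of them give 768MB per socket, and two sockets give 1,536MB, or 1.5GB.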

More info and analysis of the Milan-X, and what it means for Microsoft, is available from our friends at The Next Platform.

AMD claims accelerator crown

AMD launched its MI200 series of accelerators for AI and HPC systems, and claimed they are "the most advanced" of their kind in the world. AMD reckons its devices can outdo the competition (ie, Nvidia) in terms of FP64 performance in supercomputer applications, and top 380 tera-FLOPS of peak theoretical half-precision (FP16) performance for machine-learning applications.

The US Dept of Energy's Oak Ridge National Laboratory is going to use the hardware, along with third-gen Epyc processors, in a 1.5 exa-FLOPS HPE-built super called Frontier, according to AMD. Frontier is due to power up next year.

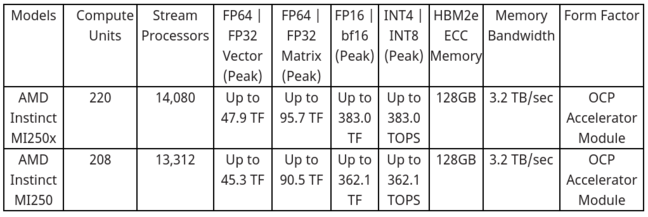

The MI200 series uses AMD's CDNA 2 architecture and multiple GPU dies within a package, and will come in two form factors: the MI250X and MI250 as OCP accelerator modules, and the MI210 as a PCIe card. The given specifications are as follows:

The MI250X is available now if you're in the market for an HPE Cray EX Supercomputer; otherwise, you'll have to wait until Q1 2022 to get the add-ons from a system builder.

Zen 4 roadmap teased

AMD also didn't want you to forget about its upcoming Zen 4 processor families. We're told to look out for a 96-core 5nm Zen 4-based server chip code-named Genoa. This will support DDR5 memory, CXL interconnects, and PCIe 5 devices, and will launch next year for data-center-class environments, according to AMD.

Then there's the Bergamo, a 5nm 128-core Zen 4c component due to arrive in 2023. The c in 4c means it's optimized for cloud providers – higher core density and performance per socket.

You can find more from AMD, and more analysis is coming up on The Next Platform. ®
