AMD Thinks It Can Solve The Power/heat Problem With Chiplets And Code

Interview

Semiconductors have been getting progressively hotter over the past few years as Moore's Law has slowed and more power is required to deliver higher performance generation over generation.

Because of this, chipmakers are having to get creative about how they design and build chips so that, even if the chips consume more power, they do so as efficiently as possible. It's no longer enough for a chip designer to pack more transistors into a chip and call it a day, AMD CTO Mark Papermaster tells The Register.

"That doesn't work anymore... That was back in the Moore's law era where the new node would give me the ability to pack in more transistors that are more performant and it wouldn't increase the energy… that's long gone."

This is a problem AMD has been exploring for years. In 2021 the company launched its 30x25 initiative, with the goal of delivering a 30-fold improvement in compute energy efficiency over a 2020 baseline by 2025.

And while these efforts present obvious advantages for the sustainability of computing, AMD's push to bolster the performance per watt of its chips is really a matter of survival.

As CEO Lisa Su illustrated so starkly in her ISSCC keynote earlier this year, at the current pace of technology a zettaFLOP-class supercomputer is certainly possible within about 10 years, but it would require far too much power to be practical. By her estimate, such a machine would need in excess of 500 MW to operate.
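Su's estimate implies a rough efficiency figure for such a machine. The back-of-the-envelope sketch below assumes it sustains exactly 1 zettaFLOP/s (10^21 FLOP/s) and draws exactly 500 MW. Both are round, illustrative numbers rather than a specification.

```python
# Back-of-the-envelope: what efficiency does a 500 MW zettascale machine imply?
# Assumptions: sustained 1e21 FLOP/s and exactly 500 MW (illustrative round numbers).

ZETTAFLOPS = 1e21      # floating-point operations per second
POWER_WATTS = 500e6    # 500 MW expressed in watts

def flops_per_watt(flops: float, watts: float) -> float:
    """Return efficiency in FLOP/s per watt (equivalently, FLOP per joule)."""
    return flops / watts

efficiency = flops_per_watt(ZETTAFLOPS, POWER_WATTS)
print(f"{efficiency / 1e9:,.0f} GFLOPS/W")
```

This works out to about 2,000 GFLOPS/W (2 TFLOPS/W). Because Su put the draw at more than 500 MW, that figure is an upper bound on the machine's implied efficiency.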

With AMD's deadline fast approaching, the chip biz has made significant progress, but it still has a long way to go, having achieved only a 13.5x improvement so far.
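The arithmetic below puts the 30x25 numbers in perspective. It is a small sketch that assumes the improvement compounds at a constant yearly rate over the five years from 2020 to 2025. This is a simplification, because real progress need not be uniform from year to year.

```python
# 30x25 arithmetic: the annual gain a 30x goal over five years implies,
# and how much of the goal remains after the reported 13.5x.
# Assumption: improvement compounds at a constant yearly rate (a simplification).

GOAL = 30.0       # target efficiency multiple vs. the 2020 baseline
YEARS = 5         # 2020 -> 2025
ACHIEVED = 13.5   # improvement reported so far

def annual_rate(total_gain: float, years: int) -> float:
    """Constant per-year multiplier that compounds to total_gain over `years` years."""
    return total_gain ** (1.0 / years)

rate = annual_rate(GOAL, YEARS)
remaining = GOAL / ACHIEVED   # further multiple still needed to reach the goal

print(f"required yearly gain: {rate:.2f}x")
print(f"remaining multiple:   {remaining:.2f}x")
```

The result is roughly 1.97x per year and about 2.22x still to go. Even after a 13.5x gain, AMD would need to more than double efficiency again to hit the target.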

Pulling the advanced packaging lever

This is an incredibly complex problem, and there is no single big lever you can pull to solve it, Papermaster explains. "We're on such an exponential curve of both compute and higher energy consumption that what [you] have to think about is what are the levers you have to bend the curve."

From the beginning, AMD has emphasized a mix of general-purpose, accelerated, and domain-specific compute capabilities, addressed largely by its portfolio of CPUs, GPUs, FPGAs, and accelerator IP.

AMD has also invested heavily in a number of technologies, including chiplets and advanced packaging, to engineer around the limits of modern semiconductor manufacturing techniques.

One of the first ways AMD optimized power efficiency was by disaggregating compute from I/O and memory and then using the best available process tech for each. The thinking is that some elements scale better with process shrinks than others. This is why AMD's fourth-gen Epyc CPUs use a 6nm process node for the I/O die and a 5nm node for the compute dies.

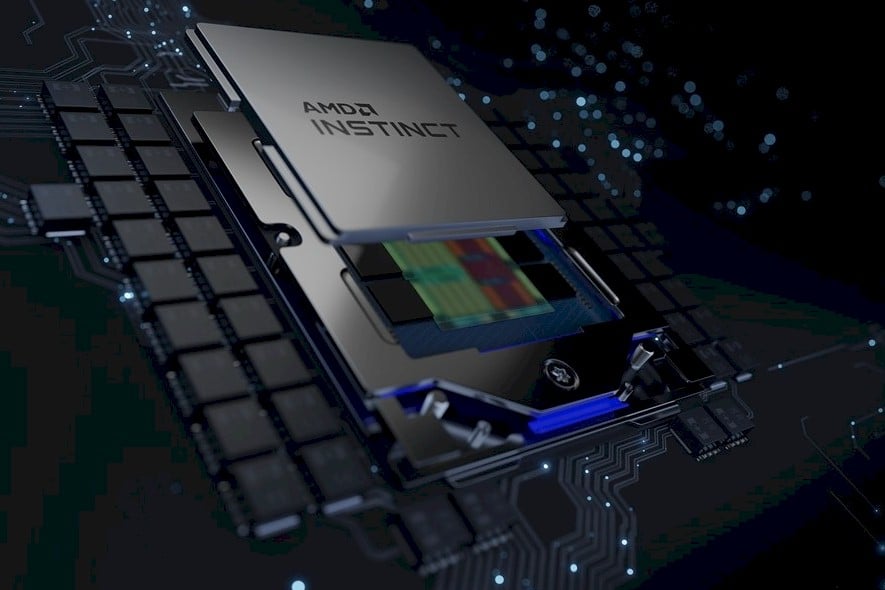

This approach can be extended through advanced packaging to put more silicon into a single product than the reticle limit would allow for one monolithic die. This is exactly what AMD did with its MI300-series accelerators, announced this week. Available in APU and GPU form factors, the chip is assembled from as many as 13 smaller chiplets (not counting the eight high-bandwidth memory stacks), which are meshed together using high-performance silicon interconnects.

For the MI300A (the "A" here stands for APU), AMD developed a technology called Smart Shift to dynamically divvy up power between the chip's 24 Zen 4 cores and its six CDNA 3 GPU dies depending on the workload.
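AMD hasn't detailed how Smart Shift makes its decisions, so the following is only a toy sketch of the general idea: a fixed package power budget is shifted between CPU and GPU according to demand. The 500 W budget, the per-domain floors, the `split_budget` helper and the proportional-split policy are all hypothetical. None of this is AMD's algorithm.

```python
from dataclasses import dataclass

# Toy model of dynamic power sharing inside one package.
# HYPOTHETICAL: budget, floors, and the proportional policy are illustrative,
# not AMD's Smart Shift implementation.

@dataclass
class PowerSplit:
    cpu_watts: float
    gpu_watts: float

def split_budget(budget: float, cpu_demand: float, gpu_demand: float,
                 cpu_floor: float, gpu_floor: float) -> PowerSplit:
    """Give each domain its floor, then share the rest in proportion to demand.

    Demands are relative weights, e.g. recent utilization in [0, 1].
    The two allocations always sum to the full budget.
    """
    spare = budget - cpu_floor - gpu_floor
    if spare < 0:
        raise ValueError("floors exceed the package budget")
    total = cpu_demand + gpu_demand
    # With no demand at all, split the spare power evenly.
    cpu_share = 0.5 if total <= 0 else cpu_demand / total
    return PowerSplit(
        cpu_watts=cpu_floor + spare * cpu_share,
        gpu_watts=gpu_floor + spare * (1.0 - cpu_share),
    )

# A GPU-heavy phase vs. a CPU-heavy phase under the same hypothetical 500 W budget.
gpu_heavy = split_budget(500, cpu_demand=0.2, gpu_demand=0.8, cpu_floor=50, gpu_floor=50)
cpu_heavy = split_budget(500, cpu_demand=0.9, gpu_demand=0.1, cpu_floor=50, gpu_floor=50)
print(gpu_heavy)
print(cpu_heavy)
```

The point of the sketch is that the package limit stays fixed while the split moves. Under this policy a GPU-bound phase hands most of the spare watts to the GPU dies, and a CPU-bound phase hands them back to the cores.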

Hot chips are only gonna get hotter

This approach doesn't change the fact that Moore's Law is slowing down. Packing more compute into a single package is still going to require more power, but integration does help reduce the energy needed to move data around.

"The more you can integrate, the less energy you're having to expend to go to [Serializer Deserializers] — that drives quite a bit of energy… — but there's innovation coming," he said.
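Papermaster's point about SerDes comes down to simple arithmetic: the power spent moving data equals the bit rate multiplied by the energy per bit. The sketch below uses made-up, round per-bit energy figures and an assumed 1 TB/s of die-to-die traffic purely to show the scaling. They are assumptions, not AMD numbers.

```python
# Interconnect power = (bits moved per second) x (energy per bit).
# HYPOTHETICAL figures: the pJ/bit values and bandwidth are illustrative round numbers,
# not measurements of any AMD product.

PICOJOULE = 1e-12

def link_power_watts(bandwidth_bytes_per_s: float, pj_per_bit: float) -> float:
    """Power drawn by a link moving `bandwidth_bytes_per_s` at `pj_per_bit` picojoules per bit."""
    bits_per_s = bandwidth_bytes_per_s * 8
    return bits_per_s * pj_per_bit * PICOJOULE

bandwidth = 1e12  # 1 TB/s of traffic between two dies (assumed)

off_package = link_power_watts(bandwidth, pj_per_bit=5.0)  # assumed SerDes-style link
on_package = link_power_watts(bandwidth, pj_per_bit=0.5)   # assumed short in-package link

print(f"off-package: {off_package:.1f} W, on-package: {on_package:.1f} W")
```

Whatever the real per-bit figures turn out to be, their ratio carries straight through to power at a given bandwidth. That is why keeping traffic inside the package pays off.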

Even so, hotter chips still pose a thermal management challenge. As we've previously reported, higher TDPs are already causing headaches for datacenter operators, especially those looking to deploy AI infrastructure at scale.

Papermaster argues these challenges aren't insurmountable and that they represent an opportunity for innovation in next-gen thermal management and datacenter infrastructure.

"As they build up that datacenter, it's worth it for them to invest in advanced cooling. It's worth it for them to have a leading edge, new sources of renewable energy, and new geographic locations that are more ideal to place these datacenters," he said. "I think there's a whole new area of innovation in advanced cooling, better thermal materials, better heat removal systems."

And with these technologies, Papermaster expects AMD and others will be able to push power targets even higher. "I don't see that we're at max wattage by any means," he says.

A software opportunity

However, beyond architectural, packaging and systems-level improvements, Papermaster emphasizes the opportunity presented by developing better software.

"The next frontier is getting a deeper partnership through the software stack. We're already started working closely with the leading edge AI practitioners… companies like Microsoft, like Oracle, Lamini and what we've done with Mosaic ML," he says. "Those kinds of partnerships really give us insights as to what we can do optimizing with the players who are providing the software solution."

We saw some of AMD's progress in driving higher performance through software with the launch of its ROCm 6 software platform this week. Just by optimizing the underlying software frameworks, AMD says it was able to improve LLM performance by anywhere from 1.3x to 2.6x for models leveraging vLLM, HIP Graph, and Flash Attention.

ROCm 6, combined with the architectural improvements brought by the MI300-series accelerators, translated into an 8x improvement in inference latency for the 70-billion-parameter Llama 2 model compared to the MI250 running ROCm 5.
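Speedup multiples and latency savings are easy to conflate. If "Nx improvement" means the new latency is 1/N of the old one (an assumption about how the figures are defined), then the fraction of latency eliminated is 1 - 1/N. The short sketch below applies that to the multiples quoted above.

```python
# Convert a speedup factor N into the fraction of latency removed: 1 - 1/N.
# Assumption: "Nx faster" means new_latency = old_latency / N.

def latency_reduction(speedup: float) -> float:
    """Fraction of the original latency eliminated by an N-times speedup."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return 1.0 - 1.0 / speedup

for n in (1.3, 2.6, 8.0):
    print(f"{n}x faster -> {latency_reduction(n):.1%} lower latency")
```

On that reading, 1.3x, 2.6x and 8x correspond to latency cuts of about 23.1%, 61.5% and 87.5%. An 8x gain therefore means responses take roughly one-eighth as long.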
