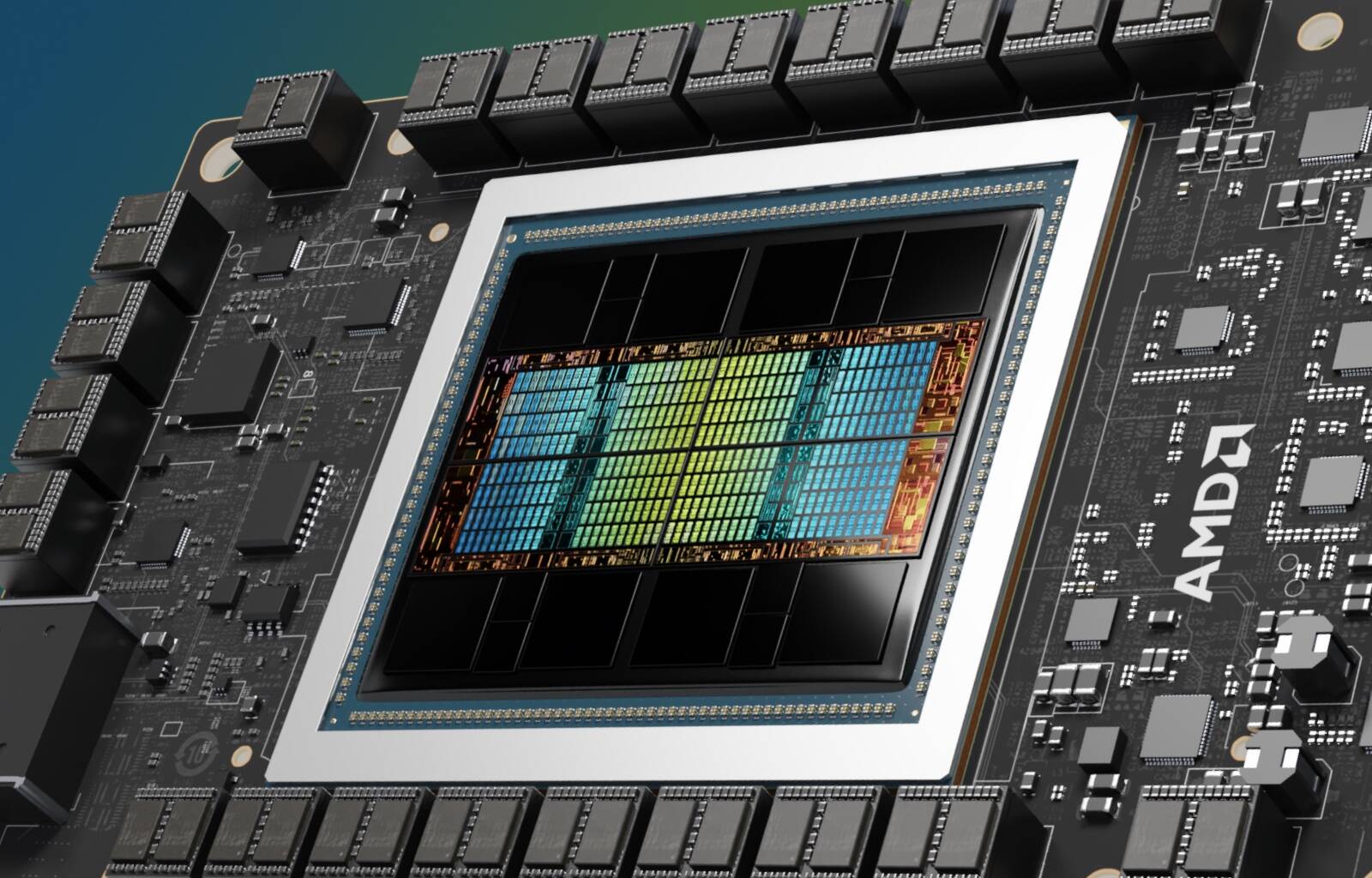

AMD Targets Nvidia H200 With 256GB MI325X AI Chips, Zippier MI355X Due In H2 2025

AMD boosted the VRAM on its Instinct accelerators to 256 GB of HBM3e with the launch of its next-gen MI325X AI accelerators during its Advancing AI event in San Francisco on Thursday.

The part builds upon AMD's previously announced MI300 accelerators introduced late last year, but swaps out its 192 GB of HBM3 modules for 256 GB of faster, higher capacity HBM3e. This approach is similar in many respects to Nvidia's own H200 refresh from last year, which kept the compute as is but increased memory capacity and bandwidth.

For many AI workloads, the faster the memory and the more of it you have, the better the performance you'll extract. AMD has sought to differentiate itself from Nvidia by cramming more HBM onto its chips, which makes them an attractive option for cloud providers like Microsoft looking to deploy trillion-parameter-scale models, like OpenAI's GPT-4o, on fewer nodes.

AMD's latest Instinct GPU boasts 256 GB of HBM3e, 6 TB/s of memory bandwidth, and 1.3 petaFLOPS of dense FP16 performance

However, the eagle-eyed among you may be scratching your heads wondering: wasn't this chip supposed to ship with more memory? Well, yes. When the part was first teased at Computex this spring, it was supposed to ship with 288 GB of VRAM on board, which is 50 percent more than its predecessor and roughly twice that of its main competitor, Nvidia's 141 GB H200.

Four months later, AMD has apparently changed its mind and is instead sticking with eight 32 GB stacks of HBM3e. Speaking to us ahead of AMD's Advancing AI event in San Francisco on Thursday, Brad McCredie, VP of GPU platforms at AMD, said the reason for the change came down to architectural decisions made early in the product's development.

"We actually said at Computex up to 288 GB, and that was what we were thinking at the time," he said. "There are architectural decisions we made a long time ago with the chip design on the GPU side that we were going to do something with software we didn't think was a good cost-performance trade off, and we've gone and implemented at 256 GB."

"It is what the optimized design point is for us with that product," VP of AMD's Datacenter GPU group Andrew Dieckmann reiterated.

While maybe not as memory-dense as AMD might have originally hoped, the accelerator does still deliver a decent uplift in memory bandwidth at 6 TB/s, compared to 5.3 TB/s on the older MI300X. The higher capacity and memory bandwidth, which work out to 2 TB and 48 TB/s per eight-GPU node, should help the accelerator support larger models while maintaining acceptable generation rates.
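The per-node figures follow directly from the per-GPU numbers. A minimal sketch of that arithmetic, assuming eight accelerators per node and decimal units (1 TB = 1,000 GB); the function name `node_totals` is ours, not AMD's:

```python
# Per-GPU figures are the ones quoted in the article.
# Assumption: eight accelerators per node, decimal units (1 TB = 1,000 GB).
GPUS_PER_NODE = 8


def node_totals(hbm_gb, bandwidth_tbs, gpus=GPUS_PER_NODE):
    """Return (total HBM in TB, aggregate memory bandwidth in TB/s) for one node."""
    return hbm_gb * gpus / 1000, bandwidth_tbs * gpus


for name, hbm_gb, bw_tbs in [("MI300X", 192, 5.3), ("MI325X", 256, 6.0)]:
    total_tb, total_bw = node_totals(hbm_gb, bw_tbs)
    print(f"{name}: {total_tb:.2f} TB HBM, {total_bw:.1f} TB/s per node")
```

By the same math, an MI300X node lands at roughly 1.5 TB and 42.4 TB/s, so the refresh adds about half a terabyte of HBM per box.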

Curiously, all that extra memory comes with a rather large increase in power draw, which is up 250 watts to 1,000 watts. This puts it in the same ballpark as Nvidia's upcoming B200 in terms of TDP.

Unfortunately, for all that extra power, it doesn't seem the chip's floating point performance has increased much from the 1.3 petaFLOPS of dense FP16 or 2.6 petaFLOPS at FP8 of its predecessor.

Still, AMD insists that in real-world testing, the part manages a 20 and 40 percent lead over Nvidia's H200 in inference performance in Llama 3.1 70B and 405B, respectively. When training Llama 2 70B, performance is far closer, with AMD claiming a roughly 10 percent advantage for a single MI325X and equivalent performance at a system level.

AMD Instinct MI325X accelerators are currently on track for production shipments in Q4 with systems from Dell Technologies, Eviden, Gigabyte Technology, Hewlett Packard Enterprise, Lenovo, Supermicro and others hitting the market in Q1 2025.

More performance coming in second half of 2025

While the MI325X may not ship with 288 GB of HBM3e, AMD's next Instinct chip, the MI355X, due out in the second half of next year, will.

Based on AMD's upcoming CDNA 4 architecture, it also promises higher floating point performance, up to 9.2 dense petaFLOPS when using the new FP4 or FP6 data types supported by the architecture.

If AMD can pull it off, that'll put it in direct contention with Nvidia's B200 accelerators, which are capable of roughly 9 petaFLOPS of dense FP4 performance.

For those still running more traditional AI workloads (funny that there's already such a thing) using FP/BF16 and FP8 data types, AMD says it's boosted performance by roughly 80 percent to 2.3 petaFLOPS and 4.6 petaFLOPS, respectively.
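As a quick sanity check on that "roughly 80 percent" figure, here is a small sketch comparing the MI355X numbers against the 1.3 and 2.6 petaFLOPS quoted earlier for the current generation. The helper name `uplift_percent` is purely illustrative:

```python
def uplift_percent(old, new):
    """Percentage increase going from old to new."""
    return (new / old - 1.0) * 100.0


# Dense petaFLOPS quoted in the article: current generation vs. MI355X.
fp16_uplift = uplift_percent(1.3, 2.3)
fp8_uplift = uplift_percent(2.6, 4.6)

print(f"FP16/BF16 uplift: {fp16_uplift:.0f} percent")
print(f"FP8 uplift: {fp8_uplift:.0f} percent")
```

Both ratios come out a little under 80 percent, which squares with AMD's rounded claim.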

AMD's next-gen MI355X will reportedly deliver on its 288 GB promise and boost system performance to 74 petaFLOPS of FP4

In an eight-GPU node, this will scale to 2.3 terabytes of HBM and 74 petaFLOPS of FP4 performance, which is enough to fit a 4.2 trillion parameter model into a single box at that precision, according to AMD.
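The node-level numbers can be reproduced from the per-GPU specs. The sketch below assumes decimal units and counts model weights only at 4 bits (0.5 bytes) per parameter, ignoring KV cache, activations, and any runtime overhead, so it gives a ceiling rather than a practical limit. All constant and function names are ours:

```python
# Per-GPU MI355X figures quoted in the article.
GPUS_PER_NODE = 8
HBM_PER_GPU_GB = 288
FP4_PFLOPS_PER_GPU = 9.2

# Assumption: FP4 weights take 4 bits, i.e. half a byte per parameter.
BYTES_PER_FP4_PARAM = 0.5


def max_params_weights_only(total_hbm_gb, bytes_per_param):
    """Upper bound on parameter count if HBM held nothing but weights."""
    return total_hbm_gb * 1e9 / bytes_per_param


node_hbm_gb = GPUS_PER_NODE * HBM_PER_GPU_GB
node_fp4_pflops = GPUS_PER_NODE * FP4_PFLOPS_PER_GPU
ceiling = max_params_weights_only(node_hbm_gb, BYTES_PER_FP4_PARAM)

print(f"Node HBM: {node_hbm_gb / 1000:.1f} TB")
print(f"Node FP4: {node_fp4_pflops:.0f} petaFLOPS")
print(f"Weights-only ceiling: {ceiling / 1e12:.1f} trillion parameters")
```

The weights-only ceiling works out to roughly 4.6 trillion parameters, so a model in the low trillions fits in one box with some memory left over for everything else.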

AMD inches closer to first Ultra Ethernet NICs

Alongside its new accelerators, AMD also teased its answer to Nvidia's InfiniBand and Spectrum-X compute fabrics and BlueField data processors, due out early next year.

Developed by the AMD Pensando network team, the Pensando Pollara 400 is expected to be the first NIC with support for the Ultra Ethernet Consortium specification.

In an AI cluster, these NICs would support the scale-out compute network used to distribute workloads across multiple nodes. In these environments, packet loss can result in higher tail latencies and therefore slower time to train models. According to AMD, on average, 30 percent of training time is spent waiting for the network to catch up.
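To put that 30 percent figure in perspective, here is a back-of-the-envelope, Amdahl-style sketch of how much faster training could get if some share of the network wait were eliminated. It assumes the rest of the training time is unaffected, which is a simplification, and the function name `max_speedup` is hypothetical:

```python
def max_speedup(network_wait_fraction, wait_reduction):
    """Best-case speedup when a fraction of network wait time is removed.

    network_wait_fraction: share of training time spent waiting on the network.
    wait_reduction: share of that waiting eliminated (0.0 = none, 1.0 = all).
    Assumes compute time is unchanged (an Amdahl's-law style bound).
    """
    remaining_time = 1.0 - network_wait_fraction * wait_reduction
    return 1.0 / remaining_time


# AMD's claimed average: 30 percent of training time is network wait.
print(f"All waiting removed:  {max_speedup(0.30, 1.0):.2f}x")
print(f"Half of it removed:   {max_speedup(0.30, 0.5):.2f}x")
```

Even in the unrealistic best case where the network never stalls, the ceiling is a bit over 1.4x, which is still a meaningful chunk of cluster time.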

Pollara 400 will come equipped with a single 400 GbE interface while supporting the same kind of packet spraying and congestion control tech we've seen from Nvidia, Broadcom and others to achieve InfiniBand-like loss and latencies.

One difference the Pensando team was keen to highlight was the use of its programmable P4 engine versus a fixed function ASIC or FPGA. Because the Ultra Ethernet specification is still in its infancy, it's expected to evolve over time. So, a part that can be reprogrammed on the fly to support the latest standard offers some flexibility for early adopters.

Another advantage Pollara may have over something like Nvidia's Spectrum-X Ethernet networking platform is that the Pensando NIC won't require a compatible switch to achieve ultra-low loss networking.

In addition to the backend network, AMD is also rolling out a DPU called Salina, which features twin 400 GbE interfaces, and is designed to service the front-end network by offloading various software defined networking, security, storage, and management functions from the CPU.

Both parts are already sampling to customers with general availability slated for the first half of 2025.
