AMD Downplays Risk Of Growing Blast Radius, Licensing Fees From Manycore Chips

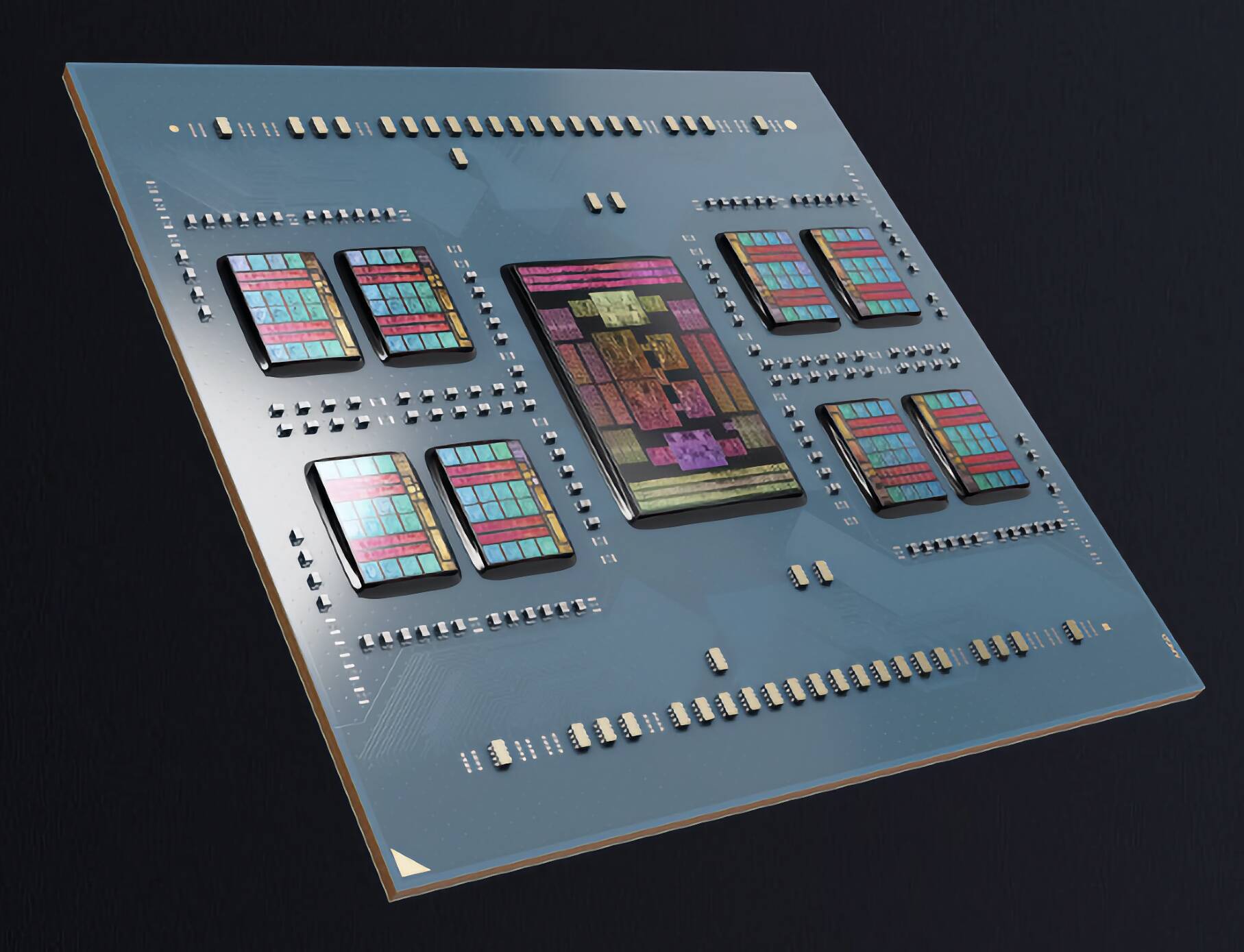

As AMD pushes to extend its share of the datacenter CPU market, it's urging CTOs to consider how many of their aging Intel systems could be consolidated onto just one of its manycore chips.

However, there are legitimate concerns about the blast radius of these manycore systems. A single two-socket Epyc box can now pack as many as 384 cores and 768 threads, which means a motherboard, NIC, PSU, or memory failure could take down far more workloads than ever before.
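To make the trade-off concrete, here is a minimal Python sketch of blast radius, assuming a fleet of identical hosts where losing one host takes out an equal slice of installed capacity. The 3,072-core fleet and the two-socket, 24-core comparison box are hypothetical figures chosen for illustration; only the 2 x 192-core configuration comes from the text.

```python
import math


def host_size(sockets: int, cores_per_socket: int, smt: int = 2) -> tuple[int, int]:
    """Return (cores, hardware threads) for one server with SMT enabled."""
    cores = sockets * cores_per_socket
    return cores, cores * smt


def blast_radius(fleet_cores: int, cores_per_host: int) -> float:
    """Fraction of installed capacity lost when a single host fails.

    Assumes identical hosts and rounds the host count up so the fleet
    always covers the required core count.
    """
    hosts = math.ceil(fleet_cores / cores_per_host)
    return 1 / hosts


# Two 192-core sockets with two threads per core
big_cores, big_threads = host_size(sockets=2, cores_per_socket=192)
# Hypothetical older box: two 24-core sockets
small_cores, _ = host_size(sockets=2, cores_per_socket=24)

FLEET_CORES = 3072  # hypothetical capacity requirement
print(f"{big_cores}-core hosts: {blast_radius(FLEET_CORES, big_cores):.2%} lost per failure")
print(f"{small_cores}-core hosts: {blast_radius(FLEET_CORES, small_cores):.2%} lost per failure")
```

Under these assumptions, a single host failure costs 12.5 percent of capacity with 384-core boxes versus roughly 1.6 percent with 48-core boxes.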

Speaking with press during AMD's Advancing AI event late last week, Dan McNamara, who leads the company's server business, argued that many of these concerns are overblown.

"We've done a lot of analysis on this concern around blast radius, and we find it's a little bit unfounded," he said during a press Q&A. "We hear that a fair amount in the enterprise as people are trying to go from 16 or 24 cores to even 64. We can show a lot of data to show that it's actually more resilient and tolerant as you go up in terms of core counts, whether you go 1P or 2P."

The conversation around server modernization and consolidation is by no means new or unique to AMD. "The entire industry is going here when you look at our competitors," McNamara emphasized.

Just a few weeks ago, Intel revealed its Granite Rapids Xeon 6 processors with 128 of its performance cores, and this spring, the x86 giant touted a 144-core E-core part aimed at cloud and web-scale deployments that benefit from more cores rather than high clocks or vector acceleration.

However, what has changed is just how dense these chips are getting. Over the past two years, x86 processors have gone from 64 cores at the high end to 96, 128, and now 144 and 192. By Q1 2025, the densest parts will reach 288 cores in a single socket.

Because of this, we're no longer talking about a 2:1 or 3:1 consolidation but as much as a 7:1 ratio. On stage, AMD CEO Lisa Su boasted that just 131 Epyc servers could now replace 1,000 top-specced Intel Cascade Lake systems, resulting in a 68 percent reduction in energy consumption.
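The arithmetic behind those figures is easy to check. This Python sketch takes the 1,000-to-131 replacement and the 68 percent energy reduction at face value; the per-server power comparison it derives is an extrapolation that assumes the 68 percent applies to the fleet as a whole and that every server within each fleet draws the same power.

```python
def consolidation_ratio(old_hosts: int, new_hosts: int) -> float:
    """How many old servers each new server replaces."""
    return old_hosts / new_hosts


def relative_power_per_new_host(old_hosts: int, new_hosts: int, energy_reduction: float) -> float:
    """Power of one new host relative to one old host.

    Assumes the stated fleet-wide energy reduction and equal power draw
    within each fleet.
    """
    new_fleet_energy = (1 - energy_reduction) * old_hosts  # in units of one old host
    return new_fleet_energy / new_hosts


ratio = consolidation_ratio(1000, 131)
per_host = relative_power_per_new_host(1000, 131, 0.68)
print(f"consolidation ratio: {ratio:.2f}:1")
print(f"each new host draws about {per_host:.2f}x an old host's power")
```

That works out to roughly 7.6:1, with each new box drawing about 2.4 times the power of a machine it replaces, while the fleet as a whole uses less than a third of the energy.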

Yes, those chips aren't cheap: AMD's 192-core flagship carries a tray price of $14,813, not to mention the terabytes of DDR5 a system might need to achieve such a high consolidation ratio. But consolidation also means a lot of components, power, and space you no longer need, or can repurpose for other endeavors.

What about per-core licensing?

Having said all that, AMD's Epyc lineup isn't all high-core-count parts. The lineup still includes SKUs with as few as eight processor cores.

Part of this is down to per-core licensing schemes, which can push enterprise customers toward certain SKUs even if they aren't necessarily the best-suited to the job.

"As we go forward, there are two things happening: One is that 'boy I've got an aging fleet, how do I do this consolidation,' but then the other one is, how do you get around, whether it's Broadcom or Ansys or any one of these ISVs that are basically charging per core," McNamara said.

He said customers facing these challenges have a couple of options. "One is there are areas where there's a fixed fee for XYZ number of cores, or there's what Lisa showed today. We drive higher perf per core so you can get more work done, or, in a virtualization environment, get more vCPUs per core, therefore you can save money on a core basis."

In other words, more cores aren't the only way to get more work done. If licensing caps the number of cores you can run, whether in HPC or virtualization, a higher-performance core may let you work around that limit.
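A back-of-the-envelope Python sketch of that trade-off follows. The per-core fee, the throughput target, and the relative per-core performance figures are all hypothetical, and the model assumes simple per-core pricing; real ISV licensing terms vary and may include fixed-fee tiers, as McNamara notes.

```python
import math


def cores_needed(target_throughput: float, perf_per_core: float) -> int:
    """Smallest whole number of cores that meets the throughput target."""
    return math.ceil(target_throughput / perf_per_core)


def per_core_license_cost(cores: int, fee_per_core: float) -> float:
    """Total license bill under a simple (hypothetical) per-core pricing model."""
    return cores * fee_per_core


FEE_PER_CORE = 1_000.0  # hypothetical fee per licensed core
TARGET = 100.0          # hypothetical throughput, in units of a baseline core

for label, perf in [("baseline core", 1.0), ("core 25% faster", 1.25)]:
    cores = cores_needed(TARGET, perf)
    cost = per_core_license_cost(cores, FEE_PER_CORE)
    print(f"{label}: {cores} cores, ${cost:,.0f} in licenses")
```

Under these made-up numbers, a 25 percent faster core cuts the licensed core count from 100 to 80, and the license bill by the same proportion, regardless of what the hardware itself costs.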

McNamara was keen to highlight AMD's 64-core 9575F, a 400 W part that can drop into existing SP5 boards built for fourth-gen Epyc and boasts clock speeds up to 5 GHz.

"While we're talking about that [part] for GPU hosts, it plays extremely well in some of these technical compute and even some virtualized applications," he said.

The all-new Epyc 9175F is another interesting example. The CPU is a 16-core part also capable of clocking to 5 GHz. That might not sound exciting until you realize it boasts half a gigabyte of L3 cache. To get there, the part uses 16 core complex dies (CCDs) with 112 of their 128 cores fused off, leaving one active core per die and 32 MB of L3 per core.
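The cache math checks out. This short Python sketch assumes eight cores and 32 MB of L3 per CCD, figures that follow from the 128-core, 16-die description of the 9175F.

```python
CCDS = 16            # core complex dies in the package
CORES_PER_CCD = 8    # 128 cores spread across 16 dies
L3_PER_CCD_MB = 32   # each CCD carries its own L3 slice
FUSED_OFF = 112      # cores disabled on the 9175F

physical_cores = CCDS * CORES_PER_CCD        # 128
active_cores = physical_cores - FUSED_OFF    # 16, one per CCD
total_l3_mb = CCDS * L3_PER_CCD_MB           # 512 MB, half a gigabyte
l3_per_active_core_mb = total_l3_mb / active_cores  # 32.0

print(physical_cores, active_cores, total_l3_mb, l3_per_active_core_mb)
```

Because the L3 on each die stays fully enabled while seven of its eight cores are switched off, each surviving core gets a CCD's entire 32 MB slice to itself.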

We're told this part is designed to address a specific group of HPC customers that prioritize large caches and high clock speeds due to core-licensing limitations.

So, while AMD may be pushing its manycore parts in the enterprise, it's clearly not the chip designer's only trick for stealing share from Intel.

"We're pretty excited about where we are with respect to enterprise traction going forward. It's either scale up with the very, very highest-performing cores or the highest density," McNamara said. ®
