AMD Adds 4th-gen Epycs To AWS In HPC And Normie Workload Flavors

AMD's fourth-gen Epyc processors have arrived on Amazon Web Services in your choice of general-purpose and high-performance computing (HPC) instances.

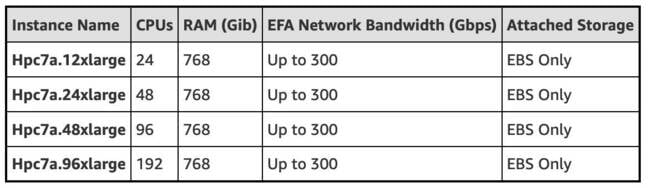

Kicking things off with Amazon's Hpc7a instances, the VMs are said to be optimized for compute and memory bandwidth-constrained workloads, such as computational fluid dynamics and numerical weather prediction. Amazon claims the new instances are as much as 2.5x faster than its older Hpc6a instances, which used AMD's 3rd-gen Epycs.

The VMs are available with your choice of 24, 48, 96, or 192 cores. Normally the vCPU count listed would refer to threads, but in this case, Amazon says simultaneous multi-threading (SMT) has been disabled to maximize performance. This suggests Amazon is using a pair of 96-core CPUs, likely the Epyc 9654, which can boost to 3.7GHz.

What we do know is that Amazon isn't using AMD's cache-stacked Genoa-X CPUs. Those chips were launched at the chipmaker's datacenter and AI event in June, and can be had with up to 1.1GB of L3 cache. Even without the ocean of extra L3, AMD's 4th-gen Epycs added a number of features likely to benefit HPC and AI-based workloads. These include support for AVX-512, Vector Neural Network Instructions (VNNI), BFloat16, and higher memory bandwidth courtesy of up to 12 channels of DDR5 per socket.

Amazon's Hpc7a instances are available in four SKUs with between 24 and 192 vCPUs each

While you can tune the core count of these instances — something that will benefit those running workloads with per-core licensing — the rest of the specs remain the same. All four SKUs come equipped with 768GB of memory, 300Gbps of inter-node networking courtesy of Amazon's Elastic Fabric Adapter, and 25Gbps external bandwidth.

This standardization is no accident. By keeping the memory configuration consistent, customers can tune for a specific ratio of memory or bandwidth per core. This is beneficial as HPC workloads are more often limited by memory bandwidth than core count. Nor is this unique to the AMD instances: Amazon has done the same on its previously announced Graviton3E-based Hpc7g instances.
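To make the ratio concrete, here is a minimal sketch of the memory-per-core arithmetic, using only the figures quoted above (768GB of memory on every SKU, and 24, 48, 96, or 192 cores). The function and variable names are illustrative, not anything Amazon publishes.

```python
# Figures from the article: every Hpc7a size ships with 768GB of memory.
TOTAL_MEMORY_GB = 768
CORE_COUNTS = [24, 48, 96, 192]


def memory_per_core(total_gb: int, cores: int) -> float:
    """Return the GB of memory available to each core (hypothetical helper)."""
    return total_gb / cores


# Fewer active cores means each one gets a larger share of the same memory.
ratios = {cores: memory_per_core(TOTAL_MEMORY_GB, cores) for cores in CORE_COUNTS}

for cores, gb in ratios.items():
    print(f"{cores:>3} cores -> {gb:g} GB per core")
```

Halving the core count doubles the memory share per core, which is the knob customers are being handed. The same would presumably hold for memory bandwidth per core, though the article gives no bandwidth figure to plug in.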

The high-speed internode network is also key, as Amazon expects customers to distribute their workloads across multiple instances, like nodes in a cluster, as opposed to running them in a single VM. As such, they support Amazon's Batch and ParallelCluster orchestration platforms as well as its FSx for Lustre storage service.

AWS M7a instances get a performance boost

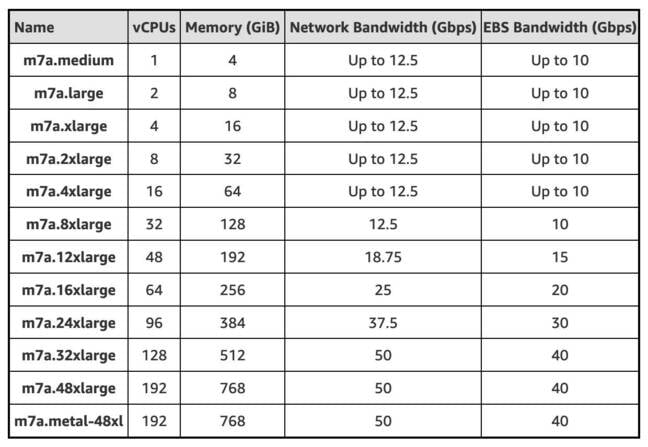

For those who may not need the fastest clock speeds or memory bandwidth, Amazon also launched the M7a general-purpose instances it teased back in June.

Amazon claims the instances deliver up to 50 percent higher performance than its Epyc Milan-based M6a VMs and can be had with up to 192 vCPUs, each capable of 3.7GHz. Assuming Amazon has SMT enabled on these instances (we've reached out for clarification), this suggests a single Epyc 9654, which coincidentally tops out at 3.7GHz.
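The socket-count guesswork for both instance families comes down to the same back-of-the-envelope calculation. The sketch below assumes a 96-core part such as the Epyc 9654 and two threads per core when SMT is on. The function name and defaults are illustrative, and the M7a result rests on the unconfirmed assumption that SMT is enabled.

```python
import math


def sockets_needed(vcpus: int, smt_enabled: bool, cores_per_socket: int = 96) -> int:
    """Estimate how many CPU sockets back a given vCPU count (hypothetical helper).

    Assumes each physical core exposes two threads with SMT on, one with SMT off.
    """
    threads_per_core = 2 if smt_enabled else 1
    physical_cores = math.ceil(vcpus / threads_per_core)
    return math.ceil(physical_cores / cores_per_socket)


# Hpc7a: SMT disabled, so 192 vCPUs are 192 physical cores.
hpc7a_sockets = sockets_needed(192, smt_enabled=False)

# M7a: assuming SMT is enabled, 192 vCPUs are 96 physical cores.
m7a_sockets = sockets_needed(192, smt_enabled=True)

print(f"Hpc7a: {hpc7a_sockets} socket(s), M7a: {m7a_sockets} socket(s)")
```

Under those assumptions the largest Hpc7a size works out to two sockets and the largest M7a size to one, matching the estimates in the text.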

Amazon's M7a instances can be had with up to 192 vCPUs and 768GB of memory

The VMs are available in a dozen different configurations, ranging from a single vCPU with 1GB of memory and 12.5Gbps of network bandwidth to a massive 192-vCPU instance with 768GB of memory and 50Gbps of network throughput.

All of these instances are supported by Amazon's custom Nitro data processing units (DPUs) which offload a number of functions like networking, storage, and security from the host CPU.

If you're looking for something on the Intel side of things, we recently took a look at the company's M7i instances, which are based on a pair of 48-core Sapphire Rapids Xeons.

Amazon's M7a instances are generally available now in the cloud provider's North Virginia, Ohio, Oregon, and Ireland datacenters. ®
